In the sight of my wearable camera: Classifying my visual experience

Paper and Code

Apr 26, 2013

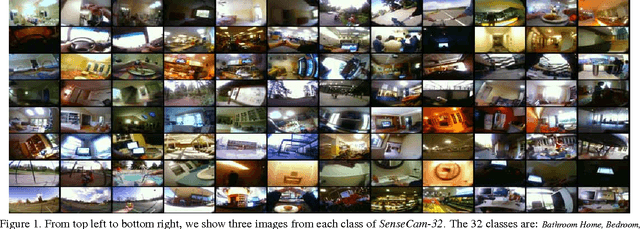

We introduce and analyze a new dataset that resembles the input to biological vision systems much more closely than most previously published ones. Our analysis led to several important conclusions. First, it is possible to disambiguate among dozens of visual scenes (locations) encountered over the course of several weeks of a human life with an accuracy of over 80%. This opens up the possibility of numerous novel vision applications, from early detection of dementia to everyday use of wearable camera streams for automatic reminders and visual stream exchange. Second, our experimental results indicate that generative models, such as Latent Dirichlet Allocation or Counting Grids, are better suited to this type of data, as they are more robust to overtraining and cope well with images that are low-resolution, blurred, and characterized by relatively random clutter and a mix of objects.
