In Automation We Trust: Investigating the Role of Uncertainty in Active Learning Systems

Paper and Code

Apr 02, 2020

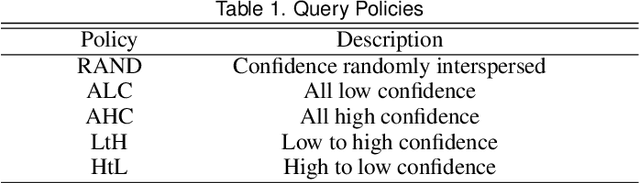

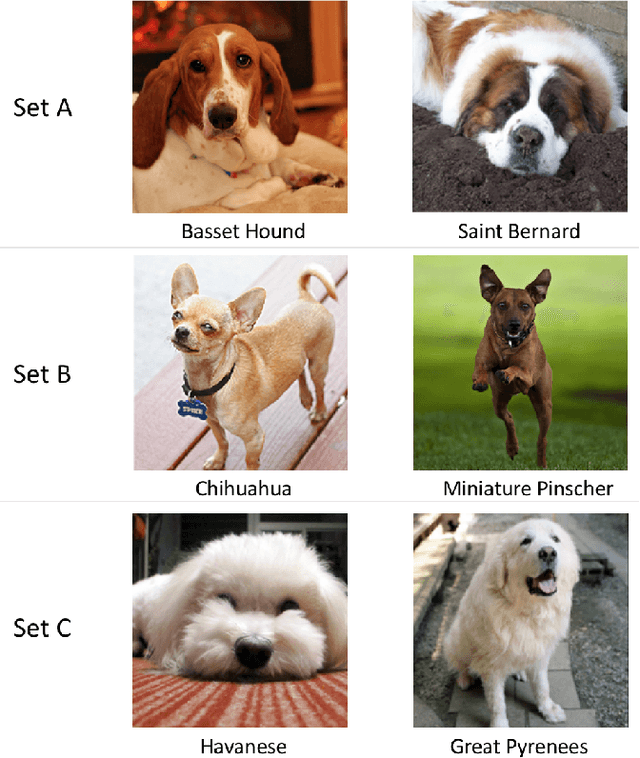

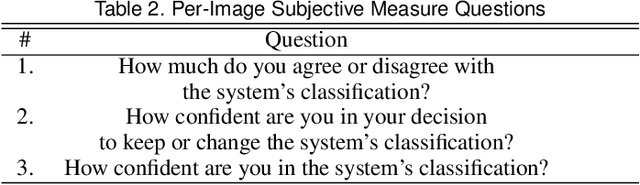

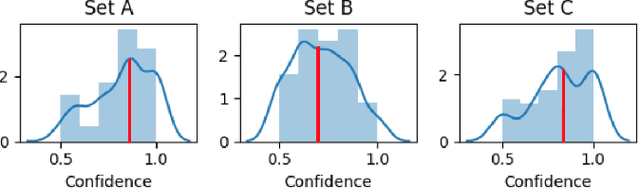

We investigate how different active learning (AL) query policies coupled with classification uncertainty visualizations affect analyst trust in automated classification systems. A current standard policy for AL is to query the oracle (e.g., the analyst) to refine labels for datapoints where the classifier has the highest uncertainty. This is an optimal policy for the automation system as it yields maximal information gain. However, model-centric policies neglect the effects of this uncertainty on the human component of the system and the consequent manner in which the human will interact with the system post-training. In this paper, we present an empirical study evaluating how AL query policies and visualizations lending transparency to classification influence trust in automated classification of image data. We found that query policy significantly influences an analyst's trust in an image classification system, and we use these results to propose a set of oracle query policies and visualizations for use during AL training phases that can influence analyst trust in classification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge