Improving uplift model evaluation on RCT data


Oct 05, 2022

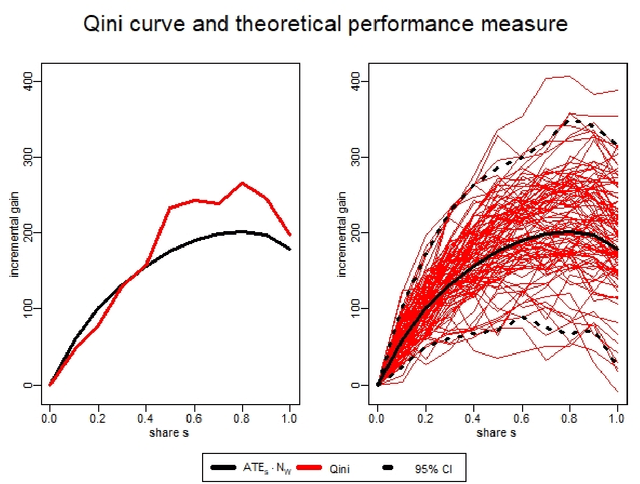

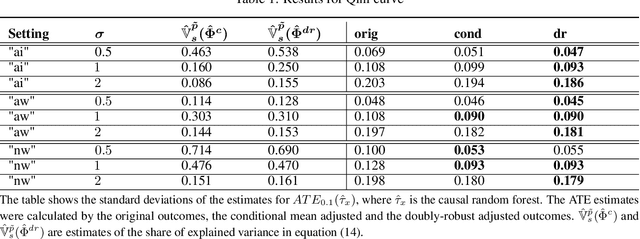

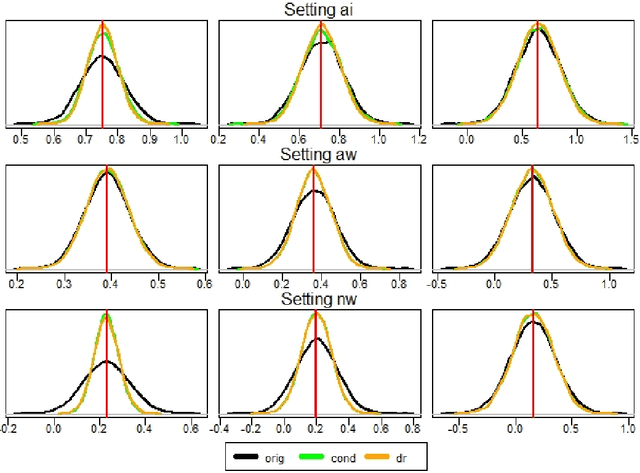

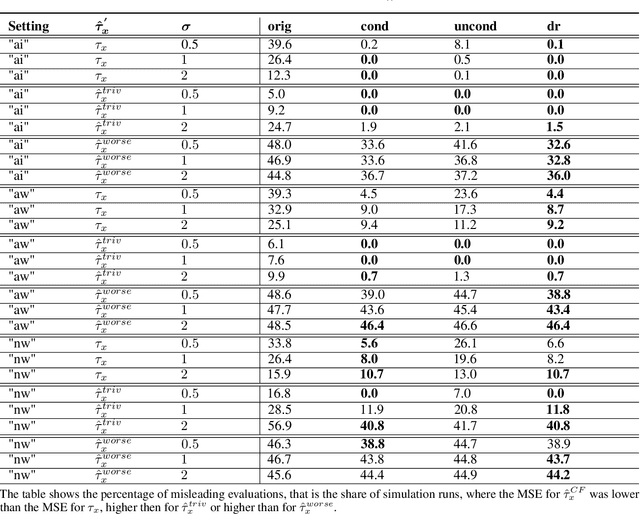

Estimating treatment effects is one of the most challenging and important tasks for data analysts. Traditional statistical methods aim to estimate average treatment effects over a population. While highly useful, such average treatment effects do not help to decide which individuals benefit most from the treatment. This is where uplift modeling becomes important. Uplift models help to select the right individuals for treatment in order to maximize the overall treatment effect (uplift). A challenging problem in uplift modeling is evaluating the models. Previous literature suggests methods such as the Qini curve and the transformed outcome mean squared error. However, these metrics suffer from high variance: their evaluations are strongly affected by random noise in the data, which makes them to a certain degree arbitrary. In this paper, we analyze the variance of uplift evaluation metrics on randomized controlled trial (RCT) data in a statistically sound manner. We propose outcome adjustment methods and show, both theoretically and empirically, that they reduce the variance of the uplift evaluation metrics. Our statistical analysis and the proposed outcome adjustment methods are a step towards better evaluation practice in uplift modeling.
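To make the variance issue concrete, the following is a minimal sketch of the transformed outcome and its mean squared error on simulated RCT data. It is an illustration under stated assumptions, not the paper's implementation: the function names, the simulation, and the constant shift `m` are all hypothetical. The sketch relies only on the standard fact that, with a known randomization probability `p`, the transformed outcome `Y* = Y (W - p) / (p (1 - p))` has the treatment effect as its expectation, and that subtracting a quantity independent of the treatment assignment from `Y` leaves this expectation unchanged while it can shrink the variance considerably.

```python
import numpy as np


def transformed_outcome(y, w, p):
    """Transformed outcome Y* = Y * (W - p) / (p * (1 - p)).

    Under randomization with known treatment probability p,
    the expectation of Y* equals the treatment effect.
    """
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    return y * (w - p) / (p * (1.0 - p))


def transformed_outcome_mse(y, w, p, tau_hat):
    """Mean squared error between Y* and predicted uplift tau_hat."""
    tau_hat = np.asarray(tau_hat, dtype=float)
    return float(np.mean((transformed_outcome(y, w, p) - tau_hat) ** 2))


def simulate_rct(n, seed, p=0.5, true_uplift=1.0, baseline=10.0):
    """Hypothetical RCT: constant uplift on top of a large baseline outcome."""
    rng = np.random.default_rng(seed)
    w = rng.binomial(1, p, size=n)
    y = baseline + true_uplift * w + rng.normal(0.0, 1.0, size=n)
    return y, w


p = 0.5
y, w = simulate_rct(n=20_000, seed=0, p=p)

# Illustrative adjustment (an assumption of this sketch): shift the outcome
# by a constant m estimated on a separate sample, so that m is independent
# of the treatment assignment in the evaluation data.
y_holdout, _ = simulate_rct(n=20_000, seed=1, p=p)
m = y_holdout.mean()

raw = transformed_outcome(y, w, p)
adjusted = transformed_outcome(y - m, w, p)

print("mean of raw Y*:          ", raw.mean())
print("mean of adjusted Y*:     ", adjusted.mean())
print("variance of raw Y*:      ", raw.var())
print("variance of adjusted Y*: ", adjusted.var())
```

Both versions estimate the same average uplift, but the raw transformed outcome inherits the scale of the baseline outcome, so its variance is far larger. Any metric built on it, such as `transformed_outcome_mse`, is correspondingly noisier.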
