Improving Minimax Group Fairness in Sequential Recommendation


Jan 30, 2025

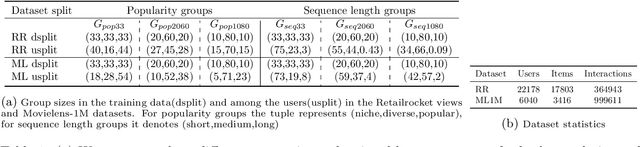

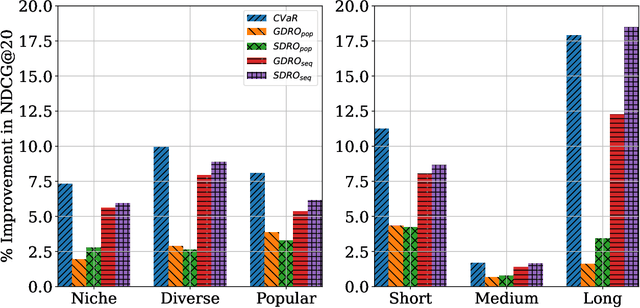

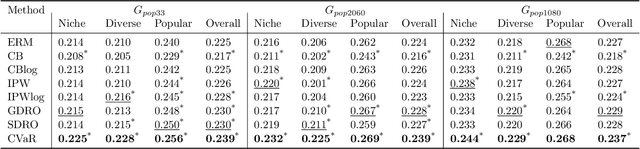

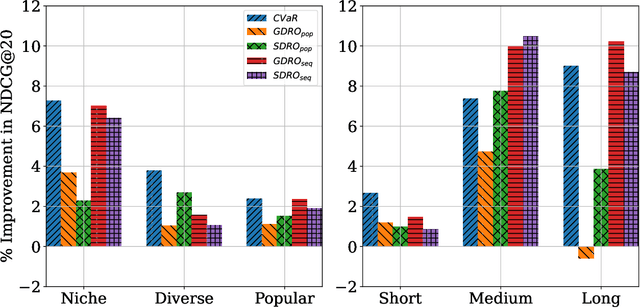

Training sequential recommenders such as SASRec with uniform sample weights achieves good overall performance but can fall short on specific user groups. One example is popularity bias, where mainstream users receive better recommendations than viewers of niche content. To improve recommendation quality across diverse user groups, we explore three Distributionally Robust Optimization (DRO) methods: Group DRO, Streaming DRO, and Conditional Value at Risk (CVaR) DRO. While Group and Streaming DRO rely on group annotations and struggle with users who belong to multiple groups, CVaR DRO requires no such annotations and naturally handles overlapping groups. In experiments on two real-world datasets, we show that the DRO methods outperform standard training, with CVaR delivering the best results. Additionally, we find that Group and Streaming DRO are sensitive to the choice of group used for loss computation. Our contributions include (i) a novel application of CVaR to recommenders, (ii) showing that the DRO methods improve group metrics as well as overall performance, and (iii) demonstrating CVaR's effectiveness in the practical scenario of intersecting user groups.
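To make the contrast between the annotation-free and annotation-based objectives concrete, the following is a minimal sketch in plain Python. It is not the paper's implementation. It assumes the common "average of the worst fraction of losses" form of CVaR and an exponentiated-gradient reweighting of group losses for Group DRO; the function names `cvar_loss` and `group_dro_step` and the parameters `alpha` and `eta` are hypothetical and chosen only for illustration.

```python
import math


def cvar_loss(losses, alpha):
    """Average of the worst ceil(alpha * n) per-user losses.

    Assumed formulation: no group labels are needed, because the
    objective simply focuses on whichever users currently have the
    highest loss. alpha = 1.0 recovers the ordinary mean loss.
    """
    if not losses:
        raise ValueError("losses must be non-empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    k = max(1, math.ceil(alpha * len(losses)))
    worst = sorted(losses, reverse=True)[:k]
    return sum(worst) / k


def group_dro_step(group_losses, weights, eta):
    """One exponentiated-gradient update of the group weights.

    Assumed formulation: each group's weight grows with its current
    loss, so poorly served groups dominate the weighted objective.
    Requires one loss per annotated group.
    Returns (new_weights, weighted_loss).
    """
    scaled = [w * math.exp(eta * l) for w, l in zip(weights, group_losses)]
    total = sum(scaled)
    new_weights = [s / total for s in scaled]
    weighted = sum(w * l for w, l in zip(new_weights, group_losses))
    return new_weights, weighted


if __name__ == "__main__":
    per_user = [0.2, 1.0, 0.4, 2.0]          # made-up per-user losses
    print(cvar_loss(per_user, alpha=0.5))     # mean of the two largest losses
    print(group_dro_step([1.0, 2.0], [0.5, 0.5], eta=1.0))
```

In this sketch, `cvar_loss` takes only per-user losses, which is why overlapping group membership poses no difficulty, whereas `group_dro_step` needs each user assigned to a group before `group_losses` can be computed.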
