Improving Generalization via Attribute Selection on Out-of-the-box Data

Jul 26, 2019

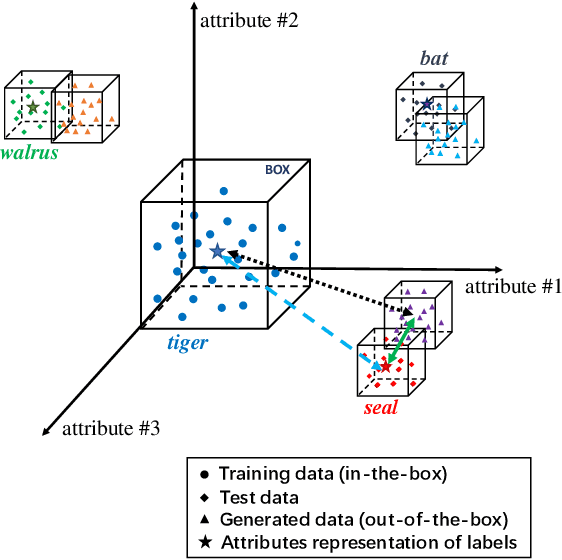

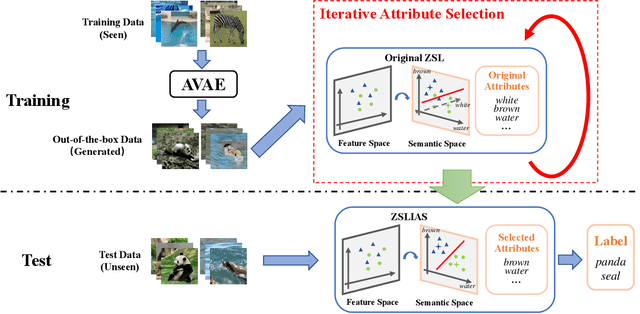

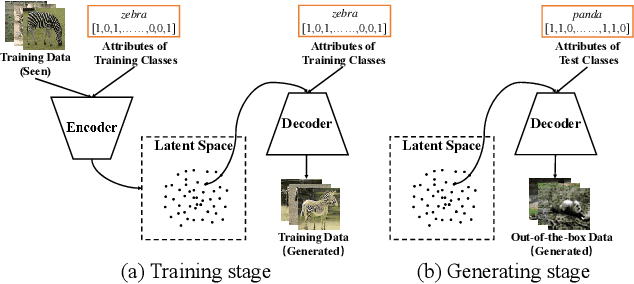

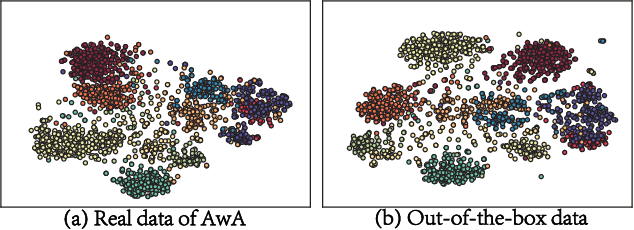

Zero-shot learning (ZSL) aims to recognize unseen objects (test classes) given some other seen objects (training classes) by sharing attribute information between different objects. Attributes are manually annotated for objects and are treated equally in recent ZSL tasks. However, inferior attributes with poor predictability or poor discriminability may have a negative impact on ZSL system performance. This paper first derives a generalization error bound for ZSL tasks. Our theoretical analysis shows that selecting a subset of key attributes can improve the generalization performance of the original ZSL model, which uses all the attributes. Unfortunately, previous attribute selection methods operate on the seen data, so their selected attributes generalize poorly to the unseen data, which is unavailable in the training stage for ZSL tasks. Inspired by learning from pseudo relevance feedback, this paper introduces out-of-the-box data, pseudo data generated by an attribute-guided generative model, to mimic the unseen data. We then present an iterative attribute selection (IAS) strategy that iteratively selects key attributes based on the out-of-the-box data. Since the distribution of the generated out-of-the-box data is similar to that of the test data, the key attributes selected by IAS generalize effectively to the test data. Extensive experiments demonstrate that IAS can significantly improve existing attribute-based ZSL methods and achieve state-of-the-art performance.
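The idea of selecting attributes by their performance on pseudo data can be illustrated with a minimal sketch. It is not the paper's IAS procedure: it assumes a plain greedy forward search and a nearest-prototype classifier, and the names `prototype_accuracy`, `greedy_attribute_selection`, `pred_attrs`, and `class_attrs` are hypothetical. Here `pred_attrs` stands for attribute predictions on generated (out-of-the-box) samples and `class_attrs` for the per-class attribute vectors of the unseen classes.

```python
import numpy as np


def prototype_accuracy(pred_attrs, labels, class_attrs, selected):
    """Nearest-prototype accuracy using only the `selected` attribute columns.

    Assumption: samples are classified by squared Euclidean distance between
    their predicted attributes and each class attribute vector.
    """
    a = pred_attrs[:, selected]    # (n_samples, k)
    p = class_attrs[:, selected]   # (n_classes, k)
    dists = ((a[:, None, :] - p[None, :, :]) ** 2).sum(axis=2)
    return float((dists.argmin(axis=1) == labels).mean())


def greedy_attribute_selection(pred_attrs, labels, class_attrs, max_attrs=None):
    """Greedy forward selection scored on pseudo data (illustrative only).

    Each iteration adds the attribute that most improves accuracy on the
    pseudo samples and stops when no candidate improves the current score.
    """
    n_attrs = class_attrs.shape[1]
    max_attrs = n_attrs if max_attrs is None else max_attrs
    selected, best = [], 0.0
    while len(selected) < max_attrs:
        candidates = [j for j in range(n_attrs) if j not in selected]
        scores = [
            prototype_accuracy(pred_attrs, labels, class_attrs, selected + [j])
            for j in candidates
        ]
        top = int(np.argmax(scores))
        if scores[top] <= best:
            break  # no remaining attribute helps on the pseudo data
        best = scores[top]
        selected.append(candidates[top])
    return selected, best
```

In this sketch, an attribute that is poorly predicted on the pseudo samples lowers the score when added, so the search leaves it out; this mirrors the abstract's claim that dropping inferior attributes can help, under the stated assumptions only.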
