Improving Deep Ensembles by Estimating Confusion Matrices

Mar 10, 2025

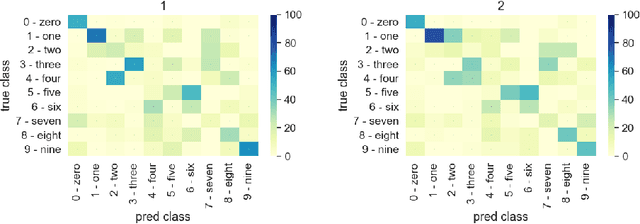

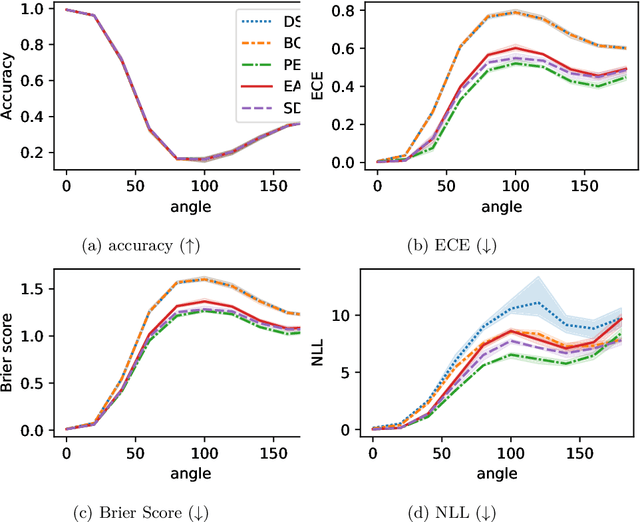

Ensembling in deep learning improves accuracy and calibration over single networks. The traditional aggregation approach, ensemble averaging, treats all individual networks equally by averaging their outputs. Inspired by crowdsourcing, we propose an aggregation method for deep ensembles called soft Dawid Skene, which estimates the confusion matrix of each ensemble member and weights the members according to their inferred performance. Unlike the hard labels commonly aggregated in crowdsourcing, soft Dawid Skene aggregates soft labels. In extensive experiments, we show empirically that soft Dawid Skene outperforms ensemble averaging in accuracy, calibration, and out-of-distribution detection.
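
To make the contrast between the two aggregation schemes concrete, the following is a minimal sketch, not the paper's implementation. It assumes the members' softmax outputs are stacked in an array of shape (members, samples, classes) and uses a simple EM-style update in which each member's soft label contributes an expected log-likelihood under a per-member confusion matrix. The function names, the `smoothing` parameter, and the exact update rules are illustrative assumptions; the paper's inference procedure may differ.

```python
import numpy as np


def ensemble_average(probs):
    """Baseline: average member outputs. probs has shape (M, N, K)."""
    return probs.mean(axis=0)


def soft_dawid_skene_sketch(probs, n_iter=20, smoothing=1e-2):
    """Illustrative EM-style aggregation of soft labels (an assumption,
    not the paper's exact algorithm).

    Returns the posterior over true classes, shape (N, K), and one
    row-normalized confusion matrix per member, shape (M, K, K).
    """
    # Initialize the posterior over true classes with the ensemble average.
    q = ensemble_average(probs)
    for _ in range(n_iter):
        # M-step: class prior and per-member confusion matrices.
        prior = q.mean(axis=0)
        # confusion[m, k, j]: soft count of member m outputting class j
        # when the (inferred) true class is k.
        confusion = np.einsum("nk,mnj->mkj", q, probs) + smoothing
        confusion /= confusion.sum(axis=2, keepdims=True)

        # E-step: score each candidate true class k by the expected
        # log-likelihood of every member's soft label under row k.
        log_q = np.log(prior + 1e-12) + np.einsum(
            "mnj,mkj->nk", probs, np.log(confusion)
        )
        log_q -= log_q.max(axis=1, keepdims=True)  # numerical stability
        q = np.exp(log_q)
        q /= q.sum(axis=1, keepdims=True)
    return q, confusion


# Hypothetical usage with random soft labels: 4 members, 50 samples, 3 classes.
rng = np.random.default_rng(0)
probs = rng.dirichlet(np.ones(3), size=(4, 50))
avg = ensemble_average(probs)
posterior, confusion = soft_dawid_skene_sketch(probs)
```

In this sketch, a member whose confusion matrix is close to the identity has sharply peaked rows, so its outputs shift the posterior more than those of a member with near-uniform rows. That is one way to realize weighting by inferred performance, whereas `ensemble_average` gives every member the same influence.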
