Improving Data Efficiency of Self-supervised Learning for Robotic Grasping


Mar 01, 2019

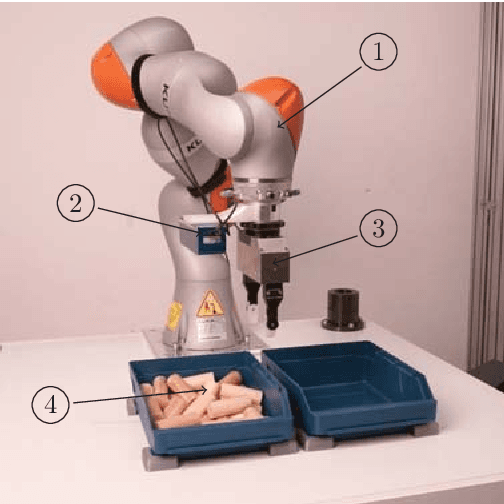

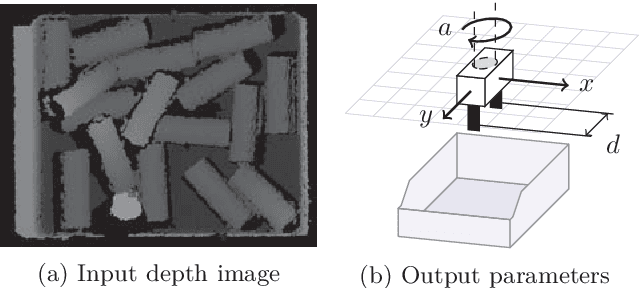

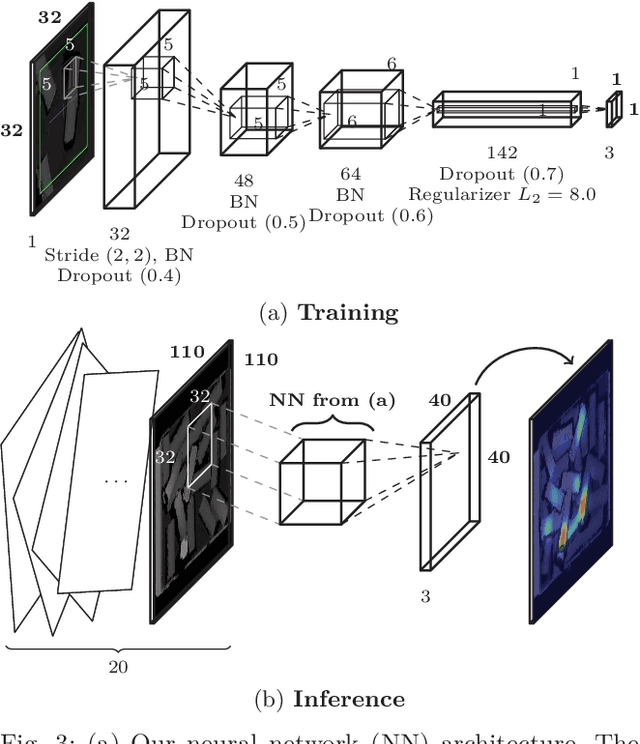

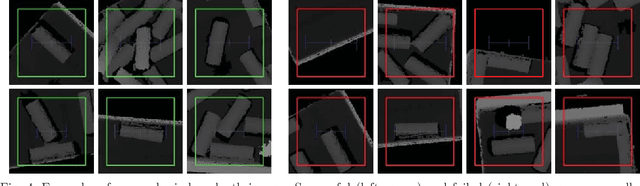

Given the task of learning robotic grasping solely from depth camera input and gripper force feedback, we derive a learning algorithm from an applied point of view that significantly reduces the amount of required training data. Major improvements in time and data efficiency are achieved in two ways. First, we exploit the geometric consistency between the undistorted depth images and the task space. Using a relatively small, fully-convolutional neural network, we predict grasp and gripper parameters, with great advantages in both training and inference performance. Second, motivated by the low success rate of random grasps (around 3%), we explore the grasp space systematically. The final system was trained with 23,000 grasp attempts in around 60 h, improving on current solutions by an order of magnitude. For typical bin picking scenarios, we measured a grasp success rate of 96.6%. Further experiments showed that the system is able to generalize and transfer knowledge to novel objects and environments.
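The abstract does not specify the network's output format, so the following is only a minimal sketch of why a fully-convolutional predictor on undistorted depth images is convenient at inference time. It assumes (1) the network outputs one estimated grasp-success probability per pixel and per discrete gripper rotation, and (2) the undistorted depth image behaves like an orthographic projection, so every pixel covers the same metric distance in the task-space plane. Under these assumptions, choosing a grasp reduces to an argmax followed by a linear pixel-to-task-space conversion. The names `select_grasp`, `pixel_size`, and `image_center`, as well as the array layout, are hypothetical and not taken from the paper; further gripper parameters (such as opening width) are omitted.

```python
import numpy as np


def select_grasp(reward_map, angles, pixel_size, image_center):
    """Pick the highest-scoring grasp from a dense prediction map (sketch).

    reward_map: array of shape (R, H, W); hypothetical layout with one
        estimated grasp-success probability per rotation index and pixel.
    angles: sequence of R gripper rotations in radians, one per rotation index.
    pixel_size: metric edge length of one pixel in metres. Assumes an
        orthographic, undistorted depth image, so this scale is constant.
    image_center: (row, col) pixel assumed to map to the task-space origin,
        with image axes assumed to be aligned with the task-space x/y axes.
    """
    # Flat argmax over all rotations and pixels, unravelled to (r, row, col).
    r, row, col = np.unravel_index(np.argmax(reward_map), reward_map.shape)
    # Constant pixel scale: pixel offsets map linearly to planar offsets.
    x = float((col - image_center[1]) * pixel_size)
    y = float((row - image_center[0]) * pixel_size)
    return x, y, float(angles[r]), float(reward_map[r, row, col])


if __name__ == "__main__":
    # Toy map: 4 rotations on a 64x64 image, with one clearly best grasp.
    reward = np.zeros((4, 64, 64))
    reward[2, 40, 10] = 0.95
    angles = np.linspace(-np.pi / 2, np.pi / 2, 4, endpoint=False)
    print(select_grasp(reward, angles, pixel_size=0.002, image_center=(32, 32)))
```

Because a single forward pass scores every position and rotation at once, the network does not have to be evaluated separately for each candidate grasp; this is one plausible reading of the inference advantage the abstract mentions, not a description of the authors' implementation.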
