Implicit Contextual Integrity in Online Social Networks

Jul 06, 2015

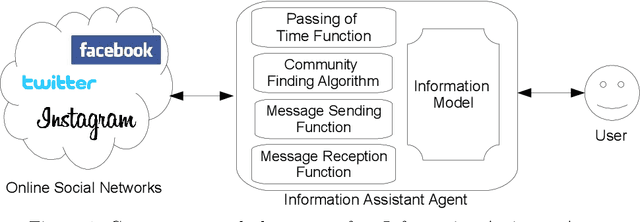

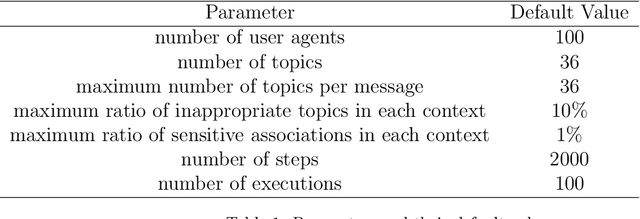

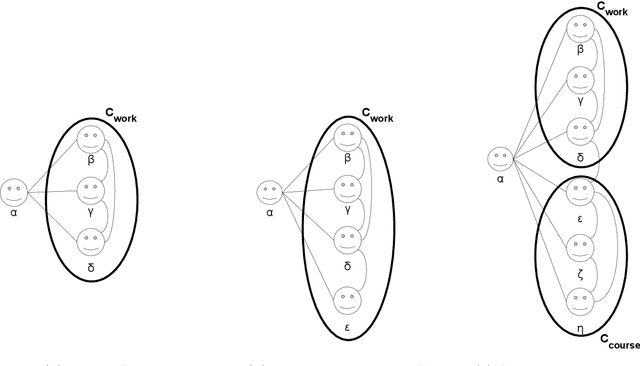

Many real incidents demonstrate that users of Online Social Networks need mechanisms that help them manage their interactions, both by increasing their awareness of the different contexts that coexist in Online Social Networks and by preventing them from exchanging inappropriate information in those contexts or disseminating sensitive information from some contexts to others. Contextual Integrity is a privacy theory that conceptualises the appropriateness of information sharing based on the contexts in which this information is to be shared. Computational models of Contextual Integrity assume the existence of well-defined contexts, in which individuals enact pre-defined roles and information sharing is governed by an explicit set of norms. However, contexts in Online Social Networks are known to be implicit, unknown a priori and ever changing; users' relationships are constantly evolving; and the information sharing norms are implicit. This makes current Contextual Integrity models unsuitable for Online Social Networks. In this paper, we propose the first computational model of Implicit Contextual Integrity, presenting an information model and an Information Assistant Agent that uses the information model to learn implicit contexts, relationships and information sharing norms in order to help users avoid inappropriate information exchanges and undesired information disseminations. Through an experimental evaluation, we validate the properties of Information Assistant Agents, which are shown to: infer the information sharing norms even if only a small proportion of the users follow the norms and in the presence of malicious users; help reduce the exchange of inappropriate information and the dissemination of sensitive information with only a partial view of the system and of the information received and sent by their users; and minimise the burden on users by limiting unnecessary alerts.
