Implications of Human Irrationality for Reinforcement Learning

Paper and Code

Jun 07, 2020

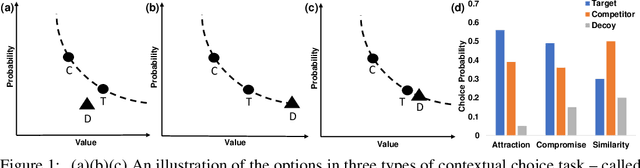

Recent work in the behavioural sciences has begun to overturn the long-held belief that human decision making is irrational, suboptimal and subject to biases. This turn to the rational suggests that human decision making may be a better source of ideas for constraining how machine learning problems are defined than would otherwise be the case. One promising idea concerns human decision making that is dependent on apparently irrelevant aspects of the choice context. Previous work has shown that by taking into account choice context and making relational observations, people can maximize expected value. Other work has shown that Partially observable Markov decision processes (POMDPs) are a useful way to formulate human-like decision problems. Here, we propose a novel POMDP model for contextual choice tasks and show that, despite the apparent irrationalities, a reinforcement learner can take advantage of the way that humans make decisions. We suggest that human irrationalities may offer a productive source of inspiration for improving the design of AI architectures and machine learning methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge