Identifying Semantically Difficult Samples to Improve Text Classification

Paper and Code

Feb 13, 2023

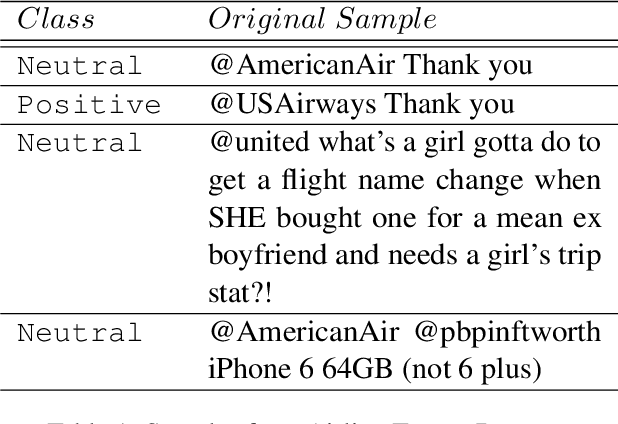

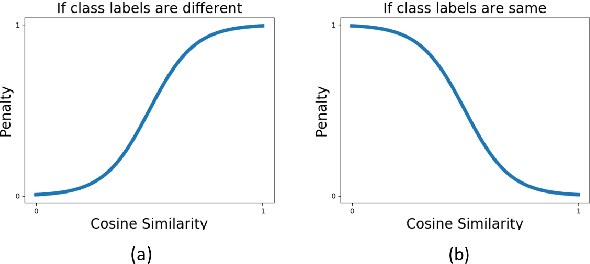

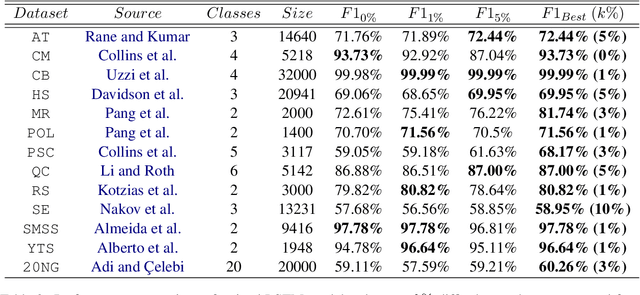

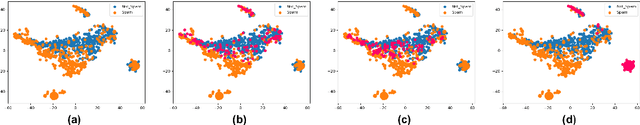

In this paper, we investigate the effect of addressing difficult samples from a given text dataset on the downstream text classification task. We define difficult samples as being non-obvious cases for text classification by analysing them in the semantic embedding space; specifically - (i) semantically similar samples that belong to different classes and (ii) semantically dissimilar samples that belong to the same class. We propose a penalty function to measure the overall difficulty score of every sample in the dataset. We conduct exhaustive experiments on 13 standard datasets to show a consistent improvement of up to 9% and discuss qualitative results to show effectiveness of our approach in identifying difficult samples for a text classification model.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge