Identifying noisy labels with a transductive semi-supervised leave-one-out filter


Sep 24, 2020

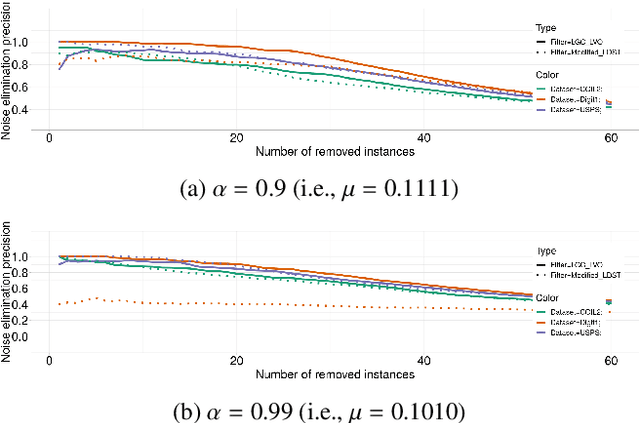

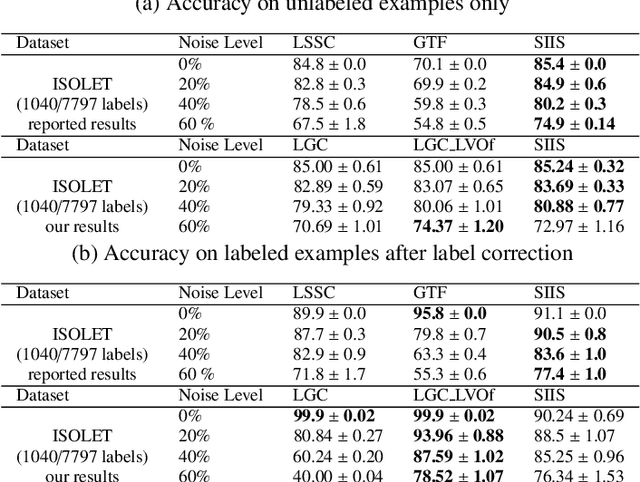

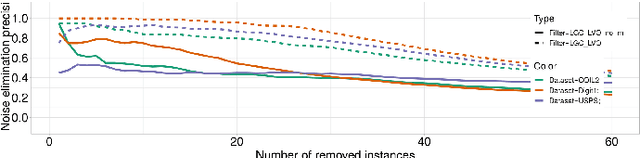

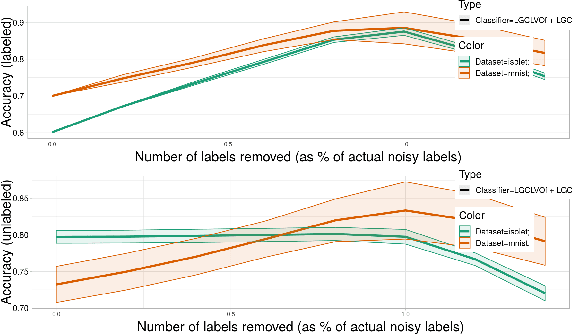

Obtaining data with meaningful labels is often costly and error-prone. In this situation, semi-supervised learning (SSL) approaches are attractive, as they leverage assumptions about the unlabeled data to make up for the limited number of labels. However, in real-world situations, we cannot assume that the labeling process is infallible, and the accuracy of many SSL classifiers decreases significantly in the presence of label noise. In this work, we introduce LGC_LVOF, a leave-one-out filtering approach based on the Local and Global Consistency (LGC) algorithm. Our method aims to detect and remove wrong labels, and can thus be used as a preprocessing step for any SSL classifier. Given the propagation matrix, detecting noisy labels takes $O(cl)$ per step, where $c$ is the number of classes and $l$ is the number of labeled instances. Moreover, one does not need to compute the whole propagation matrix, but only an $l \times l$ submatrix corresponding to interactions between labeled instances. As a result, our approach is best suited to datasets with a large amount of unlabeled data but few labels. Results are provided for a number of datasets, including MNIST and ISOLET. LGC_LVOF appears to be as precise as, or more precise than, the adapted gradient-based filter. We show that the best-case accuracy obtained by embedding LGC_LVOF into LGC is comparable to the best-case accuracy of $\ell_1$-based classifiers designed to be robust to label noise. We also provide a heuristic for choosing the number of instances to remove.
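
The leave-one-out idea can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes the standard LGC propagation matrix $P = (I - \alpha S)^{-1}$ with a Gaussian affinity, computes the full inverse for simplicity rather than only the $l \times l$ labeled block, and runs a single scoring pass instead of an iterative removal procedure. The function names, `alpha`, `sigma`, and the suspicion score are all hypothetical choices made for illustration.

```python
import numpy as np

def lgc_propagation_matrix(X, alpha=0.9, sigma=1.0):
    """Assumed LGC propagation matrix P = (I - alpha * S)^-1 (full inverse)."""
    # Gaussian affinity between all pairs of points, with a zero diagonal.
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    # Symmetric normalization S = D^-1/2 W D^-1/2.
    d = W.sum(axis=1)
    S = W / np.sqrt(np.outer(d, d))
    return np.linalg.inv(np.eye(len(X)) - alpha * S)

def loo_suspicion(P_ll, Y_l):
    """Score each labeled instance by how strongly the other labels contradict it.

    P_ll: (l, l) block of the propagation matrix for the labeled instances.
    Y_l:  (l, c) one-hot matrix of the given (possibly noisy) labels, c >= 2.
    Returns an array of length l; larger values are more suspicious.
    """
    l = len(Y_l)
    F = P_ll @ Y_l
    # Leave-one-out: subtract each instance's contribution to its own row.
    # Once F is known, this correction costs O(c) per instance, O(cl) in total.
    F_loo = F - np.diag(P_ll)[:, None] * Y_l
    given = Y_l.argmax(axis=1)
    own = F_loo[np.arange(l), given]
    masked = F_loo.copy()
    masked[np.arange(l), given] = -np.inf
    best_other = masked.max(axis=1)
    return best_other - own
```

A label would be flagged when its score is high, for example by removing the top-scoring instances; how many to remove is exactly the choice the heuristic mentioned in the abstract addresses, and this sketch does not attempt to reproduce it.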
