i-DenseNets


Oct 23, 2020

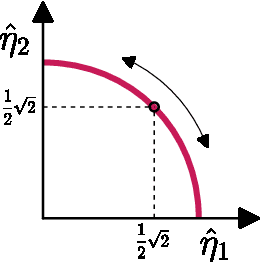

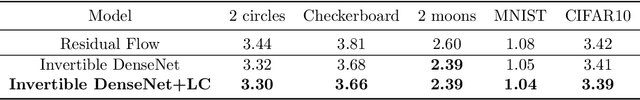

We introduce Invertible Dense Networks (i-DenseNets), a more parameter-efficient alternative to Residual Flows. The method relies on an analysis of the Lipschitz continuity of the concatenation in DenseNets, and we enforce the invertibility of the network by satisfying the Lipschitz constraint. Additionally, we extend this method by proposing a learnable concatenation, which not only improves model performance but also indicates the importance of the concatenated representation. We evaluate i-DenseNets and Residual Flows on toy, MNIST, and CIFAR10 data. Both i-DenseNet variants outperform Residual Flows in terms of negative log-likelihood on all considered datasets under an equal parameter budget.
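To make the Lipschitz argument concrete, the following is a minimal sketch and not the paper's implementation. It assumes a weighted concatenation h(x) = [eta1 * x, eta2 * g(x)], for which the general bound Lip(h) <= sqrt(eta1^2 + eta2^2 * Lip(g)^2) holds in the Euclidean norm. The toy map `g`, the weights `eta1` and `eta2`, and all function names are hypothetical and chosen for illustration only; how the learnable concatenation is actually parameterized is not stated in this abstract.

```python
import math
import random

LIP_G = 0.9  # assumed Lipschitz bound of the toy map g below


def norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(c * c for c in v))


def g(x):
    """Toy 2-D map (a stand-in for a learned layer, not the paper's network).

    A rotation scaled by 0.9 followed by tanh. The linear part has spectral
    norm 0.9 and tanh is 1-Lipschitz, so Lip(g) <= 0.9.
    """
    c, s = math.cos(0.7), math.sin(0.7)
    z = [0.9 * (c * x[0] - s * x[1]), 0.9 * (s * x[0] + c * x[1])]
    return [math.tanh(v) for v in z]


def weighted_concat(x, eta1, eta2):
    """h(x) = [eta1 * x, eta2 * g(x)], a hypothetical weighted concatenation."""
    return [eta1 * v for v in x] + [eta2 * v for v in g(x)]


def lipschitz_bound(eta1, eta2, lip_g=LIP_G):
    """Upper bound on Lip(h); the identity branch has Lipschitz constant 1."""
    return math.sqrt(eta1 ** 2 + (eta2 * lip_g) ** 2)


def worst_observed_ratio(eta1, eta2, trials=2000, seed=0):
    """Largest ||h(x) - h(y)|| / ||x - y|| seen over random input pairs."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = [rng.uniform(-2, 2), rng.uniform(-2, 2)]
        y = [rng.uniform(-2, 2), rng.uniform(-2, 2)]
        dx = norm([a - b for a, b in zip(x, y)])
        if dx == 0.0:
            continue  # skip identical points to avoid division by zero
        hx = weighted_concat(x, eta1, eta2)
        hy = weighted_concat(y, eta1, eta2)
        worst = max(worst, norm([a - b for a, b in zip(hx, hy)]) / dx)
    return worst
```

With `eta1 = eta2 = 1` (plain concatenation) the bound exceeds 1 even though `g` itself is contractive, whereas weights satisfying `eta1**2 + eta2**2 == 1`, for example `math.cos(t)` and `math.sin(t)`, keep the bound at or below 1. This is one way, under the assumptions above, to see why the concatenation needs its own analysis before a Lipschitz constraint can be enforced.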
