Hyperbolic Convolutional Neural Networks

Aug 29, 2023

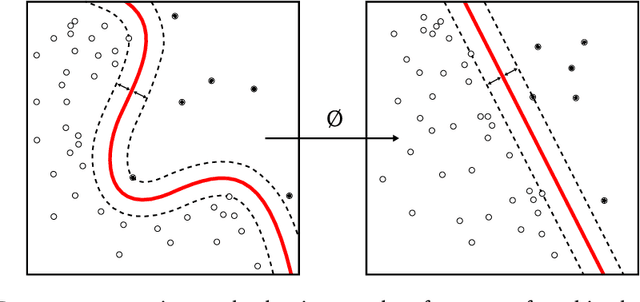

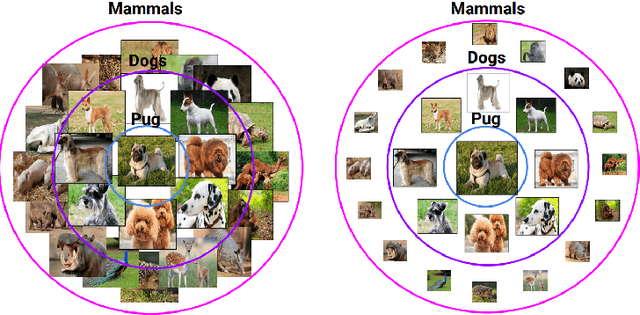

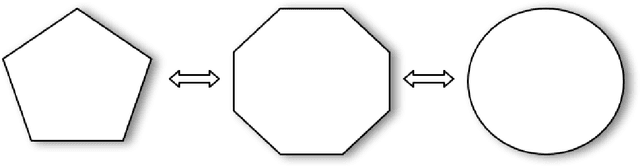

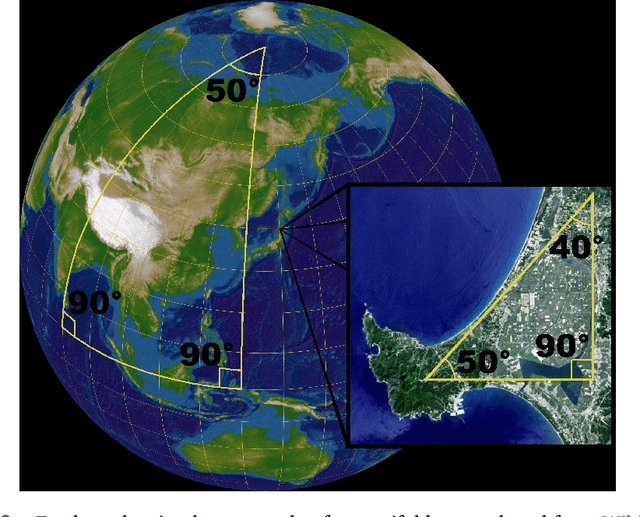

Deep learning is largely responsible for the surge of interest in artificial intelligence over the last decade. So far, deep learning researchers have been particularly successful in image processing, where Convolutional Neural Networks (CNNs) are used. Although they excel at image classification, CNNs are naive in that they impose no inductive bias on the embedding space for images. Graph Convolutional Neural Networks, another type of convolutional network, exhibit similar flaws. Embedding data in a non-Euclidean space, however, might result in more robust and explainable models. One example of such a space is hyperbolic space. Hyperbolic spaces are particularly useful because they can fit more data in a low-dimensional space and because of their tree-like properties. Previous papers have exploited these properties and indicated that they are beneficial for building hierarchical embeddings with shallow models and, more recently, with MLPs and RNNs. However, no paper has yet suggested a general approach to using Hyperbolic Convolutional Neural Networks for structured data processing, although such data are the most commonly used. Therefore, the goal of this work is to devise a general recipe for building Hyperbolic Convolutional Neural Networks. We hypothesize that the ability of hyperbolic space to capture hierarchy in the data would lead to better performance. This ability should be particularly useful when the data has a tree-like structure. Since this is the case for many existing datasets \citep{wordnet, imagenet, fb15k}, we argue that such a model would be advantageous both for applications and for future research.