Human Pose Estimation from Sparse Inertial Measurements through Recurrent Graph Convolution


Jul 23, 2021

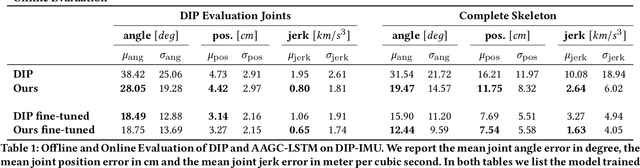

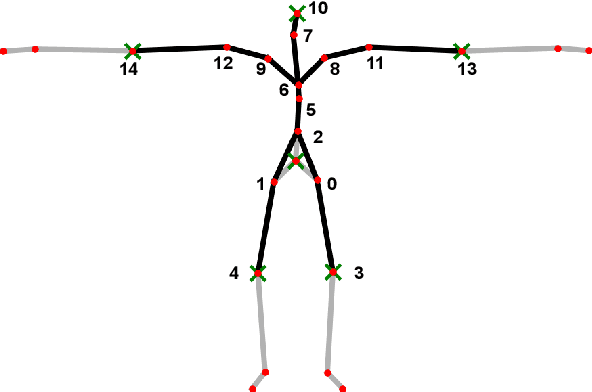

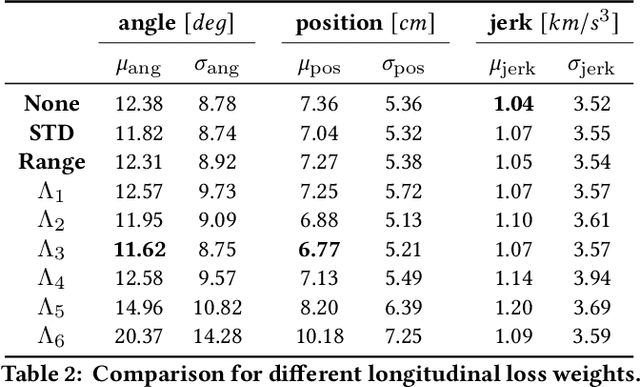

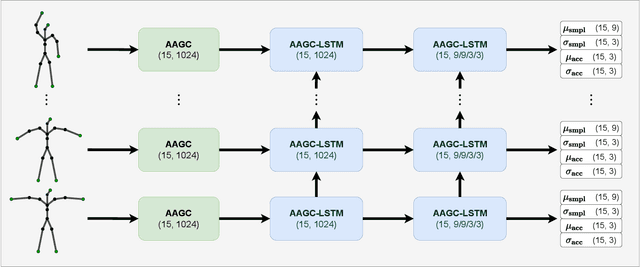

We propose the adjacency adaptive graph convolutional long short-term memory network (AAGC-LSTM) for human pose estimation from sparse inertial measurements obtained from only six measurement units. The AAGC-LSTM combines spatial and temporal dependencies in a single network operation. This is made possible by equipping graph convolutions with adjacency adaptivity, which also allows the network to learn unknown dependencies between the joints of the human body. To further boost accuracy, we propose longitudinal loss weighting, which accounts for natural movement patterns, as well as body-aware contralateral data augmentation. By combining these contributions, we are able to exploit the inherent graph structure of the human body and thus outperform the state of the art for human pose estimation from sparse inertial measurements.
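To make the idea of adjacency adaptivity more concrete, the following is a minimal sketch of a single graph convolution whose adjacency matrix can be adjusted by a learnable term. It is not the AAGC-LSTM itself and not necessarily the formulation used in the paper: the additive form `adjacency + offset`, the function names `matmul` and `adaptive_graph_conv`, and the toy three-joint skeleton are all assumptions made for illustration, and the recurrent (LSTM) part is omitted entirely.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def adaptive_graph_conv(features, adjacency, offset, weight):
    """One graph convolution with an adjustable adjacency (illustrative only).

    features:  J x C_in  per-joint input features
    adjacency: J x J     fixed skeleton connectivity (with self-loops)
    offset:    J x J     hypothetical learnable correction to the adjacency
    weight:    C_in x C_out feature transform
    """
    # Assumption: adaptivity is modeled as a simple additive offset.
    adapted = [
        [a + o for a, o in zip(a_row, o_row)]
        for a_row, o_row in zip(adjacency, offset)
    ]
    # Aggregate neighbor features, then transform them.
    return matmul(matmul(adapted, features), weight)


# Toy chain of three joints: 0 - 1 - 2, with self-loops.
adjacency = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
weight = [[1.0], [1.0]]

# With a zero offset the layer only uses the known skeleton edges.
offset_zero = [[0.0] * 3 for _ in range(3)]
out_fixed = adaptive_graph_conv(features, adjacency, offset_zero, weight)

# A nonzero offset links joints 0 and 2, which are not connected in the skeleton.
offset_learned = [[0.0, 0.0, 0.5], [0.0, 0.0, 0.0], [0.5, 0.0, 0.0]]
out_adapted = adaptive_graph_conv(features, adjacency, offset_learned, weight)
```

In this toy setup, the middle joint produces the same output in both calls, while the two end joints change once the offset lets them exchange information. In a trained network the offset would be a parameter updated by gradient descent rather than a hand-set matrix.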
