How accurate are the time delay estimates in gravitational lensing?

May 01, 2006

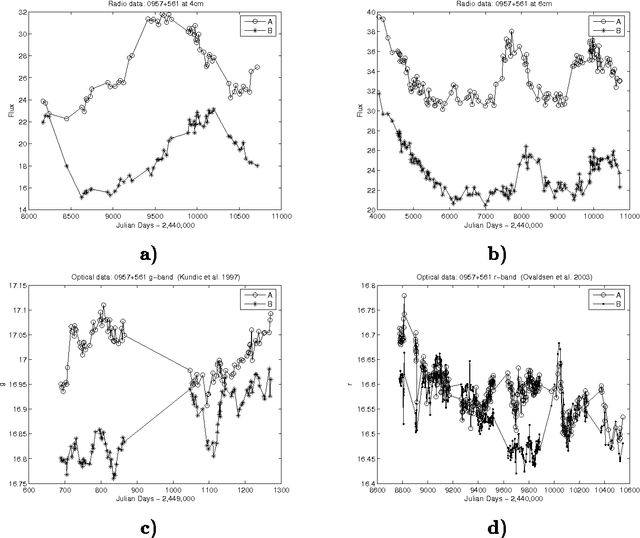

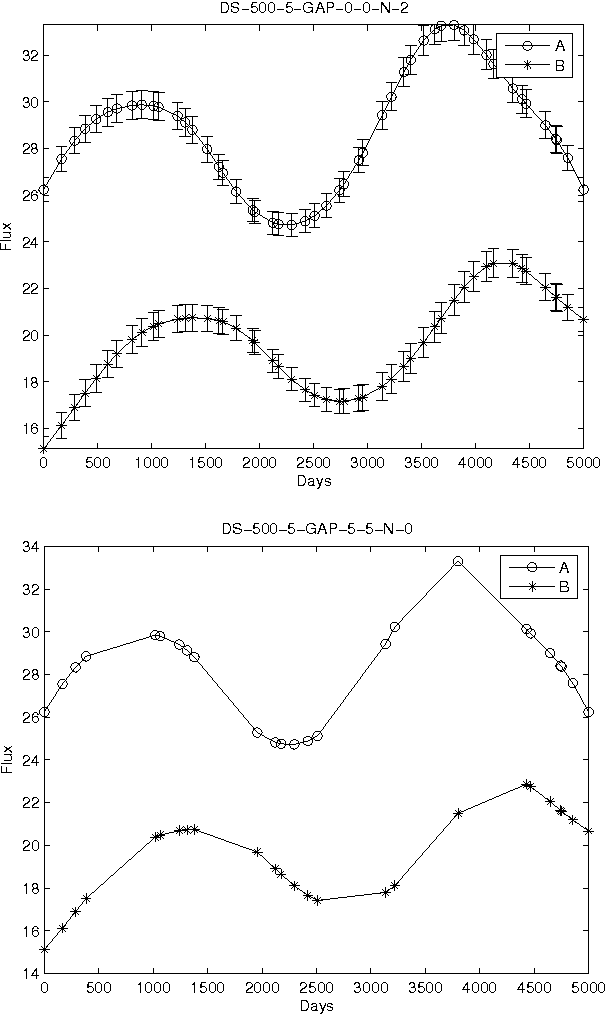

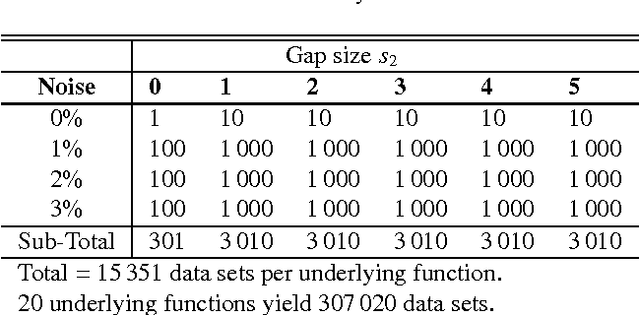

We present a novel approach to estimating the time delay between the light curves of multiple images in a gravitationally lensed system, based on kernel methods from machine learning. We run experiments on artificially generated, irregularly sampled data sets to study the effect of different noise levels and of gaps of different sizes in the monitoring data. We compare our method with other popular time delay estimators and conclude, from these experiments with artificial data, that it is the least vulnerable to missing data and irregular sampling, within reasonable bounds of Gaussian noise. We then apply the method to the two images of quasar Q0957+561, using radio monitoring data at 4 cm and 6 cm. When only the observations at epochs common to both wavelengths are used, the two wavelengths give consistent time delay estimates, which can be combined to yield $408 \pm 12$ days. The full 6 cm data set, which covers a longer monitoring period, yields a value that is 10% larger, but this can be attributed to differences in sampling and missing data.
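To make the kind of experiment described above concrete, here is a minimal sketch of delay estimation on artificial, irregularly sampled light curves. It is not the method of the paper: it swaps in a plain Nadaraya-Watson kernel smoother with a grid search over trial delays. The source signal, the noise level, the kernel width, the delay of 400 days, and the names `source_signal`, `kernel_smooth`, and `estimate_delay` are all assumptions chosen for illustration, and both images are assumed to have the same magnification.

```python
import math
import random


def source_signal(t):
    """Hypothetical intrinsic quasar variability (an assumption, not real data)."""
    return math.sin(2 * math.pi * t / 300) + 0.5 * math.sin(2 * math.pi * t / 170)


def kernel_smooth(t_train, y_train, t_eval, width):
    """Nadaraya-Watson estimate at one time t_eval using a Gaussian kernel."""
    num = den = 0.0
    for t, y in zip(t_train, y_train):
        w = math.exp(-0.5 * ((t_eval - t) / width) ** 2)
        num += w * y
        den += w
    return num / (den + 1e-12)  # small constant guards against division by zero


def estimate_delay(t_a, y_a, t_b, y_b, trial_delays, width):
    """Return the trial delay that best aligns image B with smoothed image A."""
    lo, hi = min(t_a), max(t_a)
    best_delay, best_cost = None, float("inf")
    for delay in trial_delays:
        residuals = []
        for t, y in zip(t_b, y_b):
            t_shift = t - delay  # undo the trial delay
            if lo <= t_shift <= hi:  # compare only where image A was monitored
                pred = kernel_smooth(t_a, y_a, t_shift, width)
                residuals.append((y - pred) ** 2)
        cost = sum(residuals) / len(residuals)  # mean squared mismatch
        if cost < best_cost:
            best_delay, best_cost = delay, cost
    return best_delay


# Artificial data: two images observed at different, irregular epochs.
rng = random.Random(0)
true_delay = 400.0  # days; arbitrary value for the simulation
noise_sigma = 0.05  # Gaussian noise level (assumed)

t_a = sorted(rng.uniform(0, 1500) for _ in range(200))
t_b = sorted(rng.uniform(0, 1500) for _ in range(200))
y_a = [source_signal(t) + rng.gauss(0, noise_sigma) for t in t_a]
y_b = [source_signal(t - true_delay) + rng.gauss(0, noise_sigma) for t in t_b]

trial_delays = [float(d) for d in range(300, 501, 4)]
estimated = estimate_delay(t_a, y_a, t_b, y_b, trial_delays, width=10.0)
print(f"true delay: {true_delay:.0f} days, estimated: {estimated:.0f} days")
```

Raising `noise_sigma` or deleting a block of epochs from `t_a` and `y_a` would mimic the noise and gap experiments the abstract mentions. A real analysis would also need to fit the flux ratio between the two images, which this sketch ignores.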
