High-dimensional Ordinary Least-squares Projection for Screening Variables

Jun 05, 2015

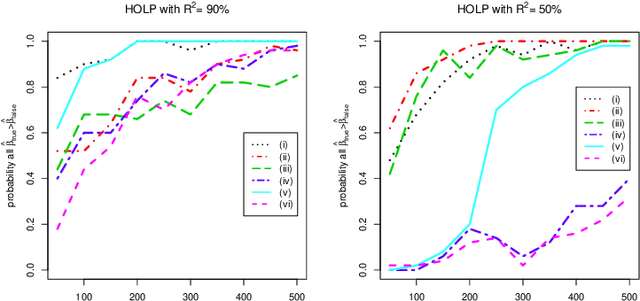

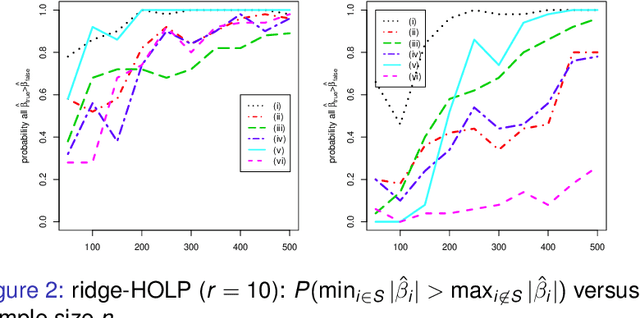

Variable selection is a challenging issue in statistical applications when the number of predictors $p$ far exceeds the number of observations $n$. In this ultra-high dimensional setting, the sure independence screening (SIS) procedure was introduced to significantly reduce the dimensionality while preserving the true model with overwhelming probability, before a refined second-stage analysis. However, this sure screening property relies strongly on the assumption that the important variables in the model have large marginal correlations with the response, which rarely holds in practice. To overcome this, we propose a novel and simple screening technique called the high-dimensional ordinary least-squares projection (HOLP). We show that HOLP possesses the sure screening property, gives consistent variable selection without the strong correlation assumption, and has low computational complexity. A ridge-type HOLP procedure is also discussed. Simulation studies show that HOLP performs competitively compared with many marginal-correlation-based methods. An application to a mammalian eye disease dataset illustrates the attractiveness of HOLP.
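The abstract does not write out the estimator. In the HOLP paper it is $\hat{\beta} = X^{\top}(XX^{\top})^{-1}y$, with the ridge-type variant $\hat{\beta}(r) = X^{\top}(XX^{\top} + rI_n)^{-1}y$, and screening keeps the $d$ predictors with the largest $|\hat{\beta}_j|$. The following is a minimal sketch of that idea, not the authors' code. The function name `holp_screen`, its parameters `d` and `ridge`, and the choice to skip centering and standardization are assumptions made for illustration. It also assumes $n < p$ and that $X$ has full row rank when `ridge` is zero.

```python
import numpy as np


def holp_screen(X, y, d, ridge=0.0):
    """Rank predictors by |X^T (X X^T + ridge * I)^{-1} y| and keep the top d.

    Hypothetical helper for illustration only. Assumes X has shape (n, p)
    with n < p and, when ridge == 0, full row rank so that X X^T is
    invertible. No centering or scaling is applied here.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = X.shape[0]

    # n x n Gram matrix; far cheaper to factor than the p x p matrix X^T X.
    gram = X @ X.T + ridge * np.eye(n)

    # Solve the n x n linear system instead of forming an explicit inverse.
    beta = X.T @ np.linalg.solve(gram, y)

    # Indices of the d largest coefficients in absolute value.
    keep = np.argsort(-np.abs(beta))[:d]
    return keep, beta
```

Only an $n \times n$ system is solved, so the cost is dominated by forming $XX^{\top}$, roughly $O(n^2 p)$ operations, which is in line with the low computational complexity the abstract mentions. With `ridge=0.0` the returned coefficient vector reproduces the response exactly, that is, `X @ beta` equals `y` up to rounding, because it is the minimum-norm solution of $X\beta = y$.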