Hierarchical Reinforcement Learning with Adversarially Guided Subgoals

Jan 31, 2022

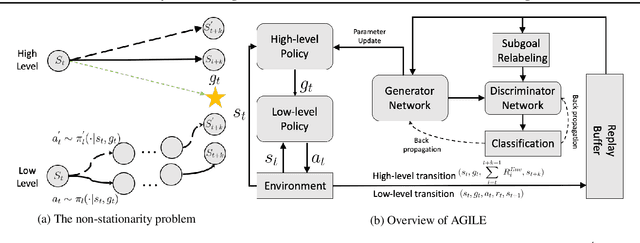

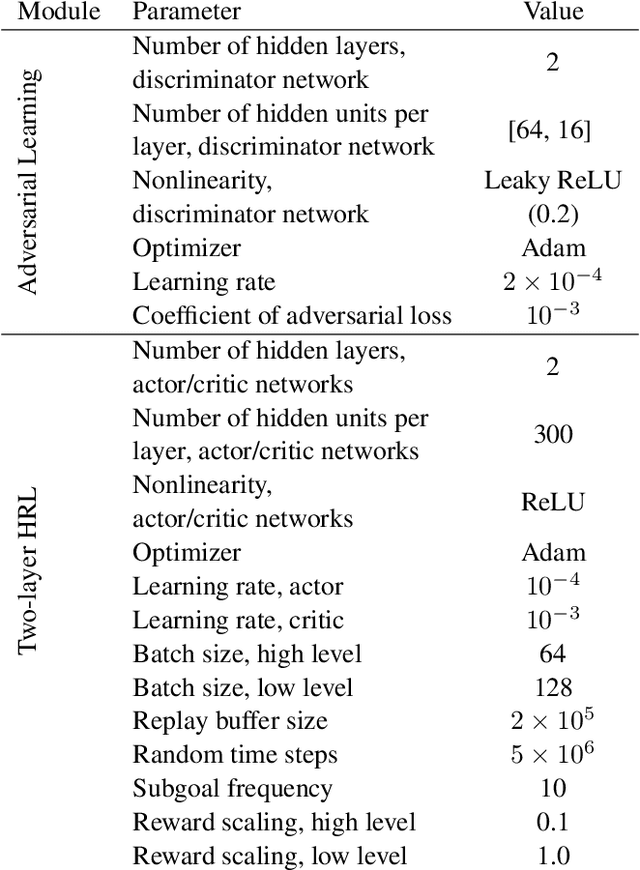

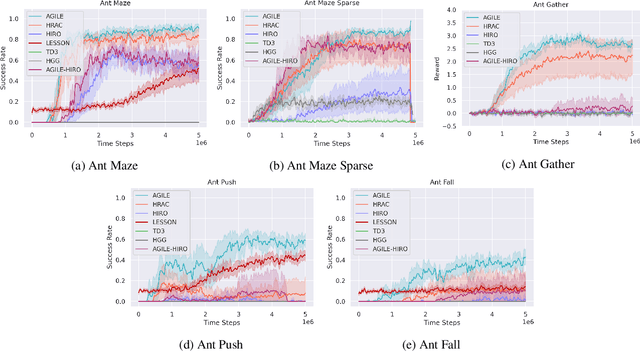

Hierarchical reinforcement learning (HRL) proposes to solve difficult tasks by performing decision-making and control at successively higher levels of temporal abstraction. However, off-policy HRL often suffers from a non-stationary high-level policy, because the low-level policy it relies on is constantly changing. In this paper, we propose a novel HRL approach that mitigates this non-stationarity by adversarially guiding the high-level policy to generate subgoals compatible with the current instantiation of the low-level policy. In practice, the adversarial learning is implemented by training, concurrently with the high-level policy, a simple discriminator network that determines the compatibility level of subgoals. Experiments with state-of-the-art algorithms show that our approach improves both the learning efficiency and the overall performance of HRL in various challenging continuous control tasks.
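
To make the discriminator idea concrete, here is a minimal NumPy sketch, not the paper's implementation. It rests on several assumptions that the abstract does not state: the discriminator is a plain logistic regression over concatenated (state, subgoal) pairs, "compatible" subgoals are taken to be ones the low-level policy actually reached, and the high-level policy would be regularized with a non-saturating penalty `-log D(s, g)`. The names `SubgoalDiscriminator` and `adversarial_penalty` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class SubgoalDiscriminator:
    """Logistic-regression discriminator D(s, g) in (0, 1).

    Assumption: a value near 1 means the subgoal g looks like one the
    current low-level policy can reach from state s.
    """

    def __init__(self, state_dim, goal_dim, lr=0.1, seed=0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(scale=0.01, size=state_dim + goal_dim)
        self.b = 0.0
        self.lr = lr

    def predict(self, states, subgoals):
        x = np.concatenate([states, subgoals], axis=1)
        return sigmoid(x @ self.w + self.b)

    def update(self, states, compatible_goals, generated_goals, eps=1e-8):
        """One gradient step on binary cross-entropy; returns the loss
        measured before the step."""
        x = np.concatenate([
            np.concatenate([states, compatible_goals], axis=1),  # label 1
            np.concatenate([states, generated_goals], axis=1),   # label 0
        ], axis=0)
        y = np.concatenate([np.ones(len(states)), np.zeros(len(states))])
        p = sigmoid(x @ self.w + self.b)
        loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        self.w -= self.lr * (x.T @ (p - y)) / len(y)
        self.b -= self.lr * np.mean(p - y)
        return loss


def adversarial_penalty(disc, states, generated_goals, eps=1e-8):
    """Hypothetical extra loss term for the high-level policy: small when
    the discriminator judges its subgoals compatible."""
    return -np.mean(np.log(disc.predict(states, generated_goals) + eps))


# Toy data (purely synthetic): reachable subgoals lie near the state,
# while the untrained high-level policy proposes distant ones.
rng = np.random.default_rng(1)
states = rng.normal(size=(256, 2))
reached = states + 0.1 * rng.normal(size=(256, 2))
proposed = states + 3.0

disc = SubgoalDiscriminator(state_dim=2, goal_dim=2)
losses = [disc.update(states, reached, proposed) for _ in range(300)]
print("discriminator loss:", losses[0], "->", losses[-1])
print("penalty on proposed subgoals:", adversarial_penalty(disc, states, proposed))
```

In a full HRL agent the penalty would presumably be added, with some weight, to the high-level actor loss, and the discriminator would be retrained as the low-level policy changes; both details are left out of this sketch.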
