Hierarchical Learning Framework for Whole-Body Model Predictive Control of a Real Humanoid Robot


Sep 13, 2024

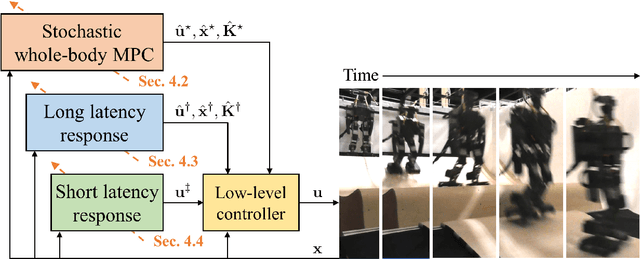

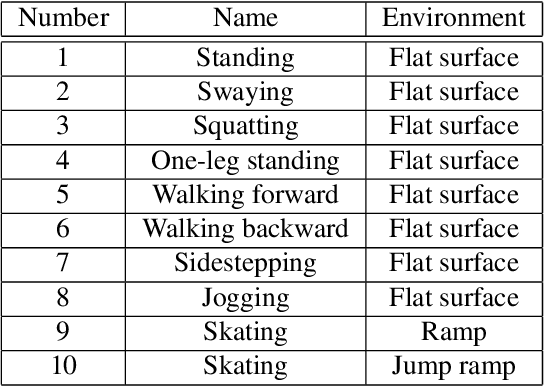

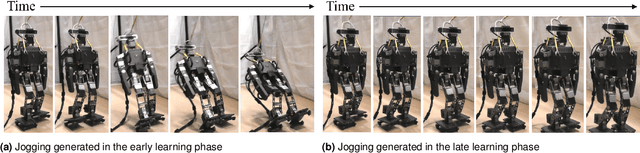

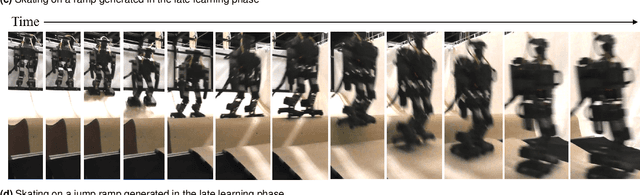

The simulation-to-real gap and the high computational burden of whole-body Model Predictive Control (whole-body MPC) remain obstacles to generating a wide variety of movements on real humanoid robots. This paper presents a biologically inspired hierarchical learning framework as a potential solution to both problems. The proposed three-layer framework enables the generation of multi-contact, dynamic behaviours even when the whole-body MPC policy is updated at low frequency. The upper layer learns an accurate dynamics model, with the objective of reducing the discrepancy between the analytical model and the real system, so that whole-body MPC can compute effective control policies. The middle and lower layers then learn additional policies that generate high-frequency control inputs. To learn the dynamics model in the upper layer, an augmented model using a deep residual network is trained by model-based reinforcement learning with stochastic whole-body MPC. The proposed framework was evaluated in 10 distinct motion learning scenarios, including jogging on a flat surface and skating on curved surfaces. The results demonstrate that a wide variety of motions can be successfully generated on a real humanoid robot using whole-body MPC through learning with the proposed framework.
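The idea of an augmented dynamics model can be illustrated with a toy example: a nominal analytical model plus a learned correction fitted to the gap between predicted and observed next states. The sketch below is not the paper's method. It assumes a hypothetical one-dimensional point mass, substitutes a linear least-squares fit for the deep residual network, and omits the MPC and reinforcement learning loop entirely. All function names and parameters are illustrative.

```python
import numpy as np

def analytical_step(x, u, dt=0.01):
    # Hypothetical nominal model: unit point mass, x = [position, velocity].
    pos, vel = x
    return np.array([pos + dt * vel, vel + dt * u])

def real_step(x, u, dt=0.01, damping=0.5):
    # Stand-in for the real system: the nominal model plus viscous damping
    # that the analytical model does not capture.
    pos, vel = x
    return np.array([pos + dt * vel, vel + dt * (u - damping * vel)])

def features(x, u):
    # Linear features with a bias term (assumption: a linear residual suffices here).
    return np.array([x[0], x[1], u, 1.0])

def fit_residual(X, U, X_next, ridge=1e-8):
    # Fit weights W so that features(x, u) @ W approximates
    # (observed next state) - (analytical prediction).
    Phi = np.stack([features(x, u) for x, u in zip(X, U)])
    nominal = np.stack([analytical_step(x, u) for x, u in zip(X, U)])
    target = X_next - nominal
    A = Phi.T @ Phi + ridge * np.eye(Phi.shape[1])
    return np.linalg.solve(A, Phi.T @ target)

def augmented_step(x, u, W):
    # Augmented model: analytical prediction plus learned residual correction.
    return analytical_step(x, u) + features(x, u) @ W

# Collect transitions from the stand-in "real" system and fit the residual.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
U = rng.uniform(-1.0, 1.0, size=200)
X_next = np.stack([real_step(x, u) for x, u in zip(X, U)])
W = fit_residual(X, U, X_next)

# Compare one-step prediction error with and without the residual term.
x0, u0 = np.array([0.2, -0.7]), 0.3
nominal_error = np.linalg.norm(real_step(x0, u0) - analytical_step(x0, u0))
augmented_error = np.linalg.norm(real_step(x0, u0) - augmented_step(x0, u0, W))
```

In this toy setting the unmodelled damping is exactly linear in the state, so the fitted correction removes almost all of the one-step error. A real humanoid would need a far more expressive residual, which is presumably why the paper uses a deep network.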
