Heuristic Stopping Rules For Technology-Assisted Review


Jun 18, 2021

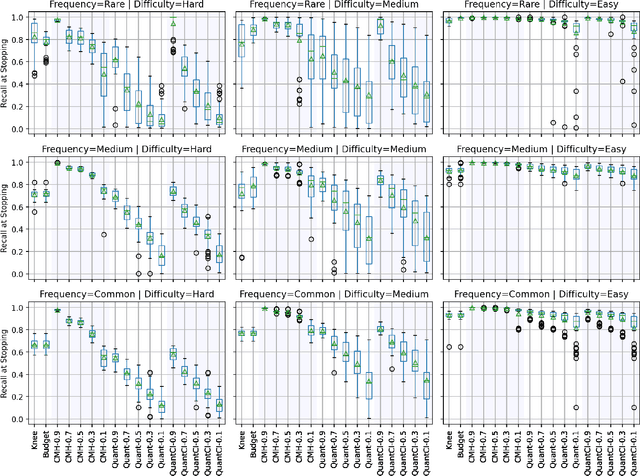

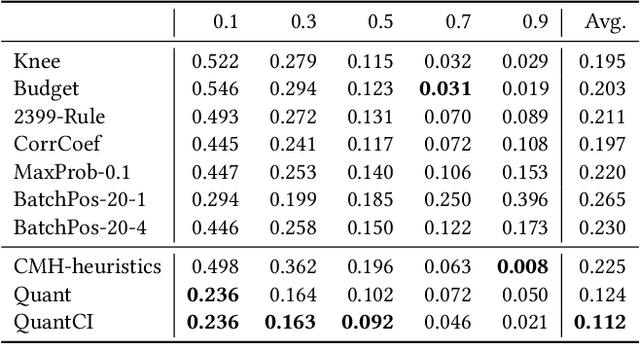

Technology-assisted review (TAR) refers to human-in-the-loop active learning workflows for finding relevant documents in large collections. These workflows often must meet a target for the proportion of relevant documents found (i.e., recall) while also holding down costs. A variety of heuristic stopping rules have been suggested for striking this tradeoff in particular settings, but none have been tested against a range of recall targets and tasks. We propose two new heuristic stopping rules, Quant and QuantCI, based on model-based estimation techniques from survey research. We compare them against a range of proposed heuristics and find that they accurately hit a range of recall targets while substantially reducing review costs.
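The general idea of a model-based stopping rule can be sketched as follows. This is a hypothetical illustration, not the authors' Quant or QuantCI implementation. It assumes that the classifier's scores on unreviewed documents are calibrated probabilities of relevance. Under that assumption, it estimates the total number of relevant documents as those found so far plus the sum of those probabilities. For a QuantCI-like variant, it then subtracts `z` approximate standard deviations, obtained with an independent-Bernoulli variance and the delta method (an assumed simplification). The names `recall_estimate`, `should_stop`, and `z` are illustrative.

```python
import math
from typing import Sequence, Tuple


def recall_estimate(found_relevant: int,
                    unreviewed_probs: Sequence[float]) -> Tuple[float, float]:
    """Return (point estimate, approximate std. dev.) of recall.

    Hypothetical sketch: assumes each unreviewed document is relevant
    independently with its predicted (calibrated) probability p_i.
    """
    # Expected number of relevant documents not yet reviewed: U = sum(p_i)
    expected_remaining = sum(unreviewed_probs)
    total = found_relevant + expected_remaining
    if total == 0:
        return 1.0, 0.0  # nothing relevant to find, so recall is trivially 1
    recall = found_relevant / total
    # Var(U) = sum p_i (1 - p_i); propagate to R = F / (F + U) by the
    # delta method, using dR/dU = -F / (F + U)^2
    var_remaining = sum(p * (1.0 - p) for p in unreviewed_probs)
    sd = found_relevant / total ** 2 * math.sqrt(var_remaining)
    return recall, sd


def should_stop(found_relevant: int, unreviewed_probs: Sequence[float],
                target: float, z: float = 0.0) -> bool:
    """Stop once the recall estimate, lowered by z std. devs., meets the target.

    z = 0 gives a point-estimate rule; z > 0 demands a safety margin,
    trading extra review effort for a lower risk of missing the target.
    """
    recall, sd = recall_estimate(found_relevant, unreviewed_probs)
    return recall - z * sd >= target


if __name__ == "__main__":
    probs = [0.5] * 40  # hypothetical scores for 40 unreviewed documents
    print(should_stop(80, probs, target=0.75))         # True: estimate is 0.80
    print(should_stop(80, probs, target=0.75, z=2.0))  # False: 0.80 - 2 * 0.0253 < 0.75
```

In this sketch, the margin parameter makes the rule more conservative: with the same review state, the point-estimate rule stops, while the interval-style rule keeps reviewing until the lower bound also clears the target.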

* 10 pages, 2 figures. Accepted at DocEng '21
