Heterotic String Model Building with Monad Bundles and Reinforcement Learning

Paper and Code

Aug 16, 2021

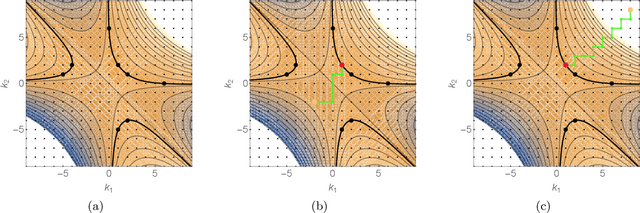

We use reinforcement learning as a means of constructing string compactifications with prescribed properties. Specifically, we study heterotic SO(10) GUT models on Calabi-Yau three-folds with monad bundles, in search of phenomenologically promising examples. Due to the vast number of bundles and the sparseness of viable choices, methods based on systematic scanning are not suitable for this class of models. By focusing on two specific manifolds with Picard numbers two and three, we show that reinforcement learning can be used successfully to explore monad bundles. Training can be accomplished with minimal computing resources and leads to highly efficient policy networks. They produce phenomenologically promising states for nearly 100% of episodes and within a small number of steps. In this way, hundreds of new candidate standard models are found.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge