Heteroscedastic Calibration of Uncertainty Estimators in Deep Learning

Oct 30, 2019

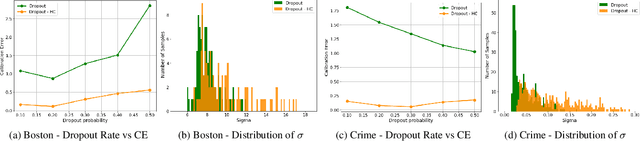

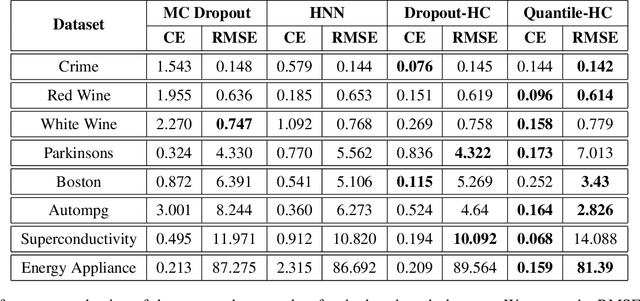

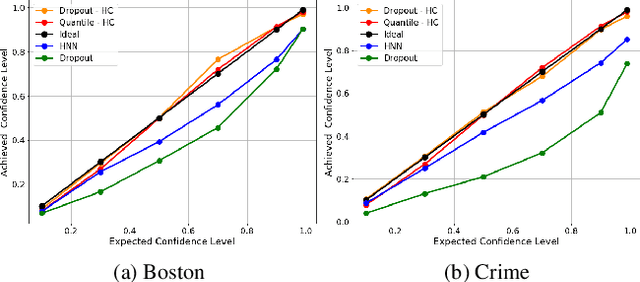

The role of uncertainty quantification (UQ) in deep learning has become crucial with the growing use of predictive models in high-risk applications. Although a large class of methods exists for measuring uncertainty in deep models, in practice the resulting estimates are found to be poorly calibrated, which makes it challenging to translate them into actionable insights. A common workaround is a separate recalibration step that adjusts the estimates to compensate for the miscalibration. Instead, we propose to repurpose the heteroscedastic regression objective as a surrogate for calibration, enabling any existing uncertainty estimator to be inherently calibrated. In addition to eliminating the need for recalibration, this also regularizes the training process. Using regression experiments, we demonstrate the effectiveness of the proposed heteroscedastic calibration with two popular uncertainty estimators.
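As a rough illustration of what a heteroscedastic regression objective looks like, the sketch below computes the standard Gaussian negative log-likelihood (NLL) with a per-sample predicted variance, plus a simple interval-coverage check that is one common way to gauge calibration. This is not the authors' implementation, which this page does not show; the function names `heteroscedastic_nll` and `interval_coverage` are hypothetical, and how the paper attaches this objective to specific uncertainty estimators is not described here. The key property is that each NLL term is minimized when the predicted variance equals the squared residual, so both over- and under-confident variances are penalized.

```python
import math
from typing import Sequence


def heteroscedastic_nll(y: Sequence[float], mu: Sequence[float], var: Sequence[float]) -> float:
    """Mean Gaussian negative log-likelihood with an input-dependent (per-sample) variance.

    Each term is 0.5 * log(2 * pi * var) + (y - mu)**2 / (2 * var).
    Hypothetical helper for illustration only.
    """
    if not (len(y) == len(mu) == len(var)) or len(y) == 0:
        raise ValueError("y, mu and var must be non-empty and of equal length")
    total = 0.0
    for yi, mi, vi in zip(y, mu, var):
        if vi <= 0:
            raise ValueError("variances must be strictly positive")
        total += 0.5 * math.log(2 * math.pi * vi) + (yi - mi) ** 2 / (2 * vi)
    return total / len(y)


def interval_coverage(y: Sequence[float], mu: Sequence[float], var: Sequence[float], z: float = 1.96) -> float:
    """Fraction of targets falling inside mu +/- z * sigma.

    For a well-calibrated Gaussian predictor and z = 1.96, this should be close to 0.95.
    Hypothetical helper for illustration only.
    """
    hits = sum(abs(yi - mi) <= z * math.sqrt(vi) for yi, mi, vi in zip(y, mu, var))
    return hits / len(y)


if __name__ == "__main__":
    # Every residual has magnitude 1, so the "honest" variance is 1.0.
    y, mu = [1.0, -1.0, 1.0], [0.0, 0.0, 0.0]
    for v in (0.01, 1.0, 100.0):
        nll = heteroscedastic_nll(y, mu, [v] * len(y))
        print(f"var={v:>6}: NLL={nll:.3f}")
    # The smallest NLL is obtained at var == 1.0, matching the squared residual.
```

Because the variance term appears both inside the logarithm and as the denominator of the squared error, a model cannot lower this loss by shrinking or inflating its variances indiscriminately, which is the intuition behind using such an objective as a calibration surrogate.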
