Hermitian Symmetric Spaces for Graph Embeddings


May 11, 2021

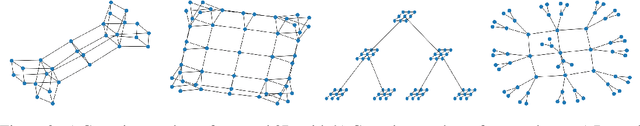

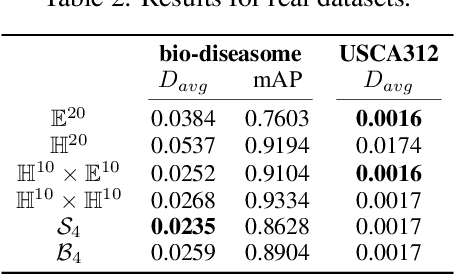

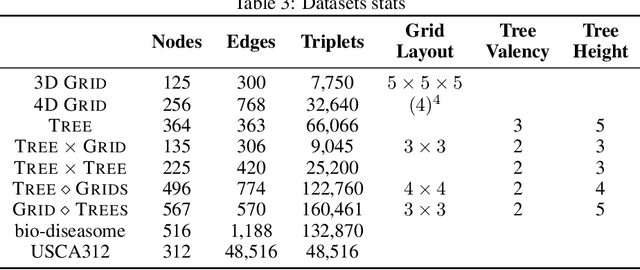

Learning faithful graph representations as sets of vertex embeddings has become a fundamental intermediate step in a wide range of machine learning applications. The quality of the embeddings is usually determined by how well the geometry of the target space matches the structure of the data. In this work we learn continuous representations of graphs in spaces of symmetric matrices over ℂ. These spaces offer a rich geometry that simultaneously admits hyperbolic and Euclidean subspaces, and they are amenable to analysis and explicit computation. We implement an efficient method to learn embeddings and compute distances, and we develop the tools needed to operate in such spaces. The proposed models adapt automatically to very dissimilar graph structures without any a priori estimates of graph features. On various datasets with very diverse structural properties and reconstruction measures, our model matches the results of competitive baselines for geometrically pure graphs and outperforms them for graphs with mixed geometric features, showcasing the versatility of our approach.
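The abstract does not spell out how distances are computed. As an illustration only, the sketch below assumes the target space is the Siegel upper half-space (complex symmetric n x n matrices Z = X + iY with Y positive definite) and uses Siegel's classical cross-ratio formula for its Riemannian distance. This is not necessarily the paper's implementation, and the function name `siegel_distance` is hypothetical. The cross-ratio R(Z1, Z2) has real eigenvalues r_k in [0, 1), and the distance is the Euclidean norm of the values log((1 + √r_k) / (1 − √r_k)).

```python
import numpy as np


def siegel_distance(z1: np.ndarray, z2: np.ndarray) -> float:
    """Hypothetical helper: Riemannian distance in the Siegel upper half-space.

    Assumes each point is a complex symmetric n x n matrix Z = X + iY whose
    imaginary part Y is positive definite. Uses Siegel's cross-ratio
        R = (Z1 - Z2)(Z1 - conj(Z2))^-1 (conj(Z1) - conj(Z2))(conj(Z1) - Z2)^-1,
    whose eigenvalues r_k lie in [0, 1), and returns
        sqrt(sum_k log((1 + sqrt(r_k)) / (1 - sqrt(r_k)))^2).
    """
    z1c, z2c = np.conj(z1), np.conj(z2)
    # Z1 - conj(Z2) and conj(Z1) - Z2 have imaginary parts +/-(Y1 + Y2),
    # which are definite, so both matrices are invertible.
    r = (z1 - z2) @ np.linalg.inv(z1 - z2c) @ (z1c - z2c) @ np.linalg.inv(z1c - z2)
    # The eigenvalues are real in exact arithmetic; drop round-off imaginary
    # parts and clip so that sqrt and log stay finite.
    eig = np.clip(np.linalg.eigvals(r).real, 0.0, 1.0 - 1e-15)
    s = np.sqrt(eig)
    return float(np.sqrt(np.sum(np.log((1.0 + s) / (1.0 - s)) ** 2)))


if __name__ == "__main__":
    # n = 1: the Siegel space is the hyperbolic upper half-plane.
    print(siegel_distance(np.array([[1j]]), np.array([[2j]])))  # log 2
    # Purely imaginary diagonal matrices diag(i * e^{a_k}) form a flat
    # (Euclidean) subspace: the distance is the norm of the a_k differences.
    print(siegel_distance(np.diag([1j, 1j]), np.diag([np.e * 1j, np.e**2 * 1j])))
```

The two usage lines reflect the claim that these spaces contain both hyperbolic and Euclidean pieces. For n = 1 the distance between i and 2i is log 2, the hyperbolic distance. For the diagonal points diag(i, i) and diag(e·i, e²·i) it is √(1² + 2²) = √5, a Euclidean distance in the log coordinates. The explicit inverses keep the sketch readable; `np.linalg.solve` would be the more numerically careful choice.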
