HAWKEYE: Adversarial Example Detector for Deep Neural Networks

Paper and Code

Sep 22, 2019

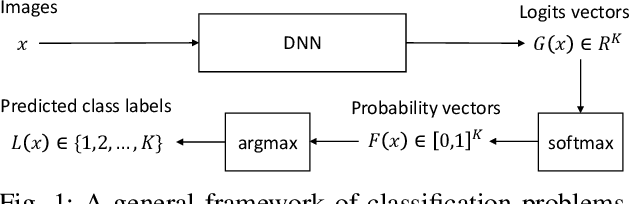

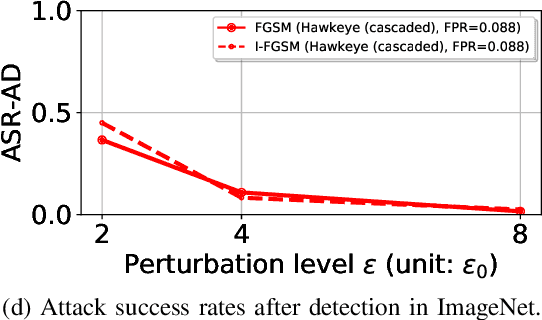

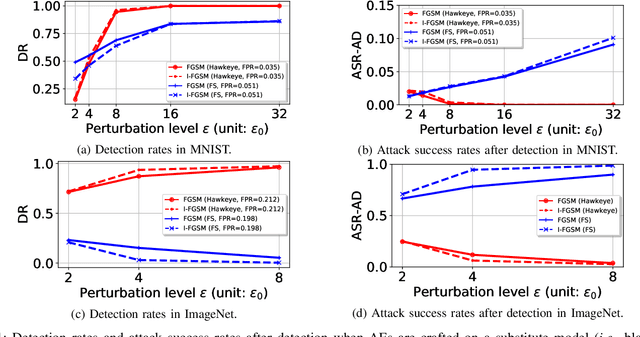

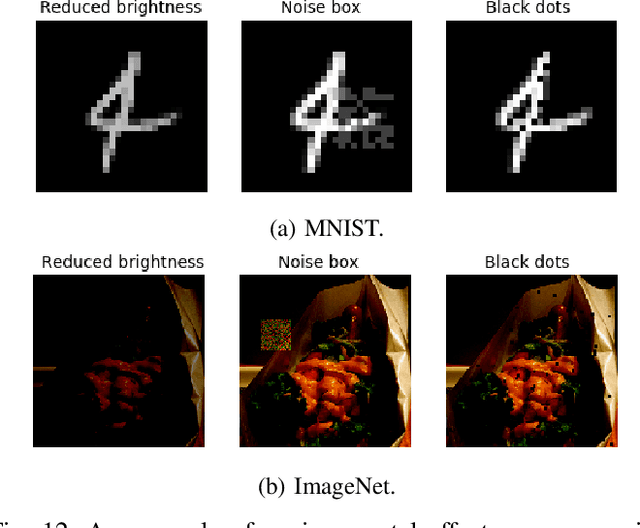

Adversarial examples (AEs) are images that mislead deep neural network (DNN) classifiers by introducing slight perturbations into the original images. Recent work has shown that detecting AEs can be a more effective defense than preventing them from being generated. However, state-of-the-art AE detection still has a high false positive rate and therefore rejects a considerable number of normal images. To address this issue, we propose HAWKEYE, a separate neural network that analyzes the output layer of the DNN and detects AEs. HAWKEYE's AE detector uses a quantized version of the input image as a reference, and is trained to distinguish the variation characteristics of the DNN output on the input image from the DNN output on its reference image. We also show that cascading AE detectors trained for different quantization step sizes can drastically reduce the false positive rate while keeping the detection rate high.
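The detection pipeline described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function names (`quantize`, `detector_features`, `cascade_is_adversarial`), the uniform floor quantizer, the concatenation of the two DNN outputs as detector input, and the rule that an input is flagged only when every cascaded stage flags it are all assumptions made for the sketch. The abstract states only that a quantized reference image is used and that cascading detectors with different step sizes lowers the false positive rate.

```python
import numpy as np

def quantize(image, step):
    """Uniformly quantize pixel values down to multiples of `step`.

    Assumption: a simple floor quantizer; the paper may use a different scheme.
    """
    return (np.floor(image / step) * step).astype(image.dtype)

def detector_features(classifier, image, step):
    """Build the detector input from the DNN outputs on the image and on its
    quantized reference (assumed here to be a plain concatenation)."""
    out_input = np.asarray(classifier(image), dtype=float)
    out_reference = np.asarray(classifier(quantize(image, step)), dtype=float)
    return np.concatenate([out_input, out_reference])

def cascade_is_adversarial(classifier, detectors, image):
    """Run a cascade of (step, detector) pairs.

    Assumption: the image is reported as adversarial only if every stage
    flags it, so a normal image is rejected only when all stages misfire.
    """
    return all(
        detector(detector_features(classifier, image, step))
        for step, detector in detectors
    )
```

Here `classifier` stands for any callable that returns the DNN's output vector, and each `detector` stands for a trained binary model that returns a truthy value for a suspected AE. Requiring agreement across stages is one plausible way to trade a small loss in detection rate for fewer false positives.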
