Have you forgotten? A method to assess if machine learning models have forgotten data

Paper and Code

Apr 21, 2020

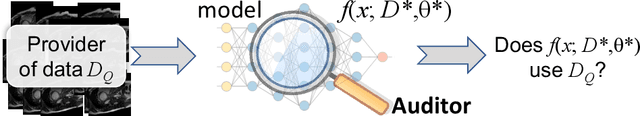

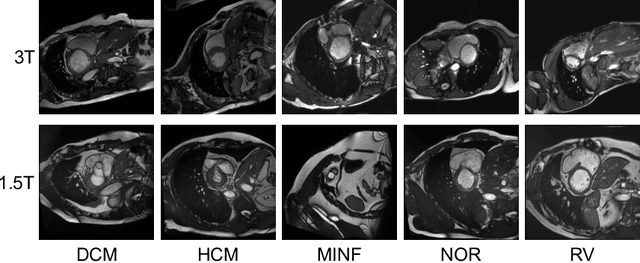

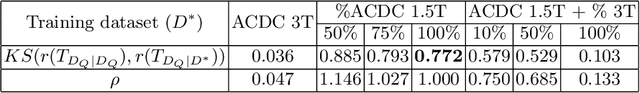

In the era of deep learning, aggregating data from several sources is a common approach to ensuring data diversity. Consider a scenario where several providers contribute data to a consortium for the joint development of a classification model (hereafter the target model), but one of the providers now decides to leave. The provider requests not only that their data (hereafter the query dataset) be removed from the databases but also that the model "forgets" their data. In this paper, for the first time, we address the challenging question of whether data have been forgotten by a model. We assume knowledge of the query dataset and the distribution of a model's output activations. We establish statistical methods that compare the outputs of the target model with the outputs of models trained with different datasets. We evaluate our approach on several benchmark datasets (MNIST, CIFAR-10 and SVHN) and on a cardiac pathology diagnosis task using data from the Automated Cardiac Diagnosis Challenge (ACDC). We hope to encourage investigations on what information a model retains and inspire extensions in more complex settings.
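To make the idea of comparing output distributions concrete, the following is a minimal sketch and not the paper's implementation. It assumes the comparison is done with a two-sample Kolmogorov-Smirnov statistic, which the abstract does not specify, and it uses synthetic numbers in place of real output activations. The names `ks_statistic`, `trained_with`, `trained_without` and `target` are hypothetical.

```python
import random


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance both samples past every value <= x, then compare the CDFs at x.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


# Synthetic stand-ins for output activations on the query dataset (assumed values).
rng = random.Random(0)
trained_with = [rng.gauss(0.90, 0.05) for _ in range(500)]     # reference model that saw the query data
trained_without = [rng.gauss(0.60, 0.15) for _ in range(500)]  # reference model that never saw it
target = [rng.gauss(0.88, 0.06) for _ in range(500)]           # model under assessment

d_with = ks_statistic(target, trained_with)
d_without = ks_statistic(target, trained_without)

# If the target's outputs sit closer to the "never saw it" reference,
# that is evidence the query data have been forgotten.
looks_forgotten = d_without < d_with
print(f"d_with={d_with:.3f} d_without={d_without:.3f} looks_forgotten={looks_forgotten}")
```

In this toy setup the target's outputs resemble those of the model trained with the query data, so the sketch reports that the data do not look forgotten. A real assessment would need actual model outputs and a principled decision threshold rather than a direct comparison of two distances.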
