Harnessing Reinforcement Learning for Neural Motion Planning

Jun 01, 2019

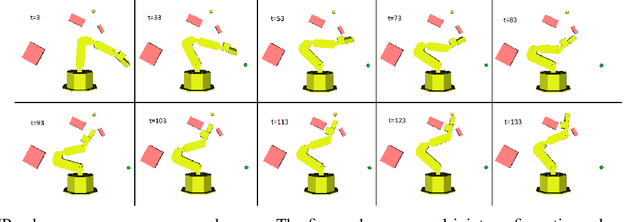

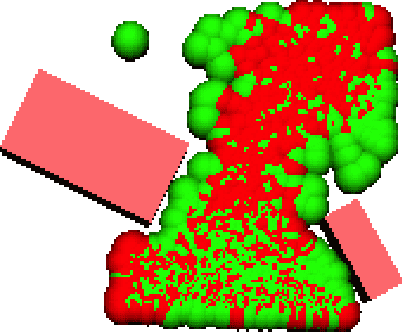

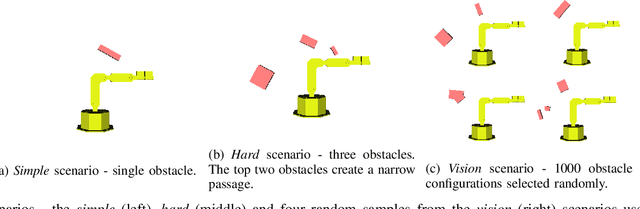

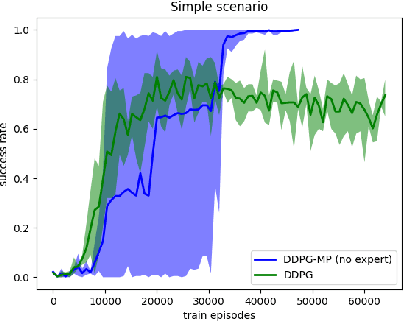

Motion planning is an essential component in most of today's robotic applications. In this work, we consider the learning setting, where a set of solved motion planning problems is used to improve the efficiency of motion planning on different, yet similar, problems. This setting is important in applications with rapidly changing environments, such as e-commerce. We investigate a general deep-learning-based approach, where a neural network is trained to map an image of the domain, the current robot state, and a goal robot state to the next robot state in the plan. We focus on the learning algorithm, and compare supervised learning methods with reinforcement learning (RL) algorithms. We first establish that supervised learning approaches are less accurate because they have insufficient data near the boundaries of obstacles, an issue that RL methods mitigate by actively exploring the domain. We then propose a modification of the popular DDPG RL algorithm that is tailored to motion planning domains, exploiting the known model of the problem and the set of solved plans in the data. We show that our algorithm, dubbed DDPG-MP, significantly improves the accuracy of the learned motion planning policy. Finally, we show that, given enough training data, our method can plan significantly faster on novel domains than off-the-shelf sampling-based motion planners. Results of our experiments are shown at https://youtu.be/wHQ4Y4mBRb8.
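
To make the policy interface described above concrete, here is a minimal sketch of how a mapping from (domain image, current state, goal state) to the next state could be queried repeatedly to produce a plan. It is an illustration under stated assumptions, not the paper's implementation: the names `rollout` and `straight_line_policy`, the step size, and the tolerance are all hypothetical, and the placeholder policy simply moves toward the goal while ignoring the image, where a trained network would use the image to avoid obstacles.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, ...]
Image = Sequence[Sequence[int]]
Policy = Callable[[Image, State, State], State]


def straight_line_policy(image: Image, state: State, goal: State,
                         step: float = 0.1) -> State:
    """Hypothetical stand-in for a trained network.

    Ignores the image and moves at most `step` toward the goal.
    A learned policy would instead condition on the image.
    """
    delta = [g - s for s, g in zip(state, goal)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist <= step:
        return tuple(goal)
    scale = step / dist
    return tuple(s + scale * d for s, d in zip(state, delta))


def rollout(policy: Policy, image: Image, start: State, goal: State,
            tol: float = 1e-6, max_steps: int = 1000) -> List[State]:
    """Query the policy repeatedly, collecting states until the goal is reached.

    Stops when the last state is within `tol` of the goal or after
    `max_steps` queries. No collision checking is done here (assumption:
    a real system would validate each transition against the known model).
    """
    plan = [tuple(start)]
    for _ in range(max_steps):
        if math.dist(plan[-1], goal) <= tol:
            break
        plan.append(policy(image, plan[-1], goal))
    return plan


if __name__ == "__main__":
    empty_image = [[0, 0], [0, 0]]  # placeholder occupancy grid
    for state in rollout(straight_line_policy, empty_image, (0.0, 0.0), (0.3, 0.4)):
        print(state)
```

In this sketch the plan is simply the sequence of states returned by successive policy queries, which is why planning with a learned policy can be fast: each step costs one forward evaluation rather than a search.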