Haptic Feedback Relocation from the Fingertips to the Wrist for Two-Finger Manipulation in Virtual Reality

Sep 15, 2022

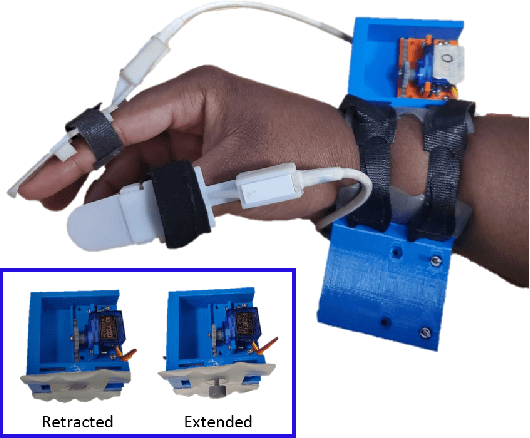

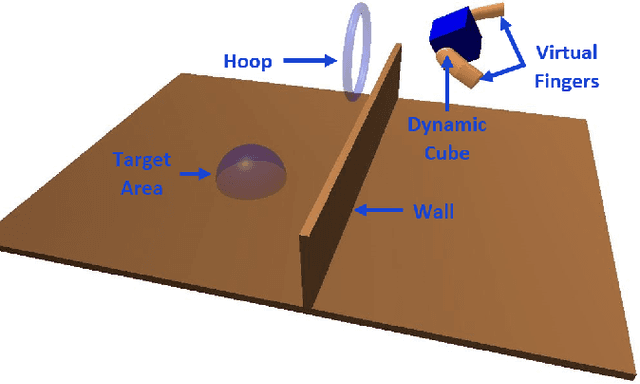

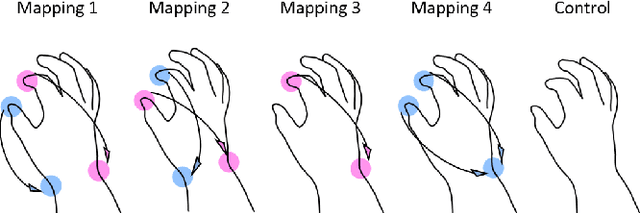

Relocating haptic feedback from the fingertips to the wrist has been proposed as a way to enable haptic interaction with mixed reality virtual environments while leaving the fingers free for other tasks. We present a pair of wrist-worn tactile haptic devices and a virtual environment to study how various mappings between fingers and tactors affect task performance. The haptic feedback rendered to the wrist reflects the interaction forces occurring between a virtual object and virtual avatars controlled by the index finger and thumb. We performed a user study comparing four different finger-to-tactor haptic feedback mappings and one no-feedback condition as a control. We evaluated users' ability to perform a simple pick-and-place task using three metrics: task completion time, path length of the fingers and virtual cube, and magnitudes of normal and shear forces at the fingertips. We found that multiple mappings were effective, and that haptic feedback had a greater impact when visual cues were limited. We discuss the limitations of our approach and describe next steps toward multi-degree-of-freedom haptic rendering for wrist-worn devices to improve task performance in virtual environments.
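
As an illustration of two of the metrics named in the abstract, the sketch below shows one common way to compute a path length from sampled positions and to split a contact force into normal and shear magnitudes. It is not taken from the paper: the function names `path_length` and `normal_shear`, the 3D tuple representation, and the assumption that a surface normal is available at the contact point are all hypothetical choices made for this example.

```python
import math

def path_length(points):
    """Sum of straight-line distances between consecutive 3D samples.

    `points` is assumed to be a sequence of (x, y, z) positions, for
    example a fingertip or cube trajectory sampled over one trial.
    """
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def normal_shear(force, normal):
    """Return (normal magnitude, shear magnitude) of a 3D force.

    `normal` is an assumed contact-surface normal; it does not need to
    be unit length, but it must be non-zero.
    """
    n_len = math.sqrt(sum(c * c for c in normal))
    unit = [c / n_len for c in normal]
    # Component of the force along the surface normal.
    f_n = sum(f * u for f, u in zip(force, unit))
    # Whatever remains after removing the normal component is shear.
    shear_vec = [f - f_n * u for f, u in zip(force, unit)]
    return abs(f_n), math.sqrt(sum(c * c for c in shear_vec))
```

For example, `path_length([(0, 0, 0), (3, 4, 0), (3, 4, 12)])` sums a 5-unit and a 12-unit segment, and `normal_shear((3, 0, 4), (0, 0, 2))` separates the force into a component along the z-axis normal and a tangential remainder.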
