Guiding CNNs towards Relevant Concepts by Multi-task and Adversarial Learning


Aug 04, 2020

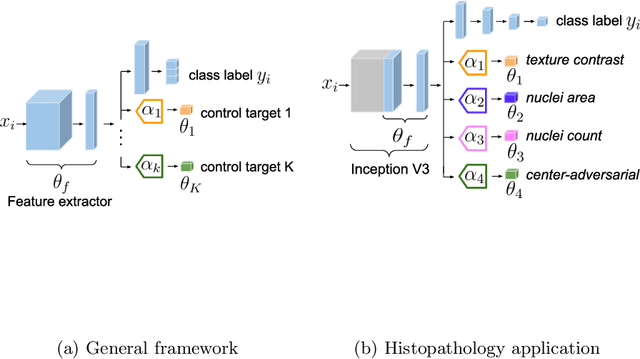

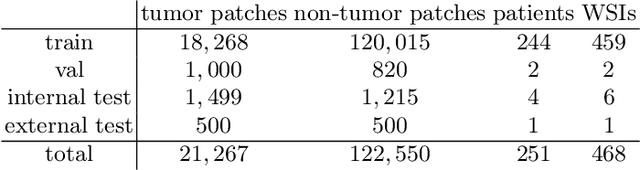

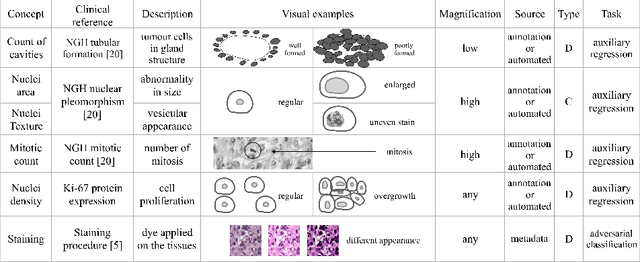

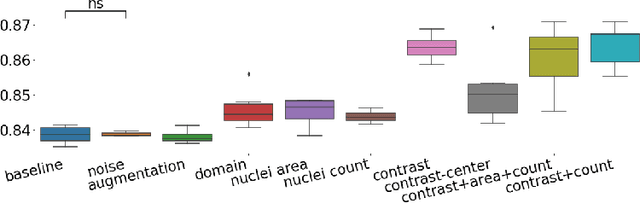

The opacity of deep learning limits its deployment in critical application scenarios such as cancer grading in medical images. In this paper, a framework for guiding CNN training is built on top of the established technique of hard parameter sharing, with the main goal of explicitly introducing expert knowledge into the training objectives. The learning process is guided by identifying concepts that are relevant or misleading for the task. Relevant concepts are encouraged to appear in the representation through multi-task learning. Undesired and misleading concepts are discouraged by a gradient reversal operation. In this way, a shift in the deep representations can be corrected to match the clinicians' assumptions. Applied to breast lymph node histopathology data from the Camelyon challenge, the approach yields a significant increase in generalization performance on unseen patients (from 0.839 to 0.864 average AUC, $\text{p-value} = 0.0002$) when the internal representations are controlled by prior knowledge regarding the acquisition center and visual features of the tissue. The code will be shared for reproducibility on our GitHub repository.
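To make the interplay between the multi-task branch and the gradient reversal operation concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation: the scalar model, the squared-error losses, the function name `combined_gradients`, and the weight `lam` are all assumptions chosen for illustration. A single shared weight `w` stands in for the shared CNN layers, and three scalar heads stand in for the main task, a relevant concept, and a misleading concept (for example, the acquisition center).

```python
def combined_gradients(x, y_main, y_rel, y_adv, w, a, r, b, lam=1.0):
    """Gradients for one scalar sample under hard parameter sharing.

    Hypothetical toy model (illustration only):
      shared feature    z = w * x
      main head         a * z   (target y_main)
      relevant head     r * z   (target y_rel, ordinary multi-task branch)
      adversarial head  b * z   (target y_adv, behind gradient reversal)
    Every loss is a squared error.
    """
    z = w * x
    e_main = a * z - y_main
    e_rel = r * z - y_rel
    e_adv = b * z - y_adv

    # Each head minimises its own loss, so head gradients are left untouched.
    g_a = 2 * e_main * z
    g_r = 2 * e_rel * z
    g_b = 2 * e_adv * z

    # Gradients of each loss with respect to the shared feature z.
    dz_main = 2 * e_main * a
    dz_rel = 2 * e_rel * r
    dz_adv = 2 * e_adv * b

    # Gradient reversal: the adversarial gradient is negated (scaled by lam)
    # before reaching the shared weight, pushing the shared feature to be
    # uninformative about the misleading concept. The relevant-concept
    # gradient is added as usual, pulling that concept into the feature.
    dz = dz_main + dz_rel - lam * dz_adv
    g_w = dz * x
    return {"w": g_w, "a": g_a, "r": g_r, "b": g_b}
```

With `lam=0` the adversarial branch has no effect on the shared weight and the update reduces to plain multi-task learning; increasing `lam` strengthens the penalty on encoding the misleading concept. In an autodiff framework the same effect is usually obtained with a layer that is the identity in the forward pass and negates the gradient in the backward pass.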
