Group Equivariant Generative Adversarial Networks


May 04, 2020

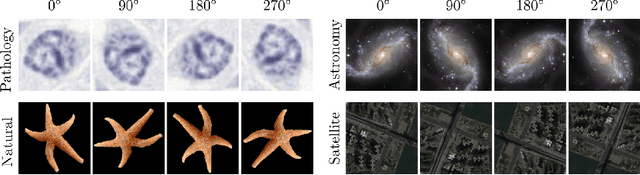

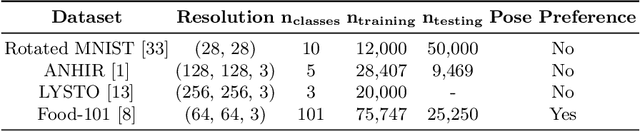

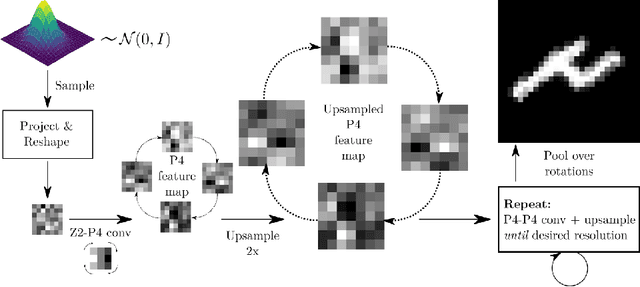

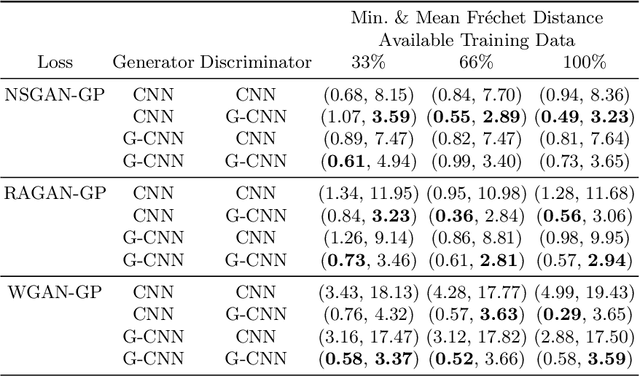

Generative adversarial networks are the state of the art for generative modeling in vision, yet are notoriously unstable in practice. This instability is further exacerbated by limited training data. However, when synthesizing images in domains such as medical or satellite imaging, it is often overlooked that the image label is invariant to global image symmetries (e.g., rotations and reflections). In this work, we improve gradient feedback between generator and discriminator using an inductive symmetry prior via group-equivariant convolutional networks. We replace convolutional layers with equivalent group-convolutional layers in both generator and discriminator, allowing for better optimization steps and increased expressive power with limited samples. In the process, we extend recent GAN developments to the group-equivariant setting. We demonstrate the utility of our methods by improving both sample fidelity and diversity in the class-conditional synthesis of a diverse set of globally symmetric imaging modalities.
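To make the idea of a group-convolutional layer concrete, the following is a minimal sketch of a single "lifting" correlation over the group p4 (rotations by multiples of 90 degrees). It is not the authors' implementation: the function names `rot90`, `correlate2d`, and `p4_lifting_conv` are hypothetical, the kernel is fixed rather than learned, and reflections are left out for brevity. The sketch only illustrates the equivariance property that such layers rely on: rotating the input rotates each response map and cyclically shifts the rotation channel.

```python
def rot90(grid):
    """Rotate a 2D list counterclockwise by 90 degrees."""
    rows, cols = len(grid), len(grid[0])
    return [[grid[j][cols - 1 - i] for j in range(rows)] for i in range(cols)]


def correlate2d(image, kernel):
    """Plain 2D cross-correlation without padding ('valid' mode)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def p4_lifting_conv(image, kernel):
    """Correlate the image with the kernel rotated by 0, 90, 180, 270 degrees.

    Returns a list of four response maps, one per rotation of the kernel.
    """
    responses = []
    rotated = kernel
    for _ in range(4):
        responses.append(correlate2d(image, rotated))
        rotated = rot90(rotated)
    return responses


# Illustrative integer data (assumed, not from the paper) so equality is exact.
image = [[(3 * i + 7 * j * j + i * j) % 11 for j in range(5)] for i in range(5)]
kernel = [[1, 0, -1], [2, 0, -2], [1, 3, -1]]

out = p4_lifting_conv(image, kernel)
out_rot = p4_lifting_conv(rot90(image), kernel)

# Equivariance: rotating the input rotates every response map and shifts
# the rotation channel by one position.
expected = [rot90(out[(r - 1) % 4]) for r in range(4)]
assert out_rot == expected
```

In a full group-equivariant network, later layers would also correlate over the rotation channel, and pooling over that channel at the end would yield a rotation-invariant output; those steps are omitted here.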
