Group Based Deep Shared Feature Learning for Fine-grained Image Classification

Paper and Code

Apr 04, 2020

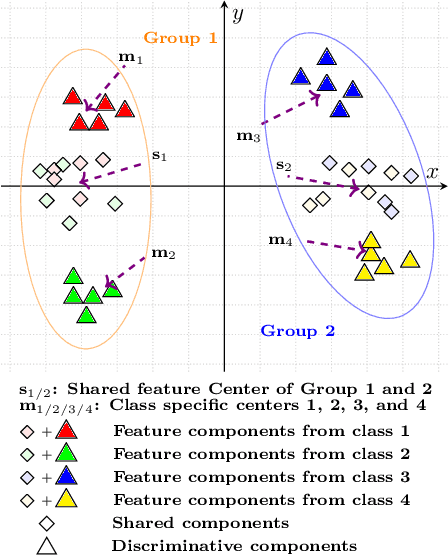

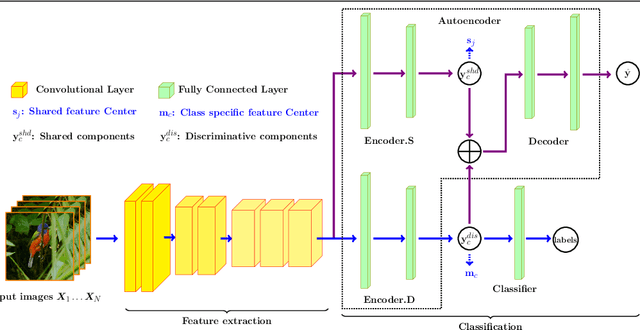

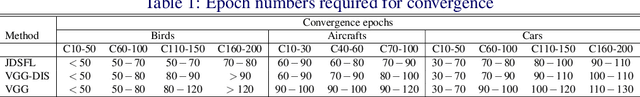

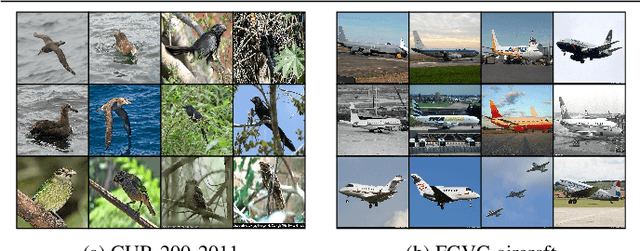

Fine-grained image classification has emerged as a significant challenge because objects in such images have small inter-class visual differences but with large variations in pose, lighting, and viewpoints, etc. Most existing work focuses on highly customized feature extraction via deep network architectures which have been shown to deliver state of the art performance. Given that images from distinct classes in fine-grained classification share significant features of interest, we present a new deep network architecture that explicitly models shared features and removes their effect to achieve enhanced classification results. Our modeling of shared features is based on a new group based learning wherein existing classes are divided into groups and multiple shared feature patterns are discovered (learned). We call this framework Group based deep Shared Feature Learning (GSFL) and the resulting learned network as GSFL-Net. Specifically, the proposed GSFL-Net develops a specially designed autoencoder which is constrained by a newly proposed Feature Expression Loss to decompose a set of features into their constituent shared and discriminative components. During inference, only the discriminative feature component is used to accomplish the classification task. A key benefit of our specialized autoencoder is that it is versatile and can be combined with state-of-the-art fine-grained feature extraction models and trained together with them to improve their performance directly. Experiments on benchmark datasets show that GSFL-Net can enhance classification accuracy over the state of the art with a more interpretable architecture.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge