Graph Reinforcement Learning for Predictive Power Allocation to Mobile Users

Mar 08, 2022

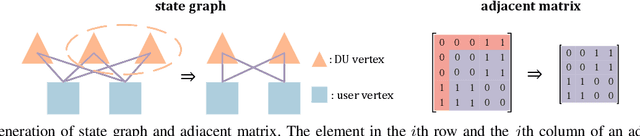

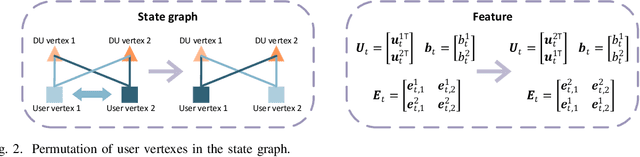

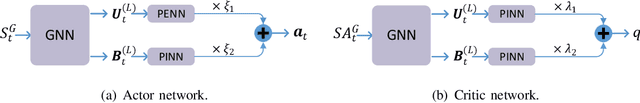

Allocating resources based on future channels can save resources while ensuring the quality of service of video streaming. In this paper, we optimize predictive power allocation to minimize the energy consumed at distributed units (DUs), using deep deterministic policy gradient (DDPG) to find the optimal policy and predict average channel gains. To improve training efficiency, we resort to graph DDPG to exploit two kinds of relational priors: (a) the permutation equivariant (PE) and permutation invariant (PI) properties of the policy function and the action-value function, and (b) the topological relation among users and DUs. To design the graph DDPG framework more systematically in harnessing these priors, we first demonstrate how to transform matrix-based DDPG into graph-based DDPG. Then, we design the actor and critic networks to satisfy the permutation properties when graph neural networks are used in embedding and end-to-end manners, respectively. To avoid destroying the PE/PI properties of the actor and critic networks, we conceive a batch normalization method. Finally, we show the impact of leveraging each prior. Simulation results show that the learned predictive policy performs close to the optimal solution obtained with perfect future information, and that the graph DDPG algorithms converge much faster than existing DDPG algorithms.
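
The PE and PI properties mentioned above can be illustrated with a minimal NumPy sketch. It is not the paper's actor or critic architecture: the layer form (a shared per-user weight plus a weight applied to the mean over users), the function names `pe_layer` and `pi_readout`, and all dimensions are assumptions chosen only to show what the two properties mean. Reordering the users reorders the rows of a PE output in the same way and leaves a PI output unchanged.

```python
import numpy as np

def pe_layer(x, w_self, w_agg):
    """Hypothetical permutation-equivariant layer.

    Each row of x is one user's feature vector. Every user is transformed
    by the same w_self, and the mean over all users is transformed by w_agg,
    so permuting the rows of x permutes the rows of the output identically.
    """
    mean = x.mean(axis=0, keepdims=True)  # order-independent summary of all users
    return np.tanh(x @ w_self + mean @ w_agg)

def pi_readout(h, w_out):
    """Hypothetical permutation-invariant readout: mean over users, then a linear map."""
    return float(h.mean(axis=0) @ w_out)

# Illustrative sizes and random weights (assumptions, not values from the paper).
rng = np.random.default_rng(0)
num_users, d_in, d_hid = 4, 3, 5
x = rng.normal(size=(num_users, d_in))
w_self = rng.normal(size=(d_in, d_hid))
w_agg = rng.normal(size=(d_in, d_hid))
w_out = rng.normal(size=d_hid)
perm = rng.permutation(num_users)

# PE check: permuting the input rows permutes the output rows the same way.
actor_out = pe_layer(x, w_self, w_agg)
actor_out_perm = pe_layer(x[perm], w_self, w_agg)
pe_holds = np.allclose(actor_out[perm], actor_out_perm)

# PI check: the scalar readout does not depend on the user ordering.
critic_val = pi_readout(actor_out, w_out)
critic_val_perm = pi_readout(actor_out_perm, w_out)
pi_holds = np.isclose(critic_val, critic_val_perm)

print(pe_holds, pi_holds)
```

Because the weights are shared across users, a network built from such layers does not have to learn the same mapping separately for every user ordering, which is one intuition for why exploiting these priors can improve training efficiency.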
