GPR-based Model Reconstruction System for Underground Utilities Using GPRNet

Nov 05, 2020

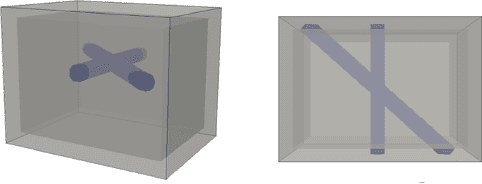

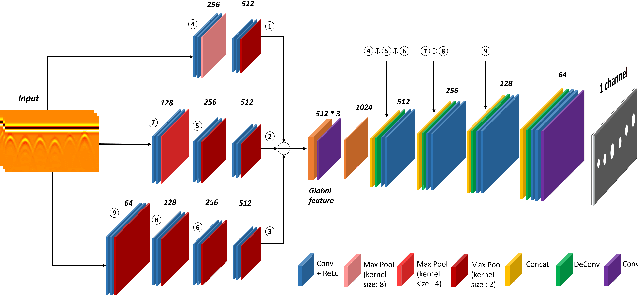

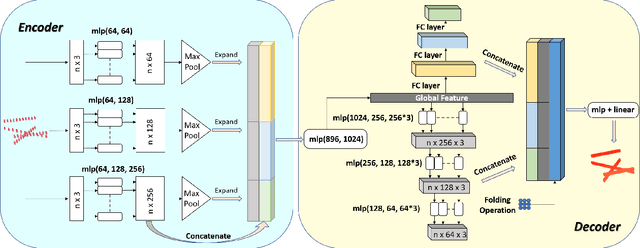

Ground Penetrating Radar (GPR) is one of the most important non-destructive evaluation (NDE) instruments for detecting and locating underground objects (e.g., rebars and utility pipes). Much of the previous research focuses only on GPR image-based feature detection, and none of it can process sparse GPR measurements to reconstruct a fine, detailed 3D model of underground objects for better visualization. To address this problem, this paper presents a novel robotic system that collects GPR data, localizes underground utilities, and reconstructs a dense point cloud model of the underground objects. The system is composed of three modules: 1) a visual-inertial-based GPR data collection module, which tags the GPR measurements with positioning information provided by an omnidirectional robot; 2) a deep neural network (DNN) migration module, which interprets the raw GPR B-scan image as a cross-section of the object model; and 3) a DNN-based 3D reconstruction module, i.e., GPRNet, which generates a fine 3D point cloud model of the underground utility. The experiments show that our method can generate a dense and complete point cloud model of pipe-shaped utilities from sparse input, i.e., raw GPR data, with various levels of incompleteness and noise. Experimental results on synthetic data as well as field test data verify the effectiveness of our method.
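As an illustration of the first module only, the following is a minimal sketch of how GPR measurements might be tagged with robot positions. It is not the authors' implementation: it assumes that poses and GPR traces carry timestamps from a shared clock, that pose timestamps are strictly increasing, and that linear interpolation between neighboring poses is acceptable. All names (`TaggedScan`, `interpolate_pose`, `tag_scans`) are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class TaggedScan:
    """One GPR trace (A-scan) with an interpolated 2D position (hypothetical type)."""
    t: float
    x: float
    y: float
    trace: Sequence[float]


def interpolate_pose(pose_t: Sequence[float],
                     pose_xy: Sequence[Tuple[float, float]],
                     t: float) -> Tuple[float, float]:
    """Linearly interpolate the robot position at time t.

    Assumes pose_t is strictly increasing. Times outside the pose
    range are clamped to the first or last known position.
    """
    if t <= pose_t[0]:
        return pose_xy[0]
    if t >= pose_t[-1]:
        return pose_xy[-1]
    i = bisect_right(pose_t, t)          # pose_t[i-1] <= t < pose_t[i]
    t0, t1 = pose_t[i - 1], pose_t[i]
    w = (t - t0) / (t1 - t0)             # interpolation weight in [0, 1)
    (x0, y0), (x1, y1) = pose_xy[i - 1], pose_xy[i]
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0))


def tag_scans(pose_t: Sequence[float],
              pose_xy: Sequence[Tuple[float, float]],
              scan_t: Sequence[float],
              traces: Sequence[Sequence[float]]) -> List[TaggedScan]:
    """Attach an interpolated position to every GPR trace."""
    tagged = []
    for t, trace in zip(scan_t, traces):
        x, y = interpolate_pose(pose_t, pose_xy, t)
        tagged.append(TaggedScan(t=t, x=x, y=y, trace=trace))
    return tagged
```

In this sketch, the tagged traces would then be passed on to the migration and reconstruction modules, which are learned networks and are not sketched here.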
