Genetic programming approaches to learning fair classifiers


Apr 28, 2020

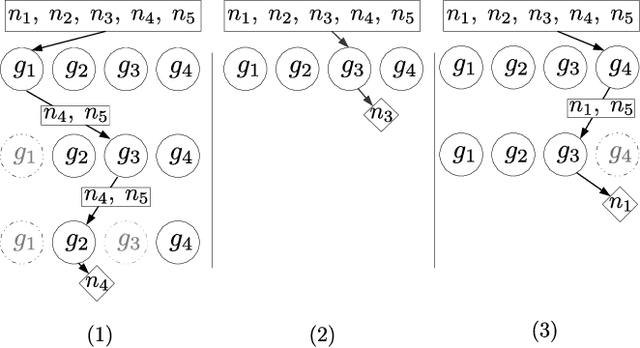

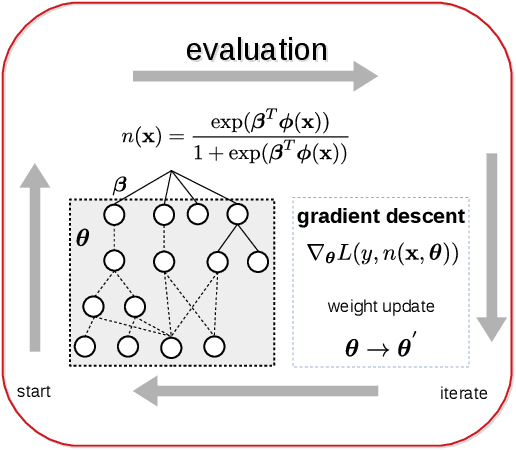

Society has come to rely on algorithms such as classifiers for important decisions, giving rise to the need for ethical guarantees such as fairness. Fairness is typically defined by asking that some statistic of a classifier be approximately equal over protected groups within a population. In this paper, current approaches to fairness are discussed and used to motivate algorithmic proposals that incorporate fairness into genetic programming for classification. We propose two ideas. The first is to incorporate a fairness objective into multi-objective optimization. The second is to adapt lexicase selection to define cases dynamically over intersections of protected groups. We describe why lexicase selection is well suited to pressure models to perform well across the potentially infinitely many subgroups over which fairness is desired. We use a recent genetic programming approach to construct models on four datasets for which fairness constraints are necessary, and empirically compare performance to prior methods that use game-theoretic solutions. Methods are assessed on their ability to generate trade-offs between subgroup fairness and accuracy that are Pareto optimal. The results show that genetic programming methods in general, and random search in particular, are well suited to this task.
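
To make the second idea more concrete, the following is a minimal sketch of lexicase selection in which each case is a subgroup formed by intersecting protected attributes, rather than a single training sample. It is an illustration under stated assumptions, not the paper's implementation: the function names (`intersection_groups`, `subgroup_error`, `lexicase_select`), the use of misclassification rate as the per-case statistic, and the restriction to full intersections of all protected attributes are all hypothetical choices made here for brevity.

```python
import random


def intersection_groups(protected):
    """Map each observed combination of protected attribute values
    to the list of sample indices that share it.

    `protected` is a dict: attribute name -> list of values, one per sample.
    (Hypothetical helper; the paper's case construction may differ.)
    """
    names = sorted(protected)
    n_samples = len(protected[names[0]])
    groups = {}
    for i in range(n_samples):
        key = tuple(protected[a][i] for a in names)
        groups.setdefault(key, []).append(i)
    return groups


def subgroup_error(pred, y, idx):
    """Misclassification rate of one model's predictions on one subgroup."""
    return sum(pred[i] != y[i] for i in idx) / len(idx)


def lexicase_select(population_preds, y, groups, rng):
    """Pick one parent index by lexicase selection over subgroup cases.

    Cases are visited in a fresh random order for every selection event;
    only the candidates with the lowest error on the current case survive.
    """
    candidates = list(range(len(population_preds)))
    cases = list(groups.values())
    rng.shuffle(cases)
    for idx in cases:
        errs = {c: subgroup_error(population_preds[c], y, idx) for c in candidates}
        best = min(errs.values())
        candidates = [c for c in candidates if errs[c] == best]
        if len(candidates) == 1:
            break
    # Ties that survive every case are broken at random.
    return rng.choice(candidates)


if __name__ == "__main__":
    # Toy data (assumed for illustration): two protected attributes, four samples.
    y = [1, 0, 1, 0]
    protected = {"sex": ["f", "f", "m", "m"], "race": ["a", "b", "a", "b"]}
    preds = [
        [1, 0, 1, 0],  # model 0: correct on every subgroup
        [1, 0, 0, 1],  # model 1: correct only on the "f" subgroups
        [0, 1, 0, 1],  # model 2: wrong everywhere
    ]
    groups = intersection_groups(protected)
    parent = lexicase_select(preds, y, groups, random.Random(0))
    print("selected parent:", parent)
```

Because the case order is reshuffled at every selection event, a model that fails badly on any one subgroup can be filtered out whenever that subgroup comes early, which is one way to read the abstract's claim that lexicase selection pressures models to perform well across many subgroups.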
