Generative Well-intentioned Networks

Paper and Code

Oct 28, 2019

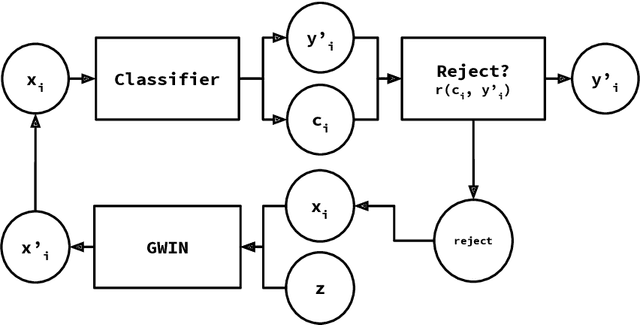

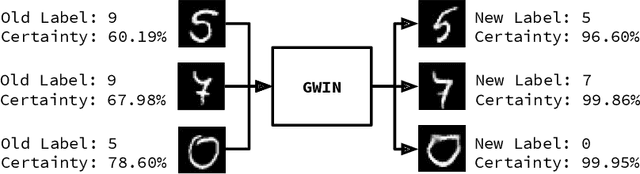

We propose Generative Well-intentioned Networks (GWINs), a novel framework for increasing the accuracy of certainty-based, closed-world classifiers. A conditional generative network recovers the distribution of observations that the classifier labels correctly with high certainty. We introduce a reject option to the classifier during inference, allowing the classifier to reject an observation instance rather than predict an uncertain label. These rejected observations are translated by the generative network to high-certainty representations, which are then relabeled by the classifier. This architecture allows for any certainty-based classifier or rejection function and is not limited to multilayer perceptrons. The capability of this framework is assessed using benchmark classification datasets and shows that GWINs significantly improve the accuracy of uncertain observations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge