Generative Residual Attention Network for Disease Detection

Oct 25, 2021

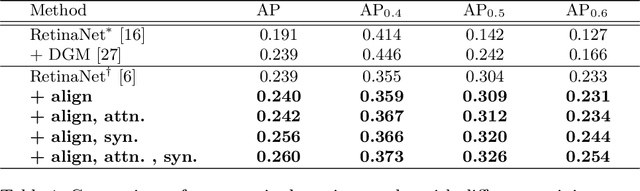

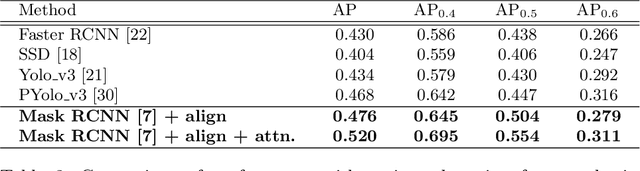

Accurate identification and localization of abnormalities in radiology images play a critical role in computer-aided diagnosis (CAD) systems. Building a highly generalizable system usually requires a large amount of data with high-quality annotations, including disease-specific global and localization information. However, in medical imaging, only a limited number of high-quality images and annotations are available because annotation is expensive. In this paper, we address this problem by presenting a novel approach for disease generation in X-rays using conditional generative adversarial learning. Specifically, given a chest X-ray image from a source domain, we generate a corresponding radiology image in a target domain while preserving the identity of the patient. We then use the generated target-domain X-ray images to augment the training set and improve detection performance. We also present a unified framework that simultaneously performs disease generation and localization. We evaluate the proposed approach on the X-ray image dataset provided by the Radiological Society of North America (RSNA), where it surpasses state-of-the-art baseline detection algorithms.
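The augmentation step described in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the names `augment_with_generated` and `toy_generator`, the label encoding (0 for the source domain, 1 for the target domain), and the nested-list image format are all assumptions made for illustration, and `toy_generator` is only a placeholder for a trained conditional generator.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]   # toy stand-in for an X-ray pixel grid
Sample = Tuple[Image, int]  # (image, label); 0 = source, 1 = target (assumed encoding)


def augment_with_generated(
    dataset: List[Sample],
    generator: Callable[[Image, int], Image],
    source_label: int = 0,
    target_label: int = 1,
) -> List[Sample]:
    """Return the dataset plus one generated target-domain image per source-domain sample."""
    generated = [
        (generator(image, target_label), target_label)
        for image, label in dataset
        if label == source_label
    ]
    return dataset + generated


def toy_generator(image: Image, target_label: int) -> Image:
    """Hypothetical placeholder for a trained conditional generator.

    It copies the input and brightens one pixel, so the rest of the
    image (the "identity") is left unchanged.
    """
    out = [row[:] for row in image]  # copy so the source image is not mutated
    out[0][0] = min(1.0, out[0][0] + 0.5 * target_label)
    return out


train = [([[0.1, 0.2], [0.3, 0.4]], 0), ([[0.5, 0.6], [0.7, 0.8]], 1)]
augmented = augment_with_generated(train, toy_generator)
print(len(train), len(augmented))
```

In this sketch the original samples are kept and the generated ones are appended, so a detector trained on `augmented` sees both real and synthesized target-domain examples.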
