Generative Design by Reinforcement Learning: Maximizing Diversity of Topology Optimized Designs


Aug 17, 2020

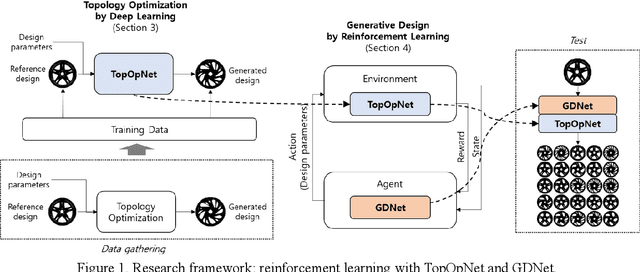

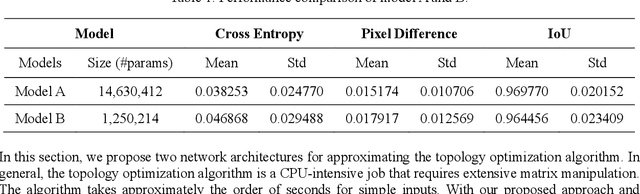

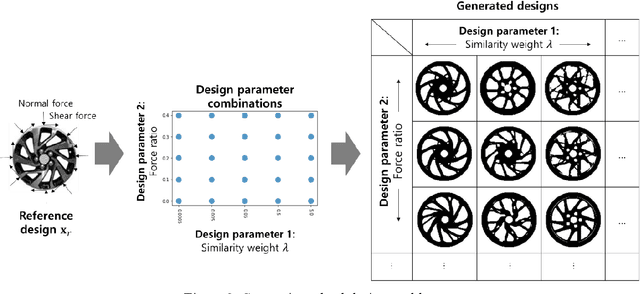

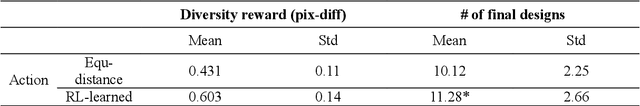

Generative design is a design exploration process in which a large number of structurally optimal designs are generated in parallel by diversifying the parameters of topology optimization while satisfying given constraints. Recently, data-driven generative design has gained much attention due to its integration with artificial intelligence (AI) technologies. When new designs are generated through a generative approach, diversity is one of the important evaluation factors. In general, the problem definition of topology optimization is diversified by varying the force and boundary conditions, and the diversity of the generated designs is influenced by such parameter combinations. This study proposes a reinforcement learning (RL) based generative design process with reward functions that maximize the diversity of the designs. We formulate generative design as a sequential problem of finding optimal parameter level values for a given initial design. Proximal Policy Optimization (PPO) is applied as the learning framework and demonstrated in a case study of an automotive wheel design problem. This study also proposes the use of a deep neural network to instantly generate new designs without the topology optimization process, thus reducing the large computational burden required by reinforcement learning. We show that RL-based generative design produces a large number of diverse designs within a short inference time by exploiting a GPU in a fully automated manner. This differs from the previous CPU-based approach, which takes much more processing time and involves human intervention.
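The abstract does not spell out the form of the diversity reward, so the following is only an illustrative sketch of one way such a reward could be computed, not the authors' formulation. It assumes each design is a flattened density image with values in [0, 1] and scores a candidate by its mean absolute pixel difference to the nearest previously generated design. The names `l1_distance`, `diversity_reward`, and `archive` are hypothetical.

```python
from typing import List, Sequence

# Assumption: a design is a flattened density image with values in [0, 1].
Design = Sequence[float]


def l1_distance(a: Design, b: Design) -> float:
    """Mean absolute per-pixel difference between two equally sized designs."""
    if len(a) != len(b):
        raise ValueError("designs must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def diversity_reward(candidate: Design, archive: List[Design]) -> float:
    """Distance from the candidate to its nearest neighbour in the archive.

    A candidate that duplicates an existing design scores 0.0; one that is
    far from every stored design scores higher. An empty archive yields 0.0
    (an arbitrary convention chosen for this sketch).
    """
    if not archive:
        return 0.0
    return min(l1_distance(candidate, d) for d in archive)


# Toy 2x2 designs, flattened to length 4.
archive = [[0.0, 0.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
near_copy = [0.0, 0.0, 1.0, 1.0]
novel = [0.0, 1.0, 0.0, 1.0]

print(diversity_reward(near_copy, archive))
print(diversity_reward(novel, archive))
```

In an RL loop of the kind the abstract describes, a value like this could serve as the per-step reward after the agent picks parameter levels and a design is produced, so that the policy is pushed away from parameter combinations that reproduce existing designs.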
