Generating Unbiased Pseudo-labels via a Theoretically Guaranteed Chebyshev Constraint to Unify Semi-supervised Classification and Regression

Paper and Code

Nov 03, 2023

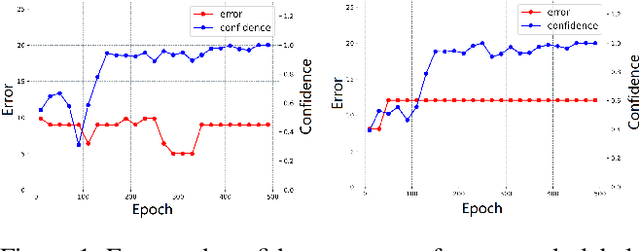

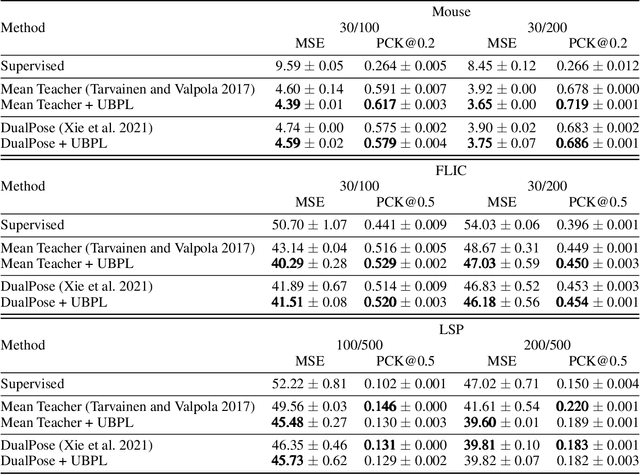

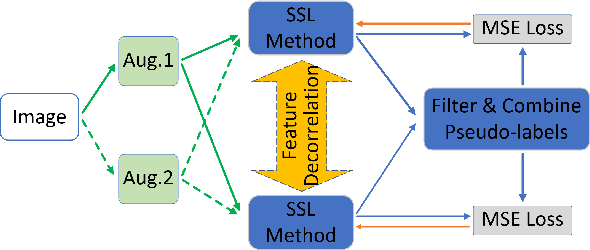

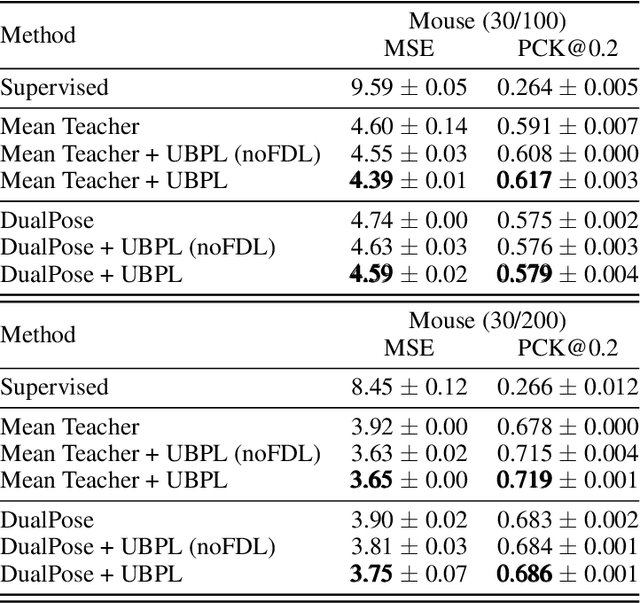

Both semi-supervised classification and regression are practically challenging tasks for computer vision. However, semi-supervised classification methods are rarely applied to regression tasks, because the threshold-to-pseudo-label process (T2L) in classification uses confidence to determine label quality. This works well for classification but is ineffective for regression. By nature, regression also requires unbiased methods to generate high-quality labels. Moreover, T2L for classification often fails if the confidence is generated by a biased method. To address this issue, we propose a theoretically guaranteed constraint for generating unbiased labels based on Chebyshev's inequality, combining multiple predictions to generate superior-quality labels from several inferior ones. Because it produces high-quality labels directly, the unbiased method naturally avoids the drawback of T2L. Specifically, we propose an Unbiased Pseudo-labels network (UBPL network) with multiple branches that combines multiple predictions into pseudo-labels, together with a Feature Decorrelation loss (FD loss) derived from the Chebyshev constraint. In principle, our method can be used for both classification and regression and can be easily extended to any semi-supervised framework, e.g. Mean Teacher, FixMatch, DualPose. Our approach achieves superior performance over SOTAs on the pose estimation datasets Mouse, FLIC and LSP, as well as the classification datasets CIFAR10/100 and SVHN.
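
To make the role of Chebyshev's inequality concrete, the following is a minimal sketch of the standard argument for why averaging several predictions can yield a better label than any single one. It is not the paper's exact statement: the symbols $\hat{y}_i$, $y$, $\sigma^2$, $\rho$, $n$ and $\varepsilon$ are assumptions introduced here for illustration, and the predictions are assumed to be unbiased with equal variance.

```latex
% Assumptions (illustrative, not taken from the paper):
%   n branch predictions \hat{y}_1, ..., \hat{y}_n of a target y,
%   each unbiased, E[\hat{y}_i] = y, with variance Var(\hat{y}_i) = \sigma^2.
\bar{y} = \frac{1}{n} \sum_{i=1}^{n} \hat{y}_i ,
\qquad
\mathbb{E}[\bar{y}] = y .

% If the predictions are pairwise uncorrelated:
\operatorname{Var}(\bar{y}) = \frac{\sigma^2}{n} .

% Chebyshev's inequality applied to \bar{y}, for any \varepsilon > 0:
\Pr\bigl( \lvert \bar{y} - y \rvert \ge \varepsilon \bigr)
  \le \frac{\operatorname{Var}(\bar{y})}{\varepsilon^2}
  = \frac{\sigma^2}{n \, \varepsilon^2} .

% If instead every pair has correlation \rho, the variance stops shrinking:
\operatorname{Var}(\bar{y}) = \frac{\sigma^2}{n} + \frac{n-1}{n} \, \rho \, \sigma^2 .
```

Under these assumptions the bound tightens as $n$ grows only when the predictions are weakly correlated, which is one plausible reading of why a decorrelation term on the branches accompanies the Chebyshev constraint.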
