Generalizing Distance Covariance to Measure and Test Multivariate Mutual Dependence


Feb 25, 2018

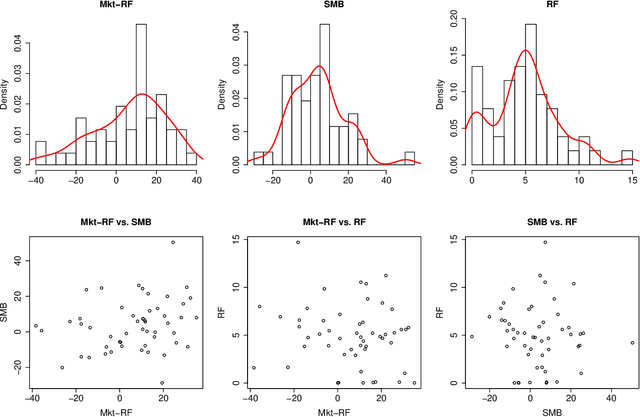

We propose three measures of mutual dependence between multiple random vectors. Each measure is zero if and only if the random vectors are mutually independent. The first measure generalizes distance covariance from pairwise dependence to mutual dependence, while the other two measures are sums of squared distance covariances. All three measures have properties and asymptotic distributions similar to those of distance covariance, and they capture non-linear and non-monotone mutual dependence between the random vectors. Inspired by complete and incomplete V-statistics, we define empirical measures and simplified empirical measures that trade off computational complexity against power when testing mutual independence. Implementation of the tests is demonstrated with both simulation results and real data examples.
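To make the "sums of squared distance covariances" idea concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: `dcov_sq` is the standard V-statistic estimate of squared distance covariance computed from double-centered distance matrices, and `sum_dcov_sq` assumes one natural construction in which each vector is compared with the stack of all vectors that follow it. That construction relies on the fact that the vectors are mutually independent exactly when each one is independent of the vectors after it. The function names and the toy data are hypothetical.

```python
import numpy as np


def _as_2d(x):
    """Return x as a float array of shape (n_samples, n_features)."""
    x = np.asarray(x, dtype=float)
    return x[:, None] if x.ndim == 1 else x


def _centered_dist(x):
    """Double-centered matrix of pairwise Euclidean distances."""
    x = _as_2d(x)
    d = np.sqrt(((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1))
    return (d - d.mean(axis=0, keepdims=True)
              - d.mean(axis=1, keepdims=True) + d.mean())


def dcov_sq(x, y):
    """Squared sample distance covariance (V-statistic) of two samples."""
    return float((_centered_dist(x) * _centered_dist(y)).mean())


def sum_dcov_sq(vectors):
    """Assumed construction: sum over c of dcov_sq(X_c, (X_{c+1}, ..., X_d))."""
    vectors = [_as_2d(v) for v in vectors]
    total = 0.0
    for c in range(len(vectors) - 1):
        rest = np.hstack(vectors[c + 1:])  # stack the remaining vectors
        total += dcov_sq(vectors[c], rest)
    return total


# Toy data: y depends on x non-monotonically, z is independent noise.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 1))
y = x ** 2
z = rng.normal(size=(200, 1))
stat = sum_dcov_sq([x, y, z])
```

The statistic is nonnegative by construction. In practice a p-value would come from a permutation scheme or from the asymptotic distribution; neither is shown here. The pairwise distance matrices make each `dcov_sq` call quadratic in the sample size.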
