GEM: Glare or Gloom, I Can Still See You -- End-to-End Multimodal Object Detector

Paper and Code

Feb 24, 2021

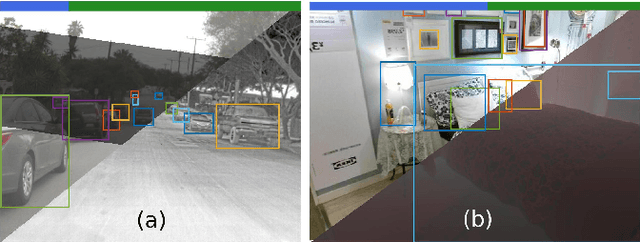

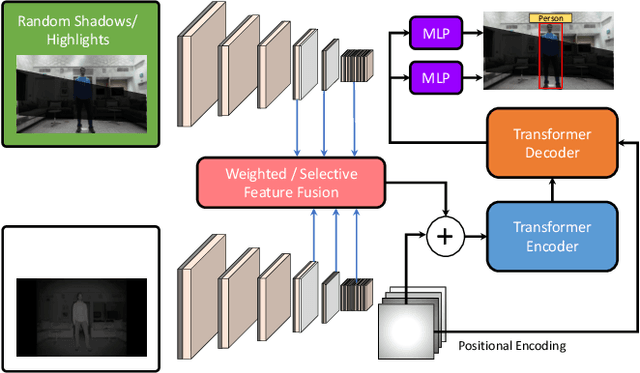

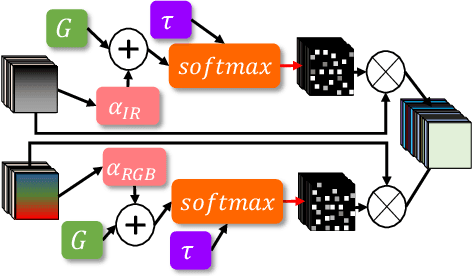

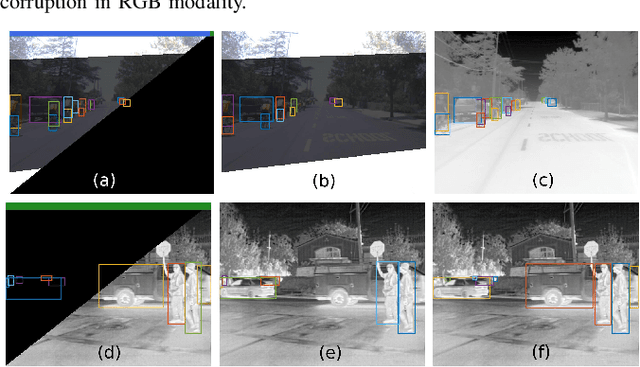

Deep neural networks designed for vision tasks are prone to failure when they encounter environmental conditions not covered by the training data. Efficient fusion strategies for multi-sensor configurations can make detection algorithms more robust by exploiting redundancy across sensor streams. In this paper, we propose sensor-aware multi-modal fusion strategies for 2D object detection in harsh lighting conditions. Our network learns to estimate the measurement reliability of each sensor modality in the form of scalar weights and masks, without prior knowledge of the sensor characteristics. The estimated weights are applied to the extracted feature maps, which are subsequently fused and passed to a transformer encoder-decoder network for object detection. This weighting is critical under asymmetric sensor failures, where one modality degrades while another remains reliable, and helps prevent tragic consequences. Through extensive experimentation, we show that the proposed strategies outperform existing state-of-the-art methods on the FLIR-Thermal dataset, improving mAP by up to 25.2%. We also propose a new "r-blended" hybrid depth modality for RGB-D multi-modal detection tasks. Our method also obtains promising results on the SUNRGB-D dataset.
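To make the scalar-weight fusion step concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. The softmax normalization, the weighted-sum fusion rule, and the names `softmax` and `fuse_feature_maps` are assumptions made for illustration. In the paper the reliability scores are learned by the network, whereas here they are supplied by hand, and the mask-based variant is omitted.

```python
import math


def softmax(scores):
    """Normalize raw reliability scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_feature_maps(feature_maps, reliability_scores):
    """Fuse per-modality 2D feature maps with one scalar weight each.

    feature_maps: list of equally shaped 2D lists, one per sensor modality.
    reliability_scores: one raw score per modality (hand-picked here;
        assumed to come from a learned sub-network in a real model).
    Returns (weights, fused_map).
    """
    if len(feature_maps) != len(reliability_scores):
        raise ValueError("need exactly one score per feature map")

    weights = softmax(reliability_scores)
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    fused = [[0.0] * cols for _ in range(rows)]

    # Weighted sum: each modality contributes in proportion to its weight.
    for weight, fmap in zip(weights, feature_maps):
        for i in range(rows):
            for j in range(cols):
                fused[i][j] += weight * fmap[i][j]
    return weights, fused


# Toy 2x2 feature maps standing in for RGB and thermal backbone outputs.
rgb_features = [[1.0, 2.0], [3.0, 4.0]]
thermal_features = [[5.0, 6.0], [7.0, 8.0]]

# Equal scores give equal weights, so the result is the plain average.
equal_weights, averaged = fuse_feature_maps(
    [rgb_features, thermal_features], [0.0, 0.0]
)

# A much higher thermal score (e.g. RGB blinded by glare) shifts the
# fused map toward the thermal features.
skewed_weights, thermal_heavy = fuse_feature_maps(
    [rgb_features, thermal_features], [-4.0, 4.0]
)
```

With equal scores, both modalities contribute equally. When one score dominates, the fused map follows the more reliable sensor, which is the behavior the abstract describes for asymmetric sensor failures.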
