Gaussian Differential Privacy


May 30, 2019

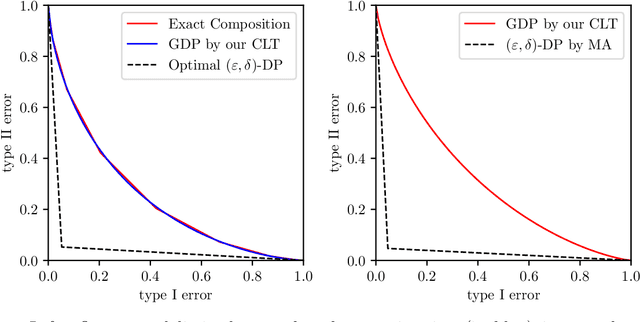

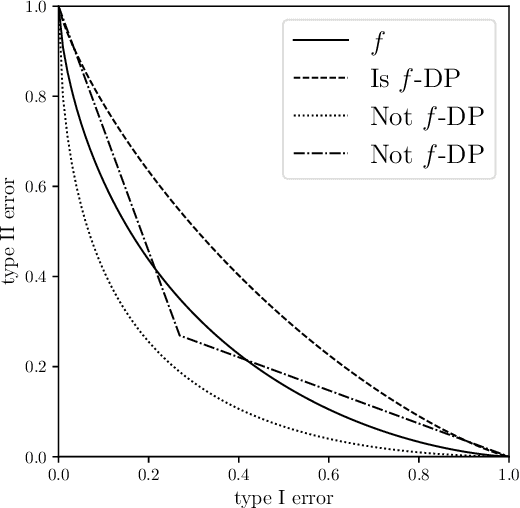

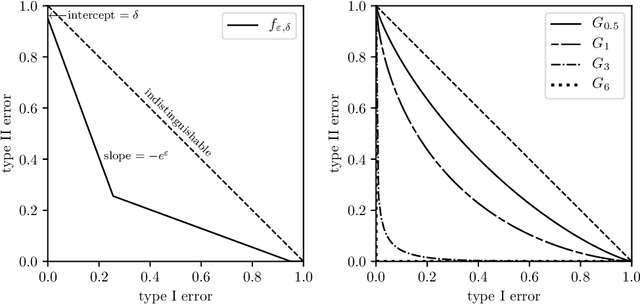

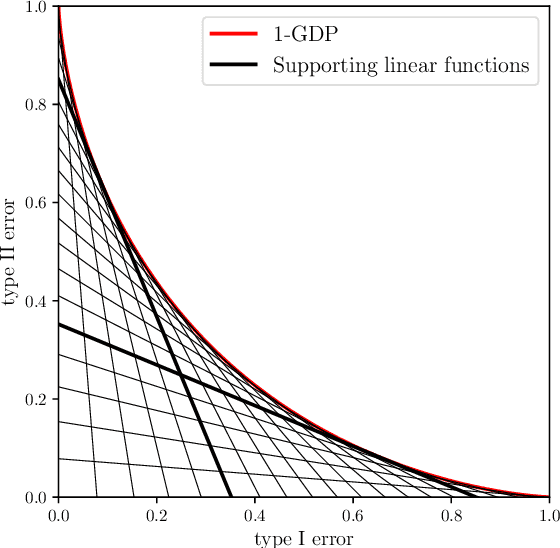

Differential privacy has seen remarkable success as a rigorous and practical formalization of data privacy over the past decade. This privacy definition and its divergence-based relaxations, however, have several acknowledged weaknesses, either in handling composition of private algorithms or in analyzing important primitives like privacy amplification by subsampling. Inspired by the hypothesis testing formulation of privacy, this paper proposes a new relaxation, which we term "$f$-differential privacy" ($f$-DP). This notion of privacy has a number of appealing properties and, in particular, avoids difficulties associated with divergence-based relaxations. First, $f$-DP preserves the hypothesis testing interpretation. In addition, $f$-DP allows for lossless reasoning about composition in an algebraic fashion. Moreover, we provide a powerful technique to import existing results proven for original DP to $f$-DP and, as an application, obtain a simple subsampling theorem for $f$-DP. In addition to the above findings, we introduce a canonical single-parameter family of privacy notions within the $f$-DP class that is referred to as "Gaussian differential privacy" (GDP), defined based on testing two shifted Gaussians. GDP is focal among the $f$-DP class because of a central limit theorem we prove. More precisely, the privacy guarantees of *any* hypothesis-testing-based definition of privacy (including original DP) converge to GDP in the limit under composition. The CLT also yields a computationally inexpensive tool for analyzing the exact composition of private algorithms. Taken together, this collection of attractive properties renders $f$-DP a mathematically coherent, analytically tractable, and versatile framework for private data analysis. Finally, we demonstrate the use of the tools we develop by giving an improved privacy analysis of noisy stochastic gradient descent.
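To make the "two shifted Gaussians" picture concrete, the following is a minimal sketch of the quantities usually associated with $\mu$-GDP. The abstract does not state these formulas, so they are assumptions here: the trade-off function $G_\mu(\alpha) = \Phi(\Phi^{-1}(1-\alpha) - \mu)$ for testing $N(0,1)$ against $N(\mu,1)$, the composition rule $\mu = \sqrt{\mu_1^2 + \dots + \mu_k^2}$, and the conversion $\delta(\varepsilon) = \Phi(-\varepsilon/\mu + \mu/2) - e^{\varepsilon}\,\Phi(-\varepsilon/\mu - \mu/2)$. The function names are hypothetical and are not taken from the authors' code.

```python
import math
from statistics import NormalDist

# Standard normal N(0, 1); provides Phi (cdf) and Phi^{-1} (inv_cdf).
_STD_NORMAL = NormalDist()


def gdp_tradeoff(alpha: float, mu: float) -> float:
    """Smallest type II error at type I error `alpha` when testing
    N(0, 1) against N(mu, 1): G_mu(alpha) = Phi(Phi^{-1}(1 - alpha) - mu)."""
    if alpha <= 0.0:
        return 1.0  # limiting value; inv_cdf is undefined at the endpoints
    if alpha >= 1.0:
        return 0.0
    return _STD_NORMAL.cdf(_STD_NORMAL.inv_cdf(1.0 - alpha) - mu)


def compose_gdp(mus) -> float:
    """GDP parameter of the composition of mu_i-GDP mechanisms
    (assumed rule: square root of the sum of squares)."""
    return math.sqrt(sum(m * m for m in mus))


def gdp_to_delta(mu: float, eps: float) -> float:
    """delta such that a mu-GDP mechanism (mu > 0) is (eps, delta)-DP,
    using the assumed conversion formula from the lead-in."""
    phi = _STD_NORMAL.cdf
    return phi(-eps / mu + mu / 2.0) - math.exp(eps) * phi(-eps / mu - mu / 2.0)


if __name__ == "__main__":
    # Illustrative numbers only: 100 steps, each assumed to be 0.1-GDP.
    total_mu = compose_gdp([0.1] * 100)
    print("composed mu:", total_mu)
    print("delta at eps = 1:", gdp_to_delta(total_mu, 1.0))
    print("type II error at alpha = 0.05:", gdp_tradeoff(0.05, total_mu))
```

Under these assumptions, composing many mechanisms reduces to tracking a single number $\mu$, which is the sense in which the single-parameter family is convenient for privacy accounting.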
