Free-form Lesion Synthesis Using a Partial Convolution Generative Adversarial Network for Enhanced Deep Learning Liver Tumor Segmentation


Jun 18, 2022

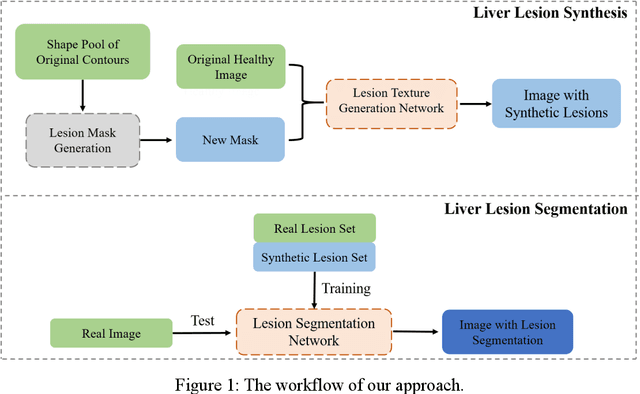

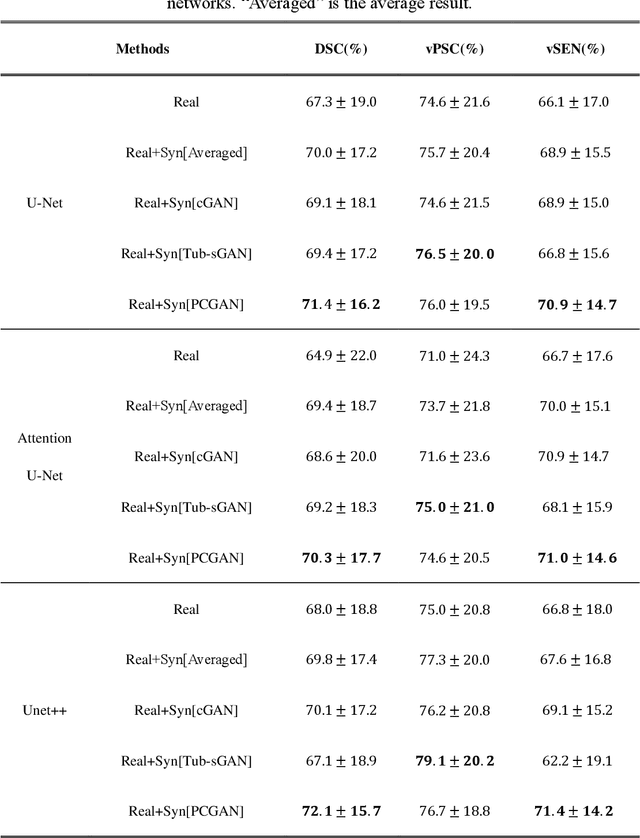

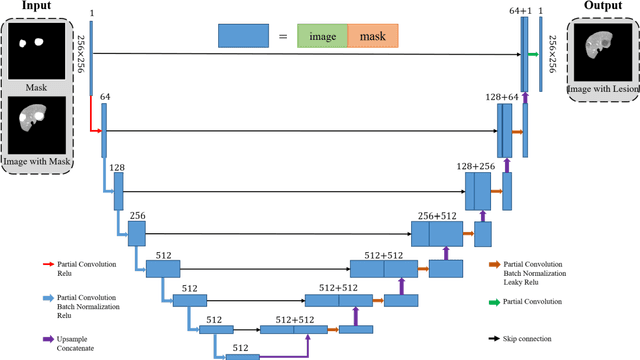

Automatic deep learning segmentation models have been shown to improve both segmentation efficiency and accuracy. However, training a robust segmentation model requires a considerably large number of labeled training samples, which may be impractical to obtain. This study aimed to develop a deep learning framework for generating synthetic lesions that can be used to enhance network training. The lesion synthesis network is a modified generative adversarial network (GAN). Specifically, we introduced a partial convolution strategy to construct a U-Net-like generator. The discriminator is designed using Wasserstein GAN with gradient penalty and spectral normalization. A mask generation method based on principal component analysis was developed to model various lesion shapes. The generated masks are then converted into liver lesions by the lesion synthesis network. The lesion synthesis framework was evaluated on lesion textures, and the synthetic lesions were used to train a lesion segmentation network to further validate the effectiveness of the framework. All networks were trained and tested on the public dataset from LITS. The synthetic lesions generated by the proposed approach have histogram distributions very similar to those of the real lesions for the two employed texture parameters, GLCM-energy and GLCM-correlation. The Kullback-Leibler divergences of GLCM-energy and GLCM-correlation were 0.01 and 0.10, respectively. Including the synthetic lesions in the training of the tumor segmentation network improved the Dice score of U-Net significantly, from 67.3% to 71.4% (p<0.05). Meanwhile, the volume precision and sensitivity improved from 74.6% to 76.0% (p=0.23) and from 66.1% to 70.9% (p<0.01), respectively. The synthetic data significantly improves the segmentation performance.
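The two headline metrics in the abstract, the Kullback-Leibler divergence between texture-feature histograms and the Dice score, can be illustrated with a minimal sketch. This is not the authors' code: the function names, the bin count, the value range, the smoothing constant `eps`, and the direction of the divergence (real relative to synthetic) are all assumptions made for illustration, and the masks are treated as flat sequences of 0/1 labels.

```python
import math


def histogram(values, bins, lo, hi):
    """Count values into `bins` equal-width bins over [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        if lo <= v <= hi:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
    return counts


def kl_divergence(real_values, synth_values, bins=32, lo=0.0, hi=1.0, eps=1e-10):
    """KL(real || synthetic) between normalized histograms on shared bins.

    `eps` is an assumed smoothing term that keeps empty bins from
    producing log(0) or a division by zero.
    """
    p = [c + eps for c in histogram(real_values, bins, lo, hi)]
    q = [c + eps for c in histogram(synth_values, bins, lo, hi)]
    p_total, q_total = sum(p), sum(q)
    return sum(
        (pi / p_total) * math.log((pi / p_total) / (qi / q_total))
        for pi, qi in zip(p, q)
    )


def dice_score(pred, target):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for binary masks."""
    intersection = sum(1 for a, b in zip(pred, target) if a and b)
    total = sum(1 for a in pred if a) + sum(1 for b in target if b)
    # Two empty masks are treated as a perfect match (a convention, assumed here).
    return 1.0 if total == 0 else 2.0 * intersection / total
```

In this sketch, `real_values` and `synth_values` would be per-lesion values of a texture feature such as GLCM-energy, computed separately; a divergence near zero indicates closely matching distributions, which is how values like 0.01 and 0.10 are read.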
