ForMIC: Foraging via Multiagent RL with Implicit Communication

Jun 15, 2020

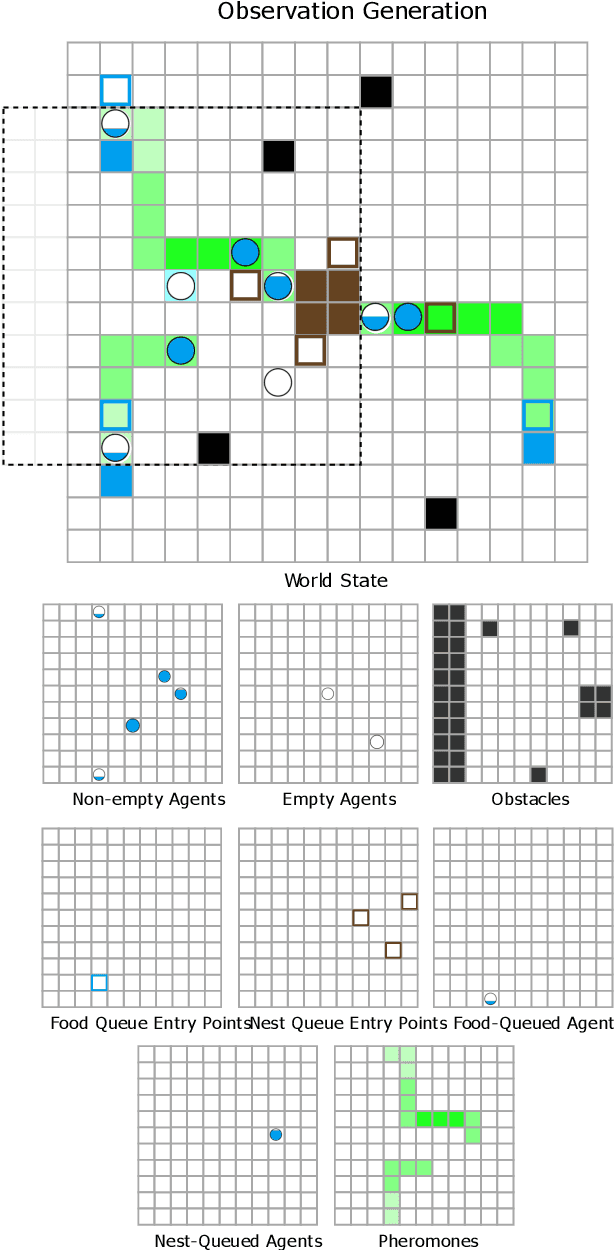

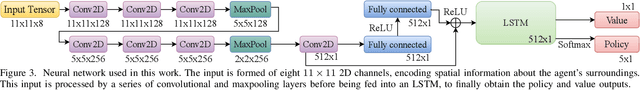

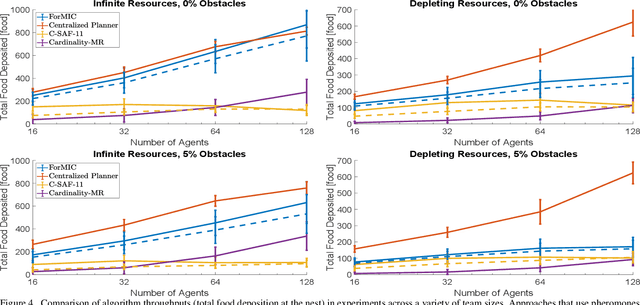

Multi-agent foraging (MAF) involves distributing a team of agents to search an environment and extract resources from it. Many foraging algorithms use biologically inspired signaling mechanisms, such as pheromones, to help agents navigate from resources back to a central nest while relying on local sensing only. However, these approaches often assume predictable pheromone dynamics and/or perfect robot localization. In nature, certain environmental factors (e.g., heat or rain) can disturb or destroy pheromone trails, while imperfect sensing can lead robots astray. In this work, we propose ForMIC, a distributed reinforcement learning MAF approach that uses pheromones to endow agents with implicit communication abilities via their shared environment. Specifically, full agents involuntarily lay trails of pheromones as they move; other agents can then measure the local pheromone levels to guide their individual decisions. We show how these stigmergic interactions among agents can lead to a highly scalable, decentralized MAF policy that is naturally resilient to common environmental disturbances, such as depleting resources and sudden pheromone disappearance. We present simulation results that compare our learned policy against existing state-of-the-art MAF algorithms in a set of experiments that vary team size, the number and placement of resources, and key environmental disturbances. Our results demonstrate that our learned policy outperforms these baselines, approaching the performance of a planner with full observability and centralized agent allocation.

Preprint of the paper submitted to the IEEE Transactions on Robotics (T-RO) journal's special issue on Resilience in Networked Robotic Systems in June 2020.
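The stigmergic mechanism described in the abstract (full agents deposit pheromone as they move, other agents read only the local pheromone levels, and a disturbance can erase the trail) can be illustrated with a minimal grid sketch. This is not the ForMIC implementation: the grid representation, the function names `deposit`, `sense_local`, and `wipe`, the deposit amount, and the sensing radius are all hypothetical choices made for illustration, and "full" is assumed here to mean an agent that is carrying a resource.

```python
from typing import List, Tuple

Grid = List[List[float]]


def make_grid(rows: int, cols: int) -> Grid:
    """Create a pheromone map with no pheromone anywhere."""
    return [[0.0] * cols for _ in range(rows)]


def deposit(grid: Grid, pos: Tuple[int, int], is_full: bool,
            amount: float = 1.0) -> None:
    """Lay pheromone on the agent's current cell, but only if it is full.

    The deposit amount is a hypothetical parameter, not a value from the paper.
    """
    if is_full:
        r, c = pos
        grid[r][c] += amount


def sense_local(grid: Grid, pos: Tuple[int, int], radius: int = 1) -> Grid:
    """Return the square window of pheromone levels centered on pos.

    Cells outside the map are reported as 0.0, so the window always has
    (2 * radius + 1) rows and columns.
    """
    r0, c0 = pos
    rows, cols = len(grid), len(grid[0])
    window = []
    for r in range(r0 - radius, r0 + radius + 1):
        row = []
        for c in range(c0 - radius, c0 + radius + 1):
            inside = 0 <= r < rows and 0 <= c < cols
            row.append(grid[r][c] if inside else 0.0)
        window.append(row)
    return window


def wipe(grid: Grid) -> None:
    """Model a sudden pheromone disappearance by zeroing the whole map."""
    for row in grid:
        for c in range(len(row)):
            row[c] = 0.0


# A full agent walks along row 2 and leaves a trail behind it.
grid = make_grid(5, 5)
for cell in [(2, 0), (2, 1), (2, 2)]:
    deposit(grid, cell, is_full=True)

# An empty agent at (0, 0) leaves nothing.
deposit(grid, (0, 0), is_full=False)

# Another agent standing on the trail sees only its 3x3 neighborhood.
window = sense_local(grid, (2, 1))
```

In a learning setup, a window like the one returned by `sense_local` would be one possible input to each agent's policy; how ForMIC actually encodes its observations is not specified in the abstract.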
