Forecasting Loss of Signal in Optical Networks with Machine Learning


Jan 08, 2022

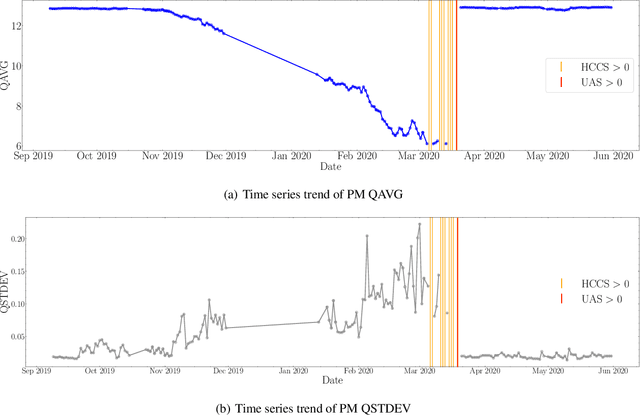

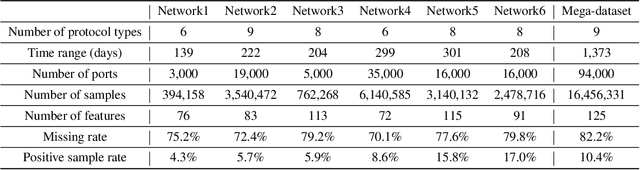

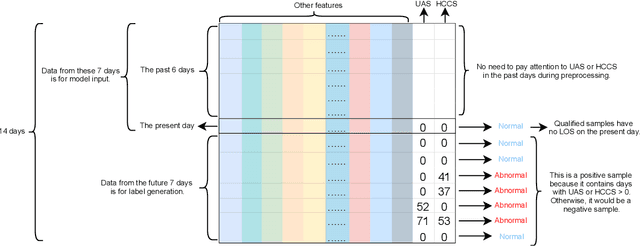

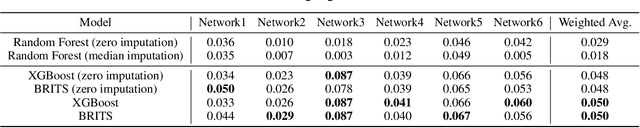

Loss of Signal (LOS) represents a significant cost for operators of optical networks. By studying large sets of real-world Performance Monitoring (PM) data collected from six international optical networks, we find that it is possible to forecast LOS events with good precision 1-7 days before they occur, albeit at relatively low recall, with supervised machine learning (ML). Our study covers twelve facility types, including 100G lines and ETH10G clients. We show that the precision for a given network improves when training on multiple networks simultaneously relative to training on an individual network. Furthermore, we show that it is possible to forecast LOS from all facility types and all networks with a single model, whereas fine-tuning for a particular facility or network only brings modest improvements. Hence our ML models remain effective for optical networks previously unknown to the model, which makes them usable for commercial applications.
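The trade-off mentioned in the abstract, good precision at relatively low recall, can be illustrated with a minimal sketch. It is not the authors' model or data: the scores, labels, thresholds, and the helper name `precision_recall` are all hypothetical, chosen only to show how raising the alarm threshold of a binary LOS classifier tends to increase precision while reducing recall.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when an alarm is raised for scores >= threshold."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y == 1)
    fp = sum(1 for p, y in zip(predicted, labels) if p and y == 0)
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical classifier scores for eight facilities (assumption, not paper data).
# A label of 1 means a LOS event occurred within the forecast window.
scores = [0.95, 0.90, 0.70, 0.60, 0.40, 0.35, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

# A strict threshold raises few alarms: all are correct, but half the events are missed.
high = precision_recall(scores, labels, threshold=0.8)

# A lenient threshold catches every event but also raises false alarms.
low = precision_recall(scores, labels, threshold=0.3)

print("threshold 0.8:", high)
print("threshold 0.3:", low)
```

In an operational setting, a high-precision operating point may be preferable when each false alarm triggers a costly intervention, which is consistent with the emphasis the abstract places on precision.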
