Flexible Portrait Image Editing with Fine-Grained Control

Paper and Code

Apr 04, 2022

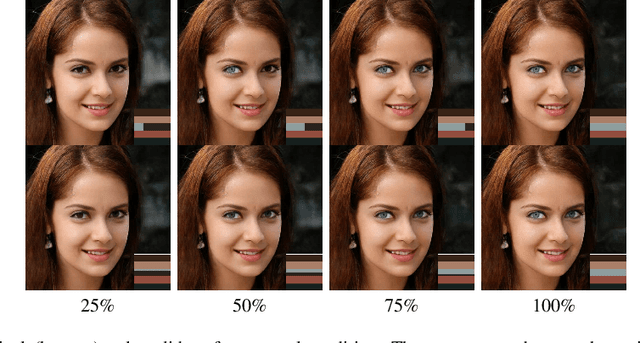

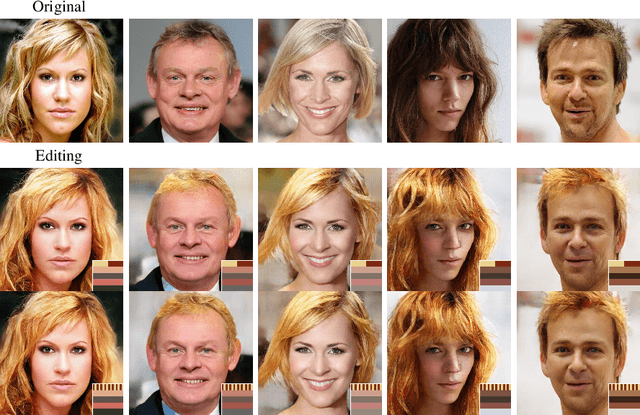

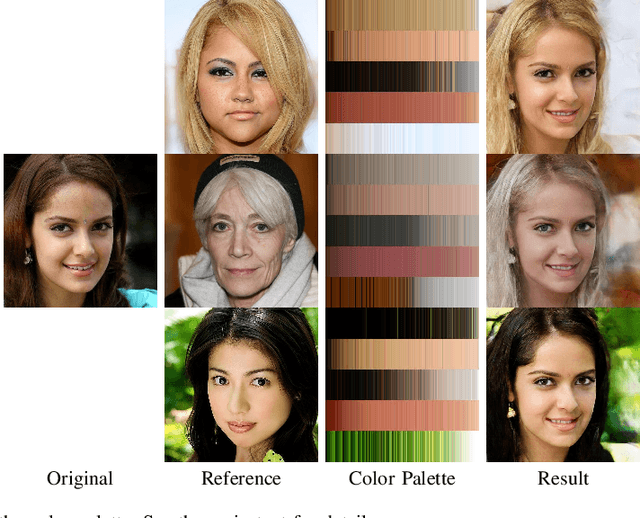

We develop a new method for portrait image editing that supports fine-grained editing of geometry, colors, lights, and shadows using a single neural network model. We adopt a novel asymmetric conditional GAN architecture: the generators take transformed conditional inputs, such as edge maps, color palettes, sliders, and masks, that the user can edit directly; the discriminators take the conditional inputs in a form that guides controllable image generation more effectively. Taking color editing as an example, we feed color palettes (which are easy to edit) into the generator and color maps (which contain positional information about colors) into the discriminator. We also design a region-weighted discriminator so that higher weights are assigned to more important regions, such as the eyes and skin. Using a color palette, the user can directly specify the desired colors of the hair, skin, eyes, lips, and background. Color sliders allow the user to blend colors in an intuitive manner. The user can also edit lights and shadows by modifying the corresponding masks. We demonstrate the effectiveness of our method by evaluating it on the CelebAMask-HQ dataset across a wide range of tasks, including geometry, color, shadow, and light editing, hand-drawn sketch-to-image translation, and color transfer. We also present ablation studies to justify our design.
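To make the palette versus color map distinction and the region weighting more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names, the use of per-region mean colors as the palette, the integer region labels, and the normalization by the sum of weights are all assumptions made for illustration; the abstract does not specify any of them.

```python
from typing import Dict, List, Tuple

Color = Tuple[float, float, float]
Image = List[List[Color]]      # rows of RGB pixels
Labels = List[List[int]]       # rows of region ids (hypothetical: 0 = background, 1 = skin)


def palette_from_labels(image: Image, labels: Labels) -> Dict[int, Color]:
    """Assumed palette: the mean RGB color of each region, with no positional information."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for img_row, lab_row in zip(image, labels):
        for (r, g, b), lab in zip(img_row, lab_row):
            s = sums.setdefault(lab, [0.0, 0.0, 0.0])
            s[0] += r
            s[1] += g
            s[2] += b
            counts[lab] = counts.get(lab, 0) + 1
    return {lab: (s[0] / counts[lab], s[1] / counts[lab], s[2] / counts[lab])
            for lab, s in sums.items()}


def color_map_from_palette(palette: Dict[int, Color], labels: Labels) -> Image:
    """Paint every pixel with its region's palette color, which restores positional information."""
    return [[palette[lab] for lab in row] for row in labels]


def region_weighted_loss(per_pixel_loss: List[List[float]],
                         labels: Labels,
                         region_weights: Dict[int, float],
                         default_weight: float = 1.0) -> float:
    """Weighted mean of per-pixel losses; regions absent from region_weights get default_weight.

    Dividing by the sum of weights is an assumption of this sketch.
    """
    total = 0.0
    weight_sum = 0.0
    for loss_row, lab_row in zip(per_pixel_loss, labels):
        for loss, lab in zip(loss_row, lab_row):
            w = region_weights.get(lab, default_weight)
            total += w * loss
            weight_sum += w
    return total / weight_sum
```

In this sketch, a user would edit an entry of the dictionary returned by `palette_from_labels`, while `color_map_from_palette` produces the position-aware input of the kind the abstract describes feeding to the discriminator. Passing a larger weight for a region in `region_weights` makes errors in that region count more toward the loss.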
