Flexible, Non-parametric Modeling Using Regularized Neural Networks


Dec 18, 2020

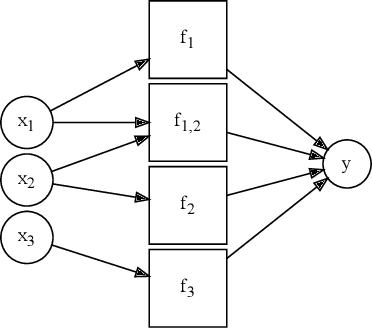

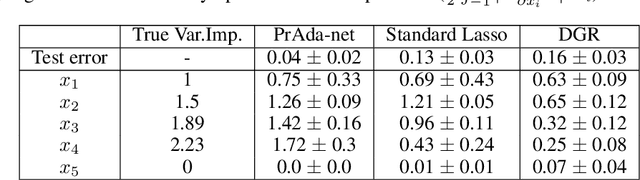

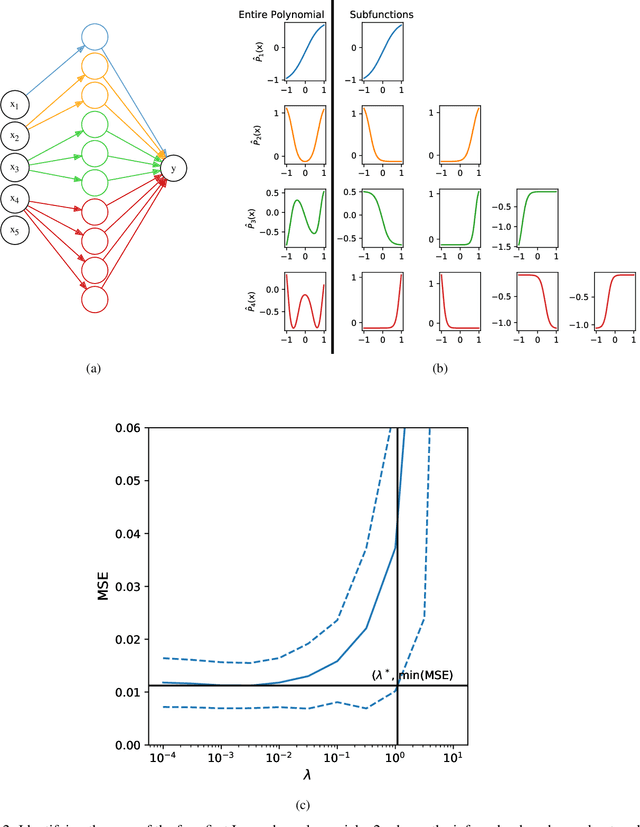

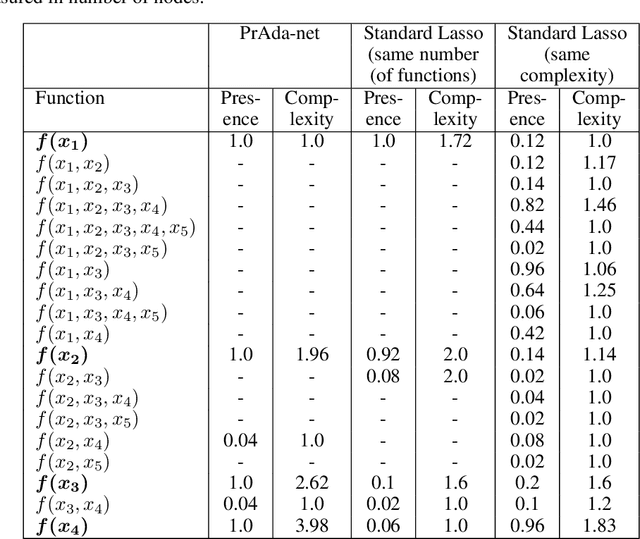

Neural networks excel in predictive performance, with little or no need for manual screening of variables or guided definition of network architecture. However, these flexible, data-adaptive models are often difficult to interpret. Here, we propose PrAda-net, a new method for enhancing interpretability that builds on proximal gradient descent and adaptive lasso. In contrast to other lasso-based algorithms, PrAda-net penalizes all network links individually and, by removing links with smaller weights, automatically adjusts the size of the neural network to capture the complexity of the underlying data-generating model, thus increasing interpretability. In addition, the compact network obtained by PrAda-net can be used to identify relevant dependencies in the data, making it suitable for non-parametric statistical modelling with automatic model selection. We demonstrate PrAda-net on simulated data, where we compare its test error performance, variable importance, and variable subset identification properties to those of other lasso-based approaches. We also apply PrAda-net to the massive U.K. black smoke data set to demonstrate its capability as an alternative to generalized additive models (GAMs), which often require domain knowledge to select the functional forms of the additive components. PrAda-net, in contrast, requires no such pre-selection while still resulting in interpretable additive components.
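The two ingredients named in the abstract, proximal gradient descent and the adaptive lasso, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function names, the exponent `gamma`, the stabilizer `eps`, and the choice to derive the penalty factors from an initial weight estimate are all assumptions made for illustration. The sketch only shows the general mechanism: each weight (network link) receives its own penalty, and the proximal step sets sufficiently small weights exactly to zero.

```python
import numpy as np


def soft_threshold(w, thresh):
    """Proximal operator of a (weighted) l1 penalty, applied elementwise.

    Shrinks every entry toward zero by `thresh` and sets entries with
    magnitude below `thresh` exactly to zero.
    """
    return np.sign(w) * np.maximum(np.abs(w) - thresh, 0.0)


def adaptive_penalty_factors(w_init, gamma=2.0, eps=1e-8):
    """Adaptive-lasso style factors (hypothetical helper).

    Weights that were small in an initial fit get a large penalty factor,
    weights that were large get a small one. `gamma` and `eps` are
    illustrative defaults, not values taken from the paper.
    """
    return 1.0 / (np.abs(w_init) ** gamma + eps)


def proximal_step(w, grad, lr, lam, factors=None):
    """One proximal gradient step with an individual penalty per weight.

    First takes a plain gradient step on the smooth loss, then applies
    soft-thresholding with threshold lr * lam * factors.
    """
    if factors is None:
        factors = np.ones_like(w)  # plain lasso: same penalty for all weights
    return soft_threshold(w - lr * grad, lr * lam * factors)


# Toy usage: two links, one strong and one weak, with a zero gradient.
w = np.array([1.0, 0.05])
factors = adaptive_penalty_factors(w)
w_new = proximal_step(w, grad=np.zeros_like(w), lr=0.1, lam=0.1, factors=factors)
```

In this toy call the weak link receives a much larger penalty factor than the strong one, so it is removed (set to zero) while the strong link is only slightly shrunk. In a network, `w` would be a layer's weight matrix and `grad` the gradient of the training loss with respect to it.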
