Finite-Time Analysis of Asynchronous Q-learning under Diminishing Step-Size from Control-Theoretic View

Paper and Code

Jul 25, 2022

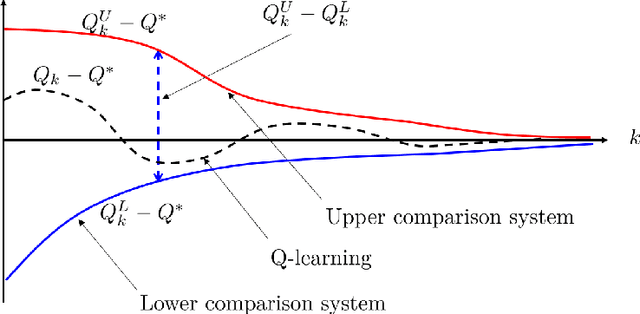

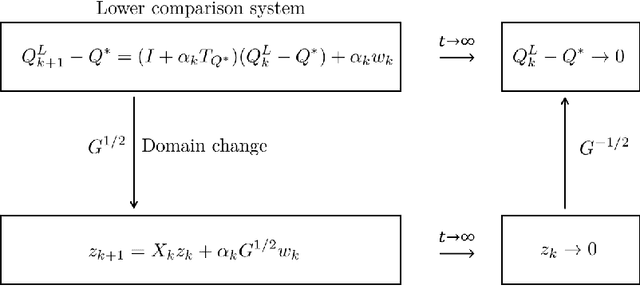

Q-learning has long been one of the most popular reinforcement learning algorithms, and theoretical analysis of Q-learning has been an active research topic for decades. Although research on the asymptotic convergence of Q-learning has a long tradition, non-asymptotic convergence has only recently come under active study. The main goal of this paper is to investigate a new finite-time analysis of asynchronous Q-learning under Markovian observation models from a control system viewpoint. In particular, we introduce a discrete-time time-varying switching system model of Q-learning with diminishing step-sizes for our analysis, which significantly improves on the recent switching system analysis with constant step-sizes and leads to an \(\mathcal{O}\left( \sqrt{\frac{\log k}{k}} \right)\) convergence rate that is comparable to or better than most state-of-the-art results in the literature. Meanwhile, a technique based on the similarity transformation is newly applied to avoid the difficulty that diminishing step-sizes pose for the analysis. The proposed analysis brings additional insights, covers different scenarios, and provides new simplified templates for analysis that deepen our understanding of Q-learning via its unique connection to discrete-time switching systems.
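For readers unfamiliar with the setting, the following is a minimal sketch of tabular asynchronous Q-learning along a single Markovian trajectory with a diminishing step-size. It is an illustration only, not the paper's implementation: the function name `async_q_learning`, the parameters `theta` and `xi`, and the schedule \(\alpha_k = \theta/(k+\xi)\) are assumptions chosen for the example, and the paper's exact step-size rule may differ.

```python
import random


def async_q_learning(P, R, gamma, behavior, num_steps, theta=1.0, xi=1.0, seed=0):
    """Tabular asynchronous Q-learning on one Markovian trajectory (sketch).

    P[s][a]      -- list of next-state probabilities
    R[s][a]      -- deterministic reward for taking action a in state s
    behavior[s]  -- action probabilities of the fixed behavior policy
    theta, xi    -- hypothetical step-size parameters: alpha_k = theta / (k + xi)
    """
    rng = random.Random(seed)
    n_states, n_actions = len(P), len(P[0])
    Q = [[0.0] * n_actions for _ in range(n_states)]
    s = rng.randrange(n_states)  # arbitrary initial state

    for k in range(num_steps):
        # Sample one transition from the current state (Markovian observations).
        a = rng.choices(range(n_actions), weights=behavior[s])[0]
        s_next = rng.choices(range(n_states), weights=P[s][a])[0]

        # Diminishing step-size (assumed schedule, see the note above).
        alpha = theta / (k + xi)

        # Asynchronous update: only the visited (s, a) entry changes.
        td_target = R[s][a] + gamma * max(Q[s_next])
        Q[s][a] += alpha * (td_target - Q[s][a])

        s = s_next
    return Q


if __name__ == "__main__":
    # Toy two-state, two-action MDP (made-up numbers for illustration).
    P = [[[0.9, 0.1], [0.2, 0.8]],
         [[0.5, 0.5], [0.1, 0.9]]]
    R = [[1.0, 0.0], [0.0, 1.0]]
    behavior = [[0.5, 0.5], [0.5, 0.5]]  # uniform exploration
    print(async_q_learning(P, R, gamma=0.9, behavior=behavior, num_steps=50_000))
```

Because only one state-action entry is updated per step, the update matrix changes with the visited pair and the greedy action, which is what makes a switching system description natural for this iteration.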
