Fine-grained Event Learning of Human-Object Interaction with LSTM-CRF


Sep 30, 2017

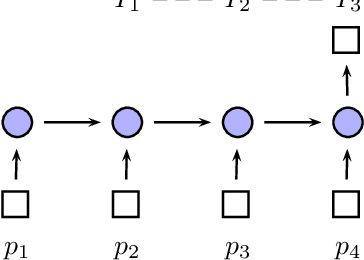

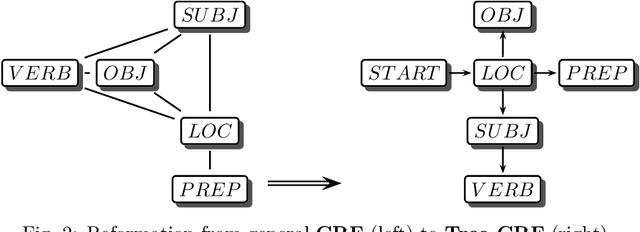

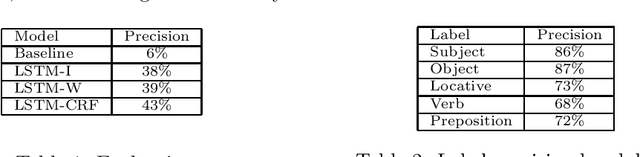

Event learning is one of the most important problems in AI. However, despite significant research effort, it remains a complex task, especially when the events involve the interaction of humans or agents with other objects, because it requires modeling both human kinematics and object movements. This study proposes a methodology for learning complex human-object interaction (HOI) events that covers the recording, annotation, and classification of event interactions. For annotation, we allow multiple interpretations of a motion capture by slicing over its temporal span; for classification, we use Long Short-Term Memory (LSTM) sequential models with a Conditional Random Field (CRF) to constrain the outputs. Using a setup that takes captures of human-object interaction as three-dimensional inputs, we argue that this approach could be used for event types involving complex spatio-temporal dynamics.
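
The idea of slicing a motion capture over its temporal span can be illustrated with a minimal sketch. The abstract does not specify how slices are chosen, so the function name `temporal_slices` and the parameters `min_len` and `step` below are hypothetical, and the enumeration scheme is an assumption rather than the authors' procedure. Each resulting span is a candidate sub-sequence that could receive its own annotation.

```python
from typing import List, Tuple


def temporal_slices(num_frames: int, min_len: int, step: int) -> List[Tuple[int, int]]:
    """Enumerate half-open (start, end) frame spans of a capture.

    Hypothetical helper: start and end both move in increments of `step`,
    and every span covers at least `min_len` frames.
    """
    if num_frames < 0 or min_len <= 0 or step <= 0:
        raise ValueError("num_frames must be >= 0; min_len and step must be > 0")

    spans = []
    # Last valid start leaves room for at least min_len frames.
    for start in range(0, num_frames - min_len + 1, step):
        for end in range(start + min_len, num_frames + 1, step):
            spans.append((start, end))
    return spans


# A 6-frame capture, sliced on a 2-frame grid.
spans = temporal_slices(num_frames=6, min_len=2, step=2)
for start, end in spans:
    print(f"frames [{start}, {end})")
```

Under these assumptions, one recording yields several overlapping spans, so the same capture can carry more than one event label, one per span.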
