FIND: Explainable Framework for Meta-learning


May 20, 2022

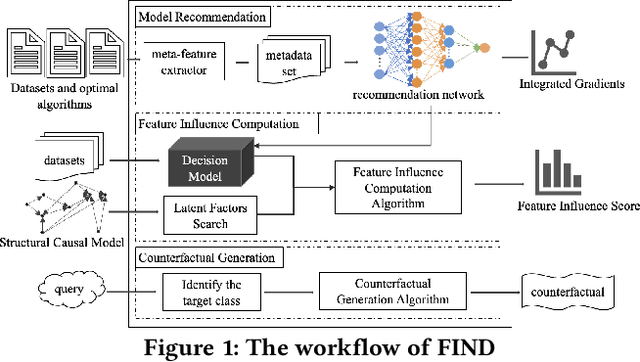

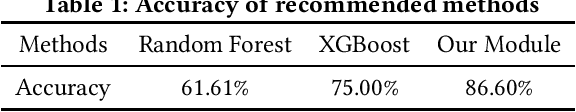

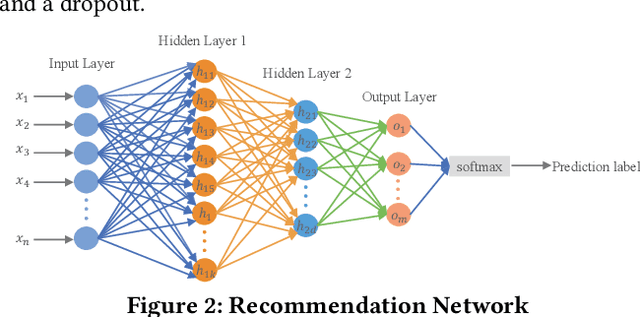

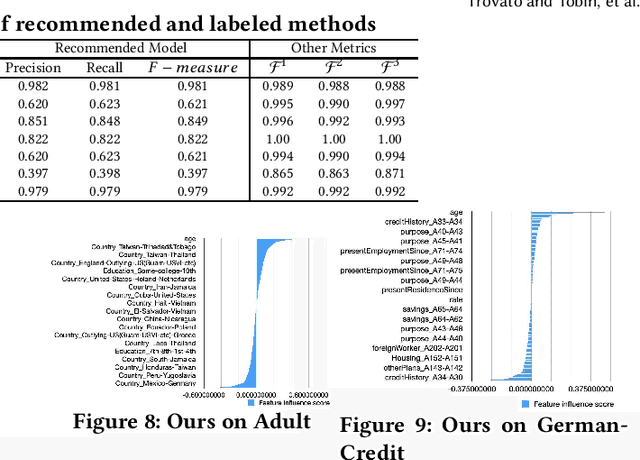

Meta-learning enables efficient, automatic selection of machine learning models by combining data with prior knowledge. Because traditional meta-learning techniques lack explainability and fall short on transparency and fairness, making meta-learning explainable is crucial. This paper proposes FIND, an interpretable meta-learning framework that not only explains the recommendations produced by meta-learning algorithm selection, but also provides a more complete and accurate explanation of how the recommended algorithm performs on specific datasets in the context of business scenarios. Extensive experiments demonstrate the validity and correctness of the framework.
