Few-shot Learning with Contextual Cueing for Object Recognition in Complex Scenes


Dec 17, 2019

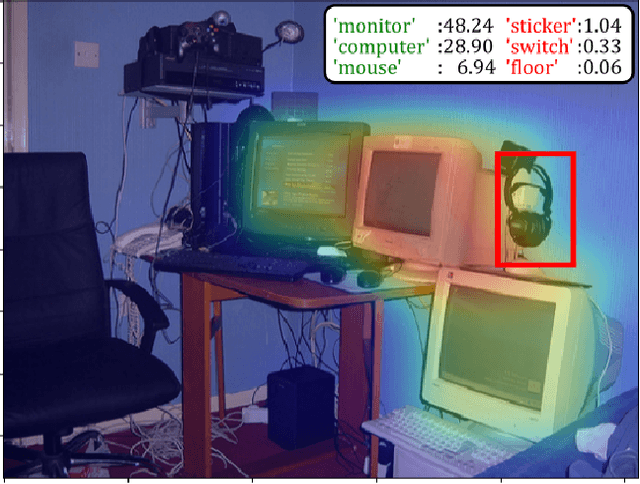

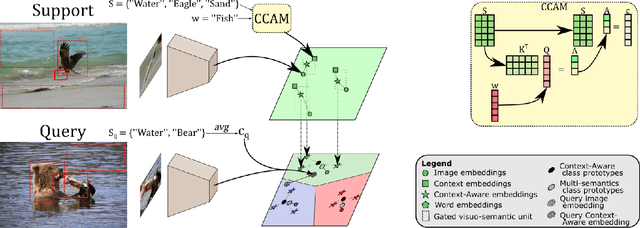

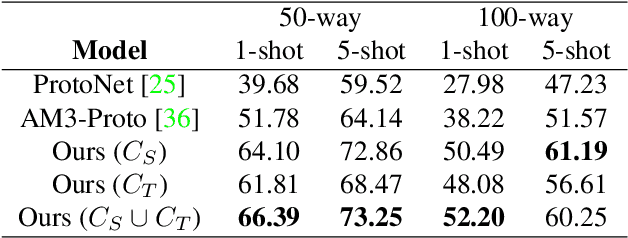

Few-shot learning aims to recognize new concepts from a small number of training examples. Recent work mainly tackles this problem by improving visual features, feature transfer, and meta-training algorithms. In this work, we propose to explore a complementary direction by using scene context semantics to learn and recognize new concepts more easily. Whereas a few visual examples cannot cover all intra-class variations, contextual cueing offers a complementary signal to classify instances with unseen features or ambiguous objects. More specifically, we propose a Class-conditioned Context Attention Module (CCAM) that learns to weight the most important context elements while learning a particular concept. We additionally propose a flexible gating mechanism to ground visual class representations in context semantics. We conduct extensive experiments on the Visual Genome dataset and show that, compared to a visual-only baseline, our model improves top-1 accuracy by 20.47% and 9.13% in the 5-way 1-shot and 5-way 5-shot settings, respectively, and by 20.42% and 12.45% in the 20-way 1-shot and 20-way 5-shot settings, respectively.
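The abstract does not give the exact form of CCAM or of the gating mechanism, so the following is only a rough sketch of the general idea, not the authors' implementation. It assumes scaled dot-product attention between a class query vector and the embeddings of the context elements, followed by an element-wise sigmoid gate that mixes the visual representation with the attended context. All function names, parameters, and the toy data are hypothetical.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_attention(class_query, context_embs):
    """Assumed attention form: weight each context element by its
    scaled dot-product similarity to a class-specific query."""
    scale = math.sqrt(len(class_query))
    weights = softmax([dot(c, class_query) / scale for c in context_embs])
    dim = len(class_query)
    summary = [sum(w * c[i] for w, c in zip(weights, context_embs))
               for i in range(dim)]
    return weights, summary


def gated_fusion(visual, context, gate_weights, gate_bias):
    """Assumed gate form: a per-dimension sigmoid gate, computed from the
    concatenated inputs, interpolates between visual and context vectors."""
    joint = visual + context  # list concatenation
    fused = []
    for v, c, row, b in zip(visual, context, gate_weights, gate_bias):
        g = 1.0 / (1.0 + math.exp(-(dot(row, joint) + b)))
        fused.append(g * v + (1.0 - g) * c)
    return fused


# Toy data (random, for illustration only).
rng = random.Random(0)
dim, num_context = 8, 5
class_query = [rng.gauss(0, 1) for _ in range(dim)]
context_embs = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_context)]
visual = [rng.gauss(0, 1) for _ in range(dim)]
gate_weights = [[0.1 * rng.gauss(0, 1) for _ in range(2 * dim)] for _ in range(dim)]
gate_bias = [0.0] * dim

weights, context_summary = context_attention(class_query, context_embs)
fused = gated_fusion(visual, context_summary, gate_weights, gate_bias)
```

In this sketch the attention weights sum to one over the context elements, and each fused dimension lies between the corresponding visual and context values, so the gate decides per dimension how much to rely on context. In a trained model the query and gate parameters would be learned rather than sampled at random.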
