FedQNN: Federated Learning using Quantum Neural Networks

Mar 16, 2024

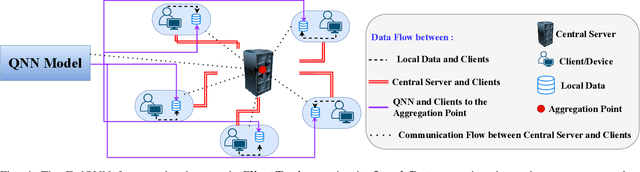

In this study, we explore Quantum Federated Learning (QFL) as a framework for training Quantum Machine Learning (QML) models over distributed networks. Conventional machine learning models frequently struggle with data privacy and the exposure of sensitive information. Our proposed Federated Quantum Neural Network (FedQNN) framework addresses this by integrating the distinctive characteristics of QML with the principles of classical federated learning. This work investigates QFL in depth, underscoring its capability to handle data securely in a distributed environment and to facilitate cooperative learning without direct data sharing. We validate the concept through experiments on varied datasets, including genomics and healthcare, demonstrating the versatility and efficacy of the FedQNN framework. Accuracy consistently exceeds 86% across three distinct datasets, indicating the framework's suitability for a range of QML tasks. Our research not only identifies the limitations of classical paradigms but also presents a novel framework for secure and collaborative QML.
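
The abstract does not specify how client models are combined, so the following is only a minimal sketch of one common choice in classical federated learning: a sample-weighted average of client parameters (FedAvg-style). It assumes each client trains a parameterized quantum circuit locally and shares only its parameter vector, never its raw data. The function name `federated_average`, the two-client setup, and the parameter values are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def federated_average(client_params: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Sample-weighted average of per-client parameter vectors (FedAvg-style).

    Assumption: every client holds the same model architecture, so all
    parameter vectors have the same length and the same ordering.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_params[0])
    if any(len(params) != dim for params in client_params):
        raise ValueError("all clients must share the same parameter shape")

    averaged = [0.0] * dim
    for params, n_samples in zip(client_params, client_sizes):
        weight = n_samples / total  # clients with more data contribute more
        for i, value in enumerate(params):
            averaged[i] += weight * value
    return averaged


# Hypothetical circuit parameters from two clients after local training.
clients = [[0.0, 1.0], [1.0, 3.0]]
sizes = [1, 3]  # hypothetical local dataset sizes
global_params = federated_average(clients, sizes)
print(global_params)
```

In a full training loop, the server would send `global_params` back to every client at the start of the next round. Whether plain averaging is appropriate for circuit rotation angles is itself an assumption of this sketch.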
