Federated Multi-Task Learning for Competing Constraints

Dec 08, 2020

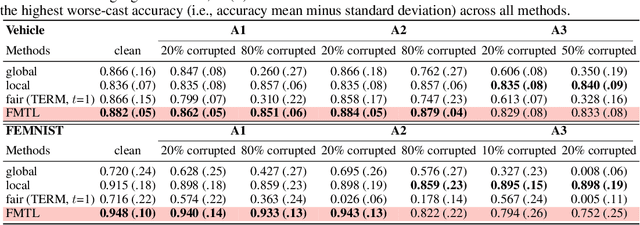

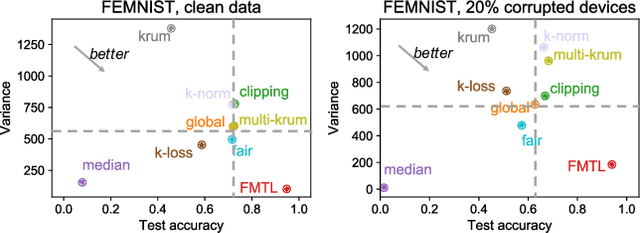

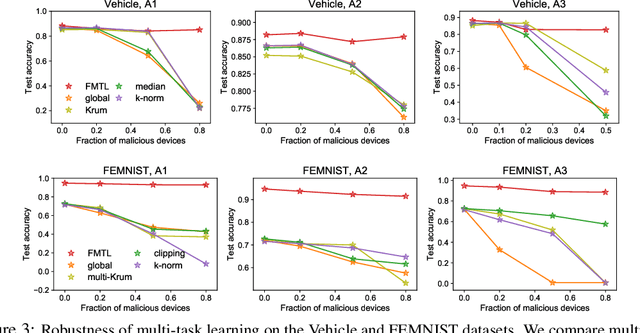

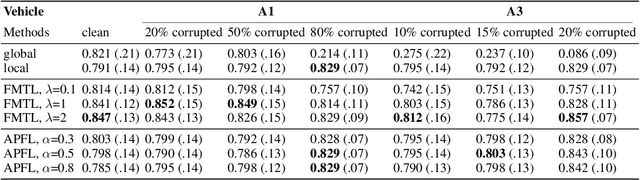

In addition to accuracy, fairness and robustness are two critical concerns for federated learning systems. In this work, we first identify that robustness to adversarial training-time attacks and fairness, measured as the uniformity of performance across devices, are competing constraints in statistically heterogeneous networks. To address these constraints, we propose employing a simple, general multi-task learning objective, and analyze the ability of the objective to achieve a favorable tradeoff between fairness and robustness. We develop a scalable solver for the objective and show that multi-task learning can enable more accurate, robust, and fair models relative to state-of-the-art baselines across a suite of federated datasets.
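
The abstract does not spell out the objective, so the sketch below is an illustration only and assumes one common form of a personalized multi-task objective. Each device k fits its own model v_k by minimizing its local loss F_k(v_k) plus a penalty (λ/2)·||v_k - w||² that pulls it toward a shared global model w. The quadratic device losses, the device optima, the step size, and the names `local_loss` and `personalize` are all hypothetical. Following the abstract's definition of fairness as uniformity of performance across devices, the sketch uses the variance of per-device loss as one possible uniformity measure.

```python
from statistics import pvariance


def local_loss(v, c):
    # Hypothetical device loss F_k(v) = 0.5 * ||v - c||^2, minimized at c.
    return 0.5 * sum((vi - ci) ** 2 for vi, ci in zip(v, c))


def personalize(c, w, lam, steps=200):
    # Assumed objective: h_k(v) = F_k(v) + (lam / 2) * ||v - w||^2.
    # Gradient descent starting from the global model w. h_k has curvature
    # (1 + lam), so this step size halves the distance to the optimum each step.
    lr = 0.5 / (1.0 + lam)
    v = list(w)
    for _ in range(steps):
        v = [vi - lr * ((vi - ci) + lam * (vi - wi))
             for vi, ci, wi in zip(v, c, w)]
    return v


# Hypothetical heterogeneous devices, each with a different optimum.
device_optima = [[0.0, 0.0], [2.0, 0.0], [0.0, 4.0]]
dim = len(device_optima[0])
# The average of these quadratic losses is minimized at the mean optimum,
# which stands in for the shared global model w in this toy setup.
w_global = [sum(c[i] for c in device_optima) / len(device_optima)
            for i in range(dim)]

global_losses = [local_loss(w_global, c) for c in device_optima]
print("global", [round(x, 3) for x in global_losses],
      "variance", round(pvariance(global_losses), 3))

results = {}
for lam in (0.0, 1.0, 100.0):
    models = [personalize(c, w_global, lam) for c in device_optima]
    losses = [local_loss(v, c) for v, c in zip(models, device_optima)]
    # Uniformity proxy: population variance of per-device loss (lower = more uniform).
    results[lam] = (models, losses, pvariance(losses))
    print("lam", lam, [round(x, 3) for x in losses],
          "variance", round(results[lam][2], 3))
```

Setting λ = 0 recovers purely local training, while a large λ keeps each device model close to the shared one; the variance line gives one way to compare how evenly the resulting models perform. This toy setup says nothing about robustness to training-time attacks, which is the other side of the tradeoff the abstract describes.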
